With this momentum, 2023 is poised to keep advancing the digital revolution. For reality capture and 3D modeling, surveying and mapping, lidar, and AEC digitization technologies, the tools and workflows seem to be on the verge of major change, or already across that threshold.


While it is difficult to narrow the list down from among the many tools and technologies, a few key themes have caught our attention at Geo Week, and we intend to follow them closely in 2023 and beyond, both at the upcoming Geo Week Conference and through our continued coverage here on Geo Week News.

1. Insights from AI and Machine Learning

When technologists first began to forecast trends in AI, they treated it as a stand-alone technology that might one day achieve sentience, but the applications for AI are typically much more straightforward and far-reaching. By taking over repetitive, time-consuming parts of data processing, trained systems can free up personnel for tasks that benefit from human judgment. In geospatial work, AI and machine learning are already being put to use, from training tools to identify and classify portions of point clouds to helping spot trends and deviations in large-scale datasets.

What’s on the horizon, however, are applications that move beyond simple classification tasks. By applying machine learning to data from infrastructure sensors, for example, complex models of traffic flow can be analyzed to support smarter urban development decisions or to simulate the effects of a change in traffic patterns. AI is also being used to create simulated data that can help with prediction, giving users tools that not only react to past events but also help target limited resources where they are most needed.

2. Leveraging Game Engines in Geospatial Applications

As the popularity of video games has surged over the last decade, so too has the power of the engines behind them. Epic Games, with its Unreal Engine, and Unity have developed remarkably realistic real-time 3D tools, and we’re already seeing unlikely partnerships with geospatial companies, including Esri and Cesium. Looking ahead to 2023, it feels like a given that these types of partnerships will only expand, both in number and in the capabilities they provide.

Those capabilities are quite impactful, too. Although 3D visualization has long offered more than 2D views for understanding spatial data, the tools simply haven’t been there to realize that value with large-scale geospatial datasets. Game engines can change that. They work particularly well for large models at city scale or beyond, as with Presagis’ 5D, a digital twin platform that combines the Unreal Engine with GIS platforms like ArcGIS. With these improved 3D visualization capabilities connected to real-world geospatial data, professionals will be able to gain more valuable insights by running realistic simulations within properly scaled visuals, and will be positioned to integrate other emerging technologies like augmented reality and virtual reality, and perhaps eventually the metaverse.

3. Bringing Datasets Together, Seamlessly

As new modes of data collection mature (including collection from satellites, mobile devices, new sensors, and UAVs), it is increasingly rare for a project to involve only one type of data. Bringing these different datasets together has sometimes required a patchwork workflow of exporting and importing data between applications, which is at best time-consuming and difficult and at worst devalues the additional data because combining it takes so much work. New workflows and a general willingness to “open” formerly proprietary software platforms (at least to APIs or other built-in connections) are helping to change that. Several sensor manufacturers and software companies have committed to promoting interoperability, and momentum is also gathering behind common standards and common data environments.

One recent example is a project by the Port of London Authority to digitize its assets, which include miles of shoreline, critical marine infrastructure such as flood control and security structures, commercial docks, and even famous landmarks along the Thames, notably London Bridge itself. By using software that can federate multiple types of data (from bathymetry to photos to lidar point clouds) in one place, the authority now has a more comprehensive view of those assets than ever before.

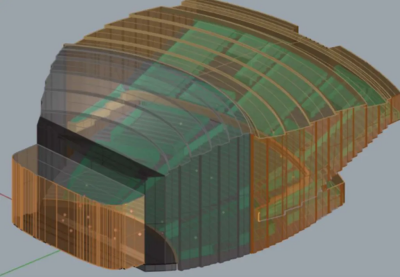

4. Using Advanced Visualization and AR/VR in AEC projects

This certainly isn’t the first year that advanced visualization technology, and more specifically augmented reality (AR) and virtual reality (VR), has been touted as a trend to watch, but it may finally be ready to take off in enterprise settings. The jury is still out on some of the social applications, but for the AEC industry in particular, these advanced visualization tools are already starting to deliver real value. With both the hardware and the modeling and capture processes for creating 3D visuals rapidly improving, 2023 looks like the year AR and VR move from theoretical to practical for a much wider audience.

The use cases for these visualization tools could touch many stages of the AEC project lifecycle, too. In the design phase, architects and engineers will have a more realistic, and interactive, “single source of truth” with which to refine their designs and spot potential clashes much earlier in the process. Collaboration in these phases also improves in this era of increasing remote work, with VR in particular offering an interactive collaboration experience even when participants are in different physical locations. Once construction has started, AR can be used to overlay 3D designs onto the physical site to detect potential issues before building in a given area begins, avoiding potentially costly delays. It can also serve as a preliminary training tool for new employees without shutting down a job site. These tools have long been more hype than tangible value, but the tide is starting to turn, and that shift should accelerate into 2023.

5. Calculating Carbon and Climate Impacts

By most estimates, buildings and construction account for anywhere from 25% to 40% of global carbon emissions. That figure includes everything from burning fossil fuels on-site to the impact of mining or harvesting the raw materials needed to complete a project. By understanding the carbon footprint of buildings, we can identify opportunities to reduce emissions and make more sustainable choices in the design, construction, and operation of buildings. The digitization of construction and AEC workflows has helped pave the way for this understanding. As BIM models become more detailed and real-time data is integrated into digital twins, measuring an asset’s impact is becoming less daunting.

Several major companies, including Bentley Systems, have been looking at building carbon calculation into their design tools, helping owners and designers make better decisions before breaking ground. By calculating a building’s carbon footprint, stakeholders and designers can identify the major sources of emissions and evaluate strategies for reducing them. For example, construction firms might consider low-carbon building materials, greater energy efficiency, or renewable energy sources for heating, cooling, and electricity. Carbon-counting tools such as EC3 (the Embodied Carbon in Construction Calculator) are now easier to integrate because better baseline information is becoming available, both from new structures and from assessments of as-built conditions. Going forward, carbon assessments may become as common as material bids and cost estimates in design proposals.
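At its core, an embodied-carbon estimate is simple arithmetic: multiply each material quantity by an emission factor (kg CO2e per unit of material) and sum the results, then rank the contributions to find the major sources. The minimal Python sketch below assumes a hypothetical bill of materials; the class and function names, material quantities, and emission factors are all illustrative assumptions, not EC3’s data or API. Real tools draw their factors from environmental product declarations (EPDs) and curated databases.

```python
from dataclasses import dataclass


@dataclass
class MaterialLine:
    """One line of a hypothetical bill of materials."""
    name: str
    quantity_kg: float            # mass of material used, in kg
    factor_kgco2e_per_kg: float   # emission factor (hypothetical values below)


def embodied_carbon(lines):
    """Return (total kg CO2e, {material: kg CO2e}) for a list of MaterialLine."""
    breakdown = {m.name: m.quantity_kg * m.factor_kgco2e_per_kg for m in lines}
    return sum(breakdown.values()), breakdown


def largest_sources(breakdown, n=3):
    """Rank materials by their contribution, largest first."""
    return sorted(breakdown.items(), key=lambda kv: kv[1], reverse=True)[:n]


if __name__ == "__main__":
    # Illustrative numbers only; real factors come from EPDs or databases.
    baseline = [
        MaterialLine("concrete", 500_000, 0.12),
        MaterialLine("rebar steel", 30_000, 1.9),
        MaterialLine("timber", 20_000, 0.4),
    ]
    # Same design, but with a hypothetical lower-carbon concrete mix.
    alternative = [
        MaterialLine("concrete (low-carbon mix)", 500_000, 0.08),
        *baseline[1:],
    ]

    base_total, base_parts = embodied_carbon(baseline)
    alt_total, _ = embodied_carbon(alternative)

    print(f"Baseline: {base_total:,.0f} kg CO2e")
    for name, kg in largest_sources(base_parts):
        print(f"  {name}: {kg:,.0f} kg CO2e")
    print(f"Low-carbon concrete saves {base_total - alt_total:,.0f} kg CO2e")
```

Swapping one factor and re-running the totals is the kind of early “what if” comparison described above; a fuller assessment would typically also consider other life-cycle stages, such as transport and end of life.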

6. Deriving Value from Digital Twins Throughout the Operational Lifecycle

It wasn’t long ago that the term “digital twin” could be seen as a possible emerging trend, with some disagreement over whether it was simply a buzzword or a tangibly valuable tool. Although there is still some disagreement over the exact definition, digital twins are now widely accepted as a value-add for construction projects. Most agree that the key difference between a digital twin and a BIM model is that a digital twin incorporates some form of real-time sensor data, a capability that isn’t always used to its full potential. Right now, digital twins are mostly used during the design and build stages of a project, but going forward we expect to see more owners and operators taking advantage of them throughout the operational portion of a building’s life cycle as well.

Those real-time Internet of Things (IoT) sensors unlock significant potential value for building owners and operators. With sensors tracking anything from key physical elements of a building to movement and sound, owners have more information readily available than ever before for making key decisions. In many cases, this comes down to cost savings on things like maintenance: sensors can notify operators that a key piece of infrastructure, say a pipe, needs attention well before a person would notice the problem, significantly reducing the cost of repair. Data on noise and people’s movements can also be combined with readily available artificial intelligence tools to run simulations and develop strategies for running schools, hospitals, malls, and other high-traffic spaces more efficiently. The tools to track key information about a building in real time already exist, and 2023 should be the year they are properly leveraged.

7. Expanding 3D Capture’s Accessibility Through Mobile Tools

The value of reality capture for many industries is certainly no secret, but for a long time it has been easier said than done. Whether because of price, size, or complexity, many traditional reality capture tools were out of reach for a large portion of potential users. This past year saw further strides in expanding that accessibility, and 2023 should see that expansion happen even more rapidly. Specifically, 3D capture and modeling on mobile devices should become more widespread and more popular.

Apple got the ball rolling on bringing this technology to the phones we use every day when it introduced lidar sensors on its Pro-model iPhones in 2020, but practical uses beyond novelty lagged behind. The accuracy of these tools hasn’t quite been up to snuff for most use cases, but that is quickly beginning to change, a development that should accelerate in the coming year. Companies like Matterport are taking advantage of these capabilities, and we’re starting to see more iPhone apps that use the technology in genuinely useful ways. That a well-established laser scanning company like FARO is moving into this space with its recent acquisition of SiteScape further signals that this is a growth area, and one we’ll be watching closely.

8. Automating Reality Capture Workflows

Automation finds its way into almost any forward-looking technology article, and the term can be stretched in many ways. With reality capture, though, tools are emerging that can perform capture tasks without human intervention, a trend that should accelerate in the coming year. Whether it’s drones flying automated missions or mobile ground robots such as Boston Dynamics’ Spot walking sites for reality capture, the hardware is arriving to turn what once seemed like wishful thinking into reality.

The impacts of completing these reality capture projects automatically are significant, too. For one thing, industries that rely on reality capture, such as construction, are dealing with well-publicized hiring shortages that stretch them thin. Handing off reality capture to an automated piece of hardware, whether a drone or a ground robot, frees available workers to complete tasks that cannot be automated. Automation also opens the door to reality capture projects that would be unsafe for human crews. Full automation is still in its nascent stage, but with recent developments from companies like Skydio, Exyn, Trimble, and Leica, we appear to be reaching an inflection point, and 2023 could be the year the industry starts getting to the other side.

9. NeRFs and Beyond

While techniques for creating 3D models have continued to evolve, a newer method came to prominence in 2022, and we are only beginning to see its impact. NeRF, short for neural radiance fields, is a technique for creating 3D scenes using machine learning. First introduced in an academic paper in 2020, NeRFs were popularized in 2022 when NVIDIA announced ‘Instant NeRF’, a software tool that lets users generate high-quality 3D renderings of scenes with the NeRF method, using far fewer images than photogrammetry would typically require.

While both methods can be used to generate 3D models or renderings of real-world scenes, they differ in the input data they require and the way they generate the final output. Photogrammetry typically requires a larger number of overlapping photographs and relies on matching features across images to extract 3D geometry, while NeRF can generate 3D renderings from fewer input images and uses machine learning to learn a continuous representation of the scene. These techniques have not yet reached their full potential and remain experimental, but NeRF may become a tool for quick visualization from relatively scant source material, opening up new potential applications.
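For readers curious what a “continuous representation of the scene” means in practice, the volume-rendering formulation from the original 2020 NeRF paper can be summarized roughly as follows. A neural network maps a 3D point and a viewing direction to a density $\sigma$ and a color $\mathbf{c}$, and each pixel’s color is estimated by accumulating those values along the camera ray $\mathbf{r}(t) = \mathbf{o} + t\mathbf{d}$ between near and far bounds $t_n$ and $t_f$. In practice the integral is approximated by sampling $N$ points along the ray, with spacing $\delta_i = t_{i+1} - t_i$:

```latex
C(\mathbf{r}) = \int_{t_n}^{t_f} T(t)\,\sigma(\mathbf{r}(t))\,\mathbf{c}(\mathbf{r}(t), \mathbf{d})\,dt,
\qquad
T(t) = \exp\!\left(-\int_{t_n}^{t} \sigma(\mathbf{r}(s))\,ds\right)

\hat{C}(\mathbf{r}) = \sum_{i=1}^{N} T_i \left(1 - e^{-\sigma_i \delta_i}\right) \mathbf{c}_i,
\qquad
T_i = \exp\!\left(-\sum_{j=1}^{i-1} \sigma_j \delta_j\right)
```

Here $T_i$ is the fraction of light that reaches sample $i$ without being absorbed earlier along the ray. Training adjusts the network so that rendered colors $\hat{C}(\mathbf{r})$ match the observed pixel colors in the input photos, which is why NeRF learns a scene representation rather than triangulating matched features as photogrammetry does.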