Image: Taubin Lab/Brown University

Gabriel Taubin, head of the research team, says that the technology may be a significant step toward “making precise and accurate 3D scanning cheaper and more accessible.”

Brown researchers have developed an algorithm that enables smartphones and off-the-shelf digital cameras to act as structured-light 3D scanners.

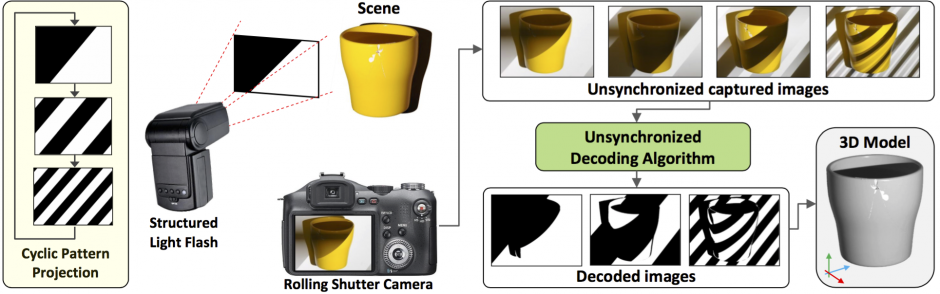

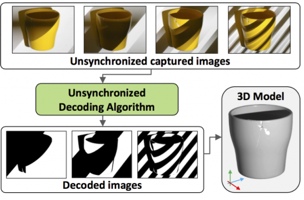

The structured-light method is used by many of the less expensive scanners on the market, such as the original Kinect. It works by projecting light patterns onto an object, measuring how the object’s surface distorts those patterns, and using those measurements to compute the object’s 3D shape. The method is already relatively cheap, but one component still drives up the cost of the whole setup: hardware that synchronizes the projector with the camera.
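To make the projection-and-measurement idea concrete, here is a minimal Python sketch of one common way structured-light scanners encode position: a sequence of binary Gray-code stripe patterns, decoded at each camera pixel, followed by triangulation. The article does not say which patterns Taubin’s system uses. The Gray-code scheme, the function names, and the rectified camera/projector geometry (same focal length in pixels, horizontal baseline) are illustrative assumptions.

```python
# Sketch only: Gray-code stripes + rectified triangulation (assumed setup,
# not taken from the Brown system).

def gray_encode(n: int) -> int:
    """Binary-reflected Gray code of n."""
    return n ^ (n >> 1)


def gray_decode(g: int) -> int:
    """Invert gray_encode by XOR-ing all right shifts of g."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def make_patterns(width: int, num_bits: int) -> list[list[int]]:
    """One stripe pattern per bit, most significant bit first (1 = lit column)."""
    return [
        [(gray_encode(x) >> bit) & 1 for x in range(width)]
        for bit in reversed(range(num_bits))
    ]


def decode_column(observed: list[int]) -> int:
    """Projector column seen by one camera pixel, from its lit/unlit sequence."""
    g = 0
    for bit in observed:  # same MSB-first order as make_patterns
        g = (g << 1) | bit
    return gray_decode(g)


def depth_from_disparity(cam_x: float, proj_x: float,
                         focal_px: float, baseline: float) -> float:
    """Rectified pinhole model (assumed): Z = f * B / (x_cam - x_proj)."""
    disparity = cam_x - proj_x
    if disparity == 0:
        raise ValueError("zero disparity: point at infinity")
    return focal_px * baseline / disparity


patterns = make_patterns(width=1024, num_bits=10)
# A camera pixel lit by projector column 700 sees this bit sequence:
seen = [p[700] for p in patterns]
column = decode_column(seen)
print(column)  # 700
# Hypothetical numbers: pixel at x=750, f=1000 px, baseline 0.1 m
print(depth_from_disparity(750.0, column, 1000.0, 0.1))  # 2.0 (metres)
```

Gray codes are a popular choice because neighboring columns differ in exactly one pattern, so a pixel sitting on a stripe edge decodes to one of the two adjacent columns rather than jumping far away.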

Taubin’s new algorithm makes structured-light scanning possible without synchronizing the projector and the camera. As a result, an off-the-shelf camera or smartphone can perform the scan with nothing more complicated than a special flash.

Why That’s a Big Deal

“The problem in trying to capture 3-D images without synchronization,” Brown’s official statement explains, “is that the projector could switch from one pattern to the next while the image is in the process of being exposed. As a result, the captured images are mixtures of two or more patterns. A second problem is that most modern digital cameras use a rolling shutter mechanism. Rather than capturing the whole image in one snapshot, cameras scan the field either vertically or horizontally, sending the image to the camera’s memory one pixel row at a time. As a result, parts of the image are captured at slightly different times, which also can lead to mixed patterns.”

When the patterns captured by the camera overlap or are incomplete, the result is a warped 3D scan, which defeats the purpose of scanning in the first place.
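The mixing problem can be illustrated with a small timing model. This Python sketch assumes, hypothetically, that the projector switches patterns at a fixed period starting at time zero and that each sensor row of a rolling-shutter camera starts exposing a fixed delay after the previous one; all times are integer microseconds, and the function names are made up for illustration. Any row whose exposure window straddles a pattern switch records a blend of two patterns.

```python
def patterns_seen_by_row(frame_start: int, row: int, row_delay: int,
                         exposure: int, pattern_period: int) -> list[int]:
    """Indices of projector patterns overlapping one row's exposure window.

    Times are integer microseconds; the exposure window is [t0, t1).
    """
    t0 = frame_start + row * row_delay  # rolling shutter: later rows start later
    t1 = t0 + exposure
    first = t0 // pattern_period
    last = (t1 - 1) // pattern_period
    return list(range(first, last + 1))


def mixed_rows(frame_start: int, num_rows: int, row_delay: int,
               exposure: int, pattern_period: int) -> list[int]:
    """Rows whose exposure straddles a pattern switch (and so blend patterns)."""
    return [
        r for r in range(num_rows)
        if len(patterns_seen_by_row(frame_start, r, row_delay,
                                    exposure, pattern_period)) > 1
    ]


# Hypothetical numbers: 12 rows, 100 us between row starts,
# 300 us exposure, projector switches pattern every 1000 us.
print(mixed_rows(0, 12, 100, 300, 1000))            # [8, 9]
print(patterns_seen_by_row(0, 8, 100, 300, 1000))   # [0, 1]
```

Changing `frame_start` moves the band of mixed rows, and without synchronization the camera has no control over that offset.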

How the Algorithm Works

- The camera captures a burst of images (your iPhone can do this).

- The algorithm reads binary information embedded in the light pattern to determine the timing of each photo in the burst.

- The algorithm “goes through the images, pixel by pixel, to assemble a new sequence of images that captures each pattern in its entirety.”

- The algorithm then runs a standard structured-light reconstruction on the reassembled sequence, producing a 3D image.
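The timing-decode and reassembly steps can be sketched in a few lines of Python. This is a toy model, not the Brown team’s implementation: it assumes a hypothetical layout in which the first few pixels of every projected row spell out the pattern’s index in binary, so a row that saw only one pattern shows crisp 0/1 marker values while a mixed row shows intermediate ones. The names `read_row_label` and `reassemble`, the marker layout, and the tolerance are all illustrative. It also works row by row rather than pixel by pixel, since in this model every pixel in a rolling-shutter row shares the same exposure timing.

```python
from typing import Optional

Row = list[float]
Frame = list[Row]


def read_row_label(row: Row, marker_bits: int, tol: float = 0.1) -> Optional[int]:
    """Decode a pattern index from a row's marker pixels (hypothetical layout).

    Returns None when a marker pixel is far from both 0 and 1, which in
    this model means the row blended two patterns.
    """
    index = 0
    for value in row[:marker_bits]:
        if value <= tol:
            bit = 0
        elif value >= 1 - tol:
            bit = 1
        else:
            return None  # intermediate intensity: mixed exposure
        index = (index << 1) | bit  # MSB first
    return index


def reassemble(frames: list[Frame], num_patterns: int,
               marker_bits: int) -> list[list[Optional[Row]]]:
    """Build one clean image per pattern from an unsynchronized burst.

    For every row, take the first frame in which that row saw exactly one
    pattern, and file it under that pattern's index.
    """
    height = len(frames[0])
    clean: list[list[Optional[Row]]] = [[None] * height for _ in range(num_patterns)]
    for frame in frames:
        for r, row in enumerate(frame):
            k = read_row_label(row, marker_bits)
            if k is not None and k < num_patterns and clean[k][r] is None:
                clean[k][r] = row[marker_bits:]  # keep image content, drop marker
    return clean


# Tiny synthetic burst: 1 marker bit, 2 content pixels, 3 rows per frame.
P0 = [0.0, 0.0, 1.0]   # marker 0 = pattern 0
P1 = [1.0, 1.0, 0.0]   # marker 1 = pattern 1
MIX = [0.5, 0.5, 0.5]  # row exposed across a pattern switch
burst = [[P0, P0, MIX], [MIX, P1, P1], [P1, MIX, P0]]
clean = reassemble(burst, num_patterns=2, marker_bits=1)
print(clean[0])  # [[0.0, 1.0], [0.0, 1.0], [0.0, 1.0]]
print(clean[1])  # [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0]]
```

A real system would also have to cope with sensor noise, blends that look nearly crisp, and rows that never receive a clean exposure (left as `None` here); the reassembled images would then feed a decoder like the Gray-code one sketched earlier in this sketch's spirit.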

What’s Next?

The researchers found that this method was at least as accurate and precise as synchronized systems, but significantly cheaper. They envision creating a structured-light projector that could be attached to any camera.

In an age when it seems like every new advance involves some kind of crazy hardware that will be devilishly difficult to manufacture, it’s nice to see a solution built on hardware we already have. That’s the real path to making 3D scanning available to everyone.