RealityCapture is one of the most popular platforms for creating 3D models from scans and images in photogrammetric workflows. Originally developed by Capturing Reality, the platform was acquired by Epic Games in 2021 and has since been integrated into the latter’s Unreal Engine. It’s used by professionals across a number of industries, including historical preservationists, surveyors, VFX artists, and more, and is applied to everything from large sites to individual assets. Just last week, Epic teased the latest release of the platform, which includes new features as well as a rebrand.

In a video released on June 3, Unreal Engine unveiled new capabilities for the software and announced that it will be moved under the RealityScan umbrella. While they don’t give a reason for the rebranding, one would imagine part of the logic is that the term “reality capture” is becoming increasingly common throughout the industry, so a product with the same name is ever more likely to cause confusion. As for the new “RealityScan 2.0,” there is no precise release date yet, with the teaser video simply saying it will be available “soon.”

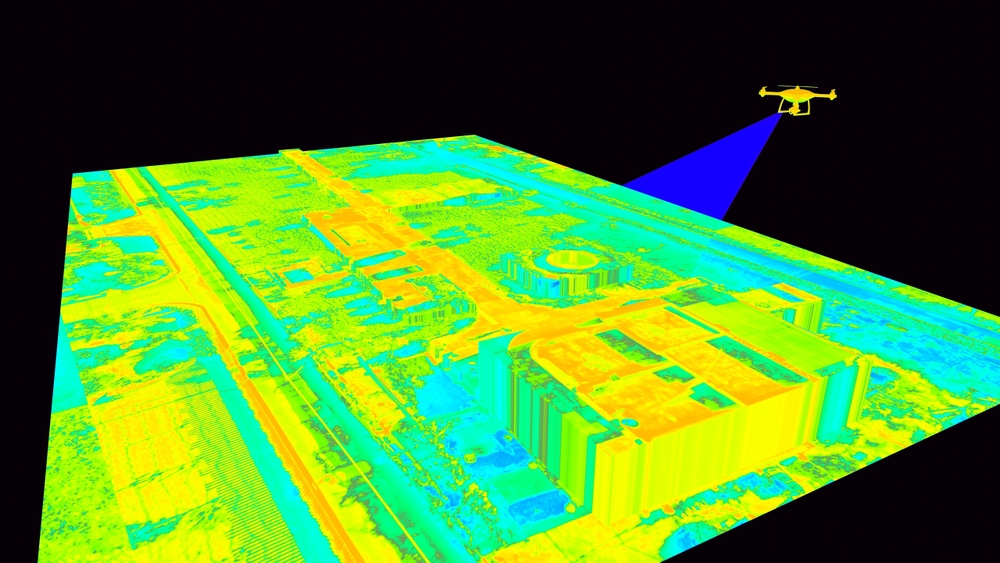

In terms of the new features, the most notable for our purposes is certainly the new support for aerial lidar. Using imagery from Pete Kelsey’s project scanning Alcatraz, which he spoke about at Geo Week 2025, the video notes that the latest version of the software will allow for the creation of “precise orthographic projections, maps, and 3D models,” thanks to new support for combining imagery and terrestrial scan data with aerial scan data. This opens the platform to larger-scale projects for those who want to combine colorized data from imagery and scan data. The video adds that this is particularly useful for those working toward mapping and simulation projects, among others.

Along with support for more lidar sources, the update will also introduce AI masking, a major upgrade for those creating 3D models of smaller assets. Thanks to a new trained segmentation model, users can remove backgrounds from a scan to create a standalone model of a single asset, eliminating a tremendous amount of tedious manual work. The release video uses the example of an apple sitting on a table. Normally, a user would have to remove the background scenery from the model by hand to get a standalone apple, but RealityScan 2.0 has built-in capabilities to isolate the desired asset.

Similarly, updated algorithms have improved the default settings for image alignment, particularly on surfaces with minimal features. Generally, this process takes many manual iterations to ensure smooth features for an asset, but under-the-hood changes in the latest update reduce that effort. Furthermore, the release adds new quality analysis capabilities to help ensure that scans are complete enough to create a model. With new visual heatmaps within the app, a user can see where more data may be needed before creating the final mesh.

For geospatial professionals, these upgrades mark a real expansion of what can be done within everyday workflows. The addition of aerial lidar support in particular signals a shift toward more holistic data integration within a single platform, making it easier to move from raw capture to deliverables like maps, orthos, and textured 3D models. Combined with automated tools like AI masking and improved image alignment, the new release reduces friction in both field and office work, theoretically freeing up time for higher-value tasks or additional projects. In a field where precision matters and project timelines are tight, these kinds of updates are another sign that the industry is looking for ways to combine data and automate tedious tasks.