The autonomous vehicle space has taken large steps in recent years, but the technology has not progressed as far as some had hoped by this point, and mass commercial adoption is still years away. Many barriers remain, and while development within the vehicles themselves needs to, and will, continue, some of this progress can be expedited with support from elsewhere. Companies are already starting to provide that support. Seoul Robotics is a prominent example, using its 3D technology within infrastructure to add a layer of assistance in certain areas.

Now, researchers in Japan are working to automatically identify road features from point cloud data, a capability they say could “become indispensable in autonomous driving and urban management.” The research, led by Professor Ryuichi Imai of Hosei University, draws on the large amounts of point cloud data that have been collected across Japan for public works using mobile mapping systems and terrestrial laser scanners. The researchers note that this data has limited use in its unprocessed state, so they have developed a deep-learning model to identify road features automatically. The work was presented on November 29 at the Joint 12th International Conference on Soft Computing and Intelligent Systems and 23rd International Symposium on Advanced Intelligent Systems.

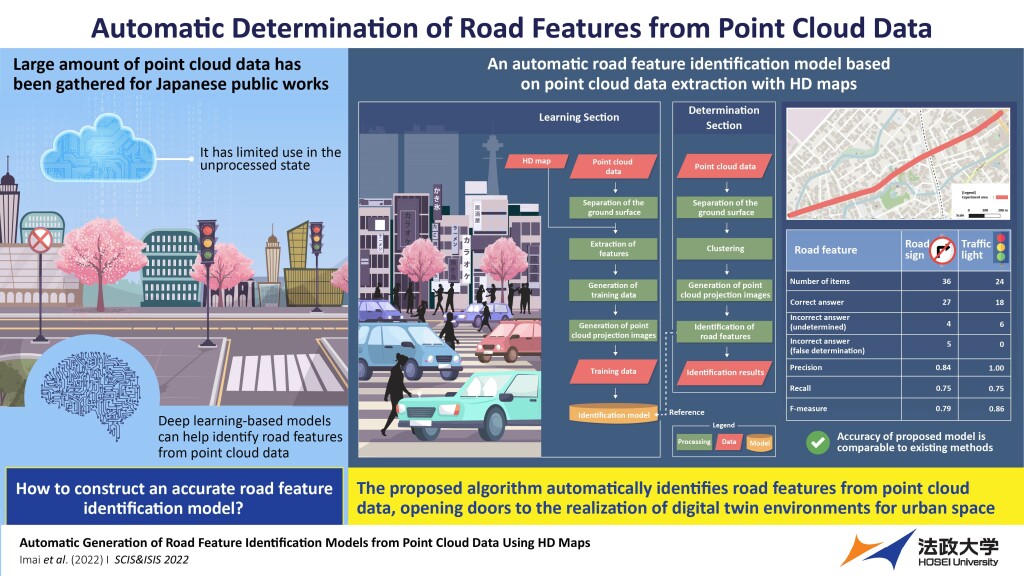

The algorithm developed by Professor Imai and his team works in two steps: a learning step that “teaches” the model to recognize features such as road signs and traffic lights, followed by an identification step in which the model makes these determinations automatically. Previous methods for extracting these features required HD map data, which limited them to road sections where maps had already been developed; the deep-learning method is proposed to overcome that barrier. In the learning step, the algorithm first separates the ground surface from the point cloud data, then uses area data generated from the HD map to extract the points that make up each feature. Those points are labeled as road signs, traffic lights, or others, and the labeled points are used to generate the training data from which the identification model is constructed.
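The learning and identification steps described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the team's implementation: ground separation is approximated by a fixed height cutoff, the HD map areas are modeled as hypothetical axis-aligned boxes (`FeatureArea`), and a nearest-centroid classifier on point height stands in for the deep-learning identification model, whose architecture the article does not describe. All function names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres


@dataclass
class FeatureArea:
    """Hypothetical 2D footprint of a feature, as it might be derived from an HD map."""
    label: str  # e.g. "road_sign" or "traffic_light"
    x_min: float
    x_max: float
    y_min: float
    y_max: float

    def contains(self, p: Point) -> bool:
        return self.x_min <= p[0] <= self.x_max and self.y_min <= p[1] <= self.y_max


def remove_ground(points: List[Point], ground_z: float = 0.3) -> List[Point]:
    """Crude ground separation (assumption): keep only points above a fixed height."""
    return [p for p in points if p[2] > ground_z]


def label_points(points: List[Point], areas: List[FeatureArea]) -> List[Tuple[Point, str]]:
    """Label each point by the first HD-map area containing it, else 'other'."""
    labeled = []
    for p in points:
        label = next((a.label for a in areas if a.contains(p)), "other")
        labeled.append((p, label))
    return labeled


def train_centroids(labeled: List[Tuple[Point, str]]) -> Dict[str, float]:
    """Stand-in 'model' (not deep learning): mean height of the points in each class."""
    sums: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for (_, _, z), label in labeled:
        sums[label] += z
        counts[label] += 1
    return {label: sums[label] / counts[label] for label in sums}


def identify(points: List[Point], centroids: Dict[str, float]) -> List[str]:
    """Identification step: assign each point the class with the nearest mean height."""
    return [min(centroids, key=lambda lbl: abs(p[2] - centroids[lbl])) for p in points]


if __name__ == "__main__":
    # Learning step on a tiny synthetic cloud (values are illustrative only).
    cloud = [
        (0.0, 0.0, 0.0), (5.0, 1.0, 0.1),      # ground
        (10.0, 2.0, 2.2), (11.0, 3.0, 2.4),    # road sign
        (20.0, 5.0, 5.0), (20.2, 5.1, 5.2),    # traffic light
        (15.0, 0.0, 1.0), (16.0, 0.5, 1.2),    # other object
    ]
    areas = [
        FeatureArea("road_sign", 9.5, 11.5, 1.5, 3.5),
        FeatureArea("traffic_light", 19.5, 20.5, 4.5, 5.5),
    ]
    model = train_centroids(label_points(remove_ground(cloud), areas))

    # Identification step: this sketch needs no HD map areas for new points.
    new_points = [(30.0, 1.0, 2.25), (40.0, 2.0, 4.9), (50.0, 0.0, 0.9)]
    print(identify(remove_ground(new_points), model))
    # -> ['road_sign', 'traffic_light', 'other']
```

In a real pipeline, features would more likely be computed per object cluster than per point, and height alone would not reliably separate signs from traffic lights; the sketch only shows how HD map areas can turn unlabeled points into training data once, so that later identification runs without them.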

To test the framework, the research team used a 1.5 kilometer (0.9 mile) stretch of road in Shizuoka Prefecture containing 65 road signs, 46 traffic lights, and noise features. The results were strong: the team found “that the precision, recall, and F-measure were 0.84, 0.75, and 0.79, respectively, for the road signs and 1.00, 0.75, and 0.86, respectively for the traffic lights, indicating zero false determinations,” giving the proposed model higher precision than existing models. The implications could be wide-ranging, since more accurate road data will be needed for autonomous driving, for more powerful urban planning methods such as digital twins, and for making road inspections more efficient.
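The reported figures are consistent with the F-measure being the balanced F1 score, the harmonic mean of precision and recall. The short Python check below assumes that definition and recomputes the F-measure from each reported precision and recall pair; it also shows why a precision of 1.00 corresponds to zero false positives. The function names are illustrative, and the true/false positive counts in the last line are hypothetical.

```python
from typing import Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def f_measure(precision: float, recall: float) -> float:
    """Balanced F-measure (F1): harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Reported precision and recall values from the Shizuoka test.
    for name, p, r in [("road signs", 0.84, 0.75), ("traffic lights", 1.00, 0.75)]:
        print(f"{name}: F = {f_measure(p, r):.2f}")
    # -> road signs: F = 0.79
    # -> traffic lights: F = 0.86

    # Any detector with no false positives (fp = 0) has precision 1.0,
    # however many features it misses; these counts are hypothetical.
    print(precision_recall(tp=3, fp=0, fn=1))  # -> (1.0, 0.75)
```

Because F1 is a harmonic mean, it sits closer to the lower of the two scores, which is why the traffic lights' perfect precision lifts the F-measure only to 0.86 while recall stays at 0.75.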

On the proposed method, Professor Imai said, “Currently, people need to visually check the point cloud data to identify road features as computers cannot recognize them. But with our proposed method, the feature extraction can be done automatically, including the features at undeveloped road map sections.” He added, “A product model constructed from point cloud data will enable the realization of a digital twin environment for urban space with regularly updated road maps. It will be indispensable for managing and reducing traffic restrictions and road closures during road inspections. The technology is expected to reduce time costs for people using roads, cities, and other infrastructures in their daily lives.”