Google’s Maps platform is changing quickly. In late October of this year, Google announced several new features aimed at different user groups. Because people use Google Maps for many different purposes, Google tries to serve their needs by adding new features or extending existing ones. The new features also offer insight into how the company uses AI and AR technology to improve the experience for Google Maps users.

Better road navigation

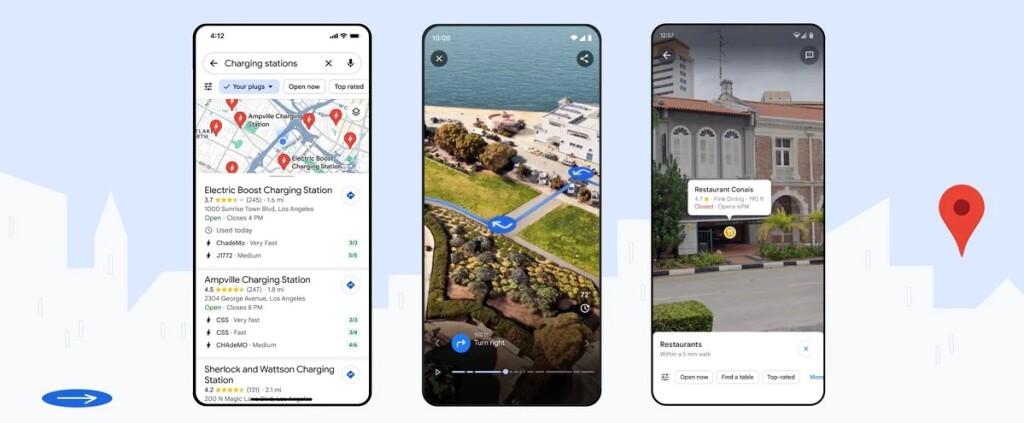

Many drivers use Google Maps as a navigation tool. New location-based services inside Google Maps help drivers travel more safely and with less stress. One example helps EV drivers find, ahead of time, a charging location that meets their needs. This new, global feature is available to EV drivers on Android and iOS and tells them whether a charger is compatible with their vehicle, how fast it charges, and when it was last used. As this service depends on data availability, EV charging information will be made available to developers on Google Maps Platform in the Places API so more companies can start to share this data online.
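The three criteria described above (connector compatibility, charging speed, and time of last use) can be sketched as a simple filter. This is a minimal illustration only: the `Charger` record, its field names, and the `find_suitable_chargers` helper are hypothetical and do not reflect the actual Places API response format, which should be checked in Google's documentation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Charger:
    # Hypothetical record shape, NOT the Places API schema.
    name: str
    connector_types: set
    max_charge_rate_kw: float
    last_used: datetime


def find_suitable_chargers(chargers, vehicle_connector, min_kw, used_within, now):
    """Return chargers that fit the vehicle, are fast enough, and were used recently.

    Results are sorted from the fastest to the slowest charger.
    """
    cutoff = now - used_within
    matches = [
        c for c in chargers
        if vehicle_connector in c.connector_types   # compatible plug
        and c.max_charge_rate_kw >= min_kw          # fast enough
        and c.last_used >= cutoff                   # recently used, so likely working
    ]
    return sorted(matches, key=lambda c: c.max_charge_rate_kw, reverse=True)


# Illustrative sample data (made up for this sketch).
NOW = datetime(2023, 11, 1, 12, 0)
SAMPLE = [
    Charger("Station A", {"CCS2", "TYPE2"}, 150.0, NOW - timedelta(hours=2)),
    Charger("Station B", {"CHADEMO"}, 50.0, NOW - timedelta(hours=1)),
    Charger("Station C", {"CCS2"}, 300.0, NOW - timedelta(days=30)),
    Charger("Station D", {"CCS2"}, 75.0, NOW - timedelta(hours=5)),
]

if __name__ == "__main__":
    for charger in find_suitable_chargers(SAMPLE, "CCS2", 50.0, timedelta(days=1), NOW):
        print(charger.name, charger.max_charge_rate_kw)
```

Using the time of last use as a rough signal that a charger is working is an assumption of this sketch, not something Google has stated about its own ranking.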

The Google Maps navigation map now includes more realistic buildings that help drivers orient themselves, while improved lane details help them prepare for lane changes before exiting a highway. In the US, Google Maps will display HOV (high-occupancy vehicle) lanes, while in Europe Google will offer AI-powered speed limit information in 20 countries. This means drivers can see the applicable speed limit in Google Maps without having to rely on physical speed limit signs, which may be hard to spot for a variety of reasons. How Google uses AI and imagery to keep its speed limit data updated is explained here.

Immersive 3D fly-throughs

Announced earlier this year, Immersive View for routes is a Google Maps feature offering 3D “fly-throughs” of a pre-defined route. It shows the user the entire route, including all turns, in a realistic 3D model created by using AI to fuse Google Street View and aerial imagery. These are combined with simulated traffic and weather data (including air quality data), so users know what to expect before taking a trip. This feature will be available for a selected list of cities including Amsterdam, Berlin, Dublin, Florence, Las Vegas, London, Los Angeles, New York, Miami, Paris, Seattle, San Francisco, San Jose, Tokyo, and Venice, and can be used for planning driving, walking, or cycling trips.

Categorized location-based searching

Google has also redesigned how it presents location information to Google Maps users in response to text-based location searches. A general location search such as “Things to do in Paris” will now generate multiple categories of indexed search results, instead of unfiltered and uncategorized results. Because search results are presented in a more organized way, both on the map and in Google’s text-based user interface, a user can refine an initial, general search with more specific search terms. This results in a better user experience with fewer but better search results and a less cluttered map.

Google Maps has also found a new way to combine imagery and location-based searches. Using location-tagged images and their descriptions, you can find out where an item (say, a dish or beverage of your choice) is sold and what it looks like: a simple, text-based search term will now generate a map showing images that match the search term and the locations where the item can be found. This option enables you to locate a restaurant or shop directly on a map using a single search term that relates to a product name or menu item, instead of searching for a restaurant or shop by its name. The idea is that a single search is enough to find a place that offers the item you are looking for.

According to this source, Google uses AI and advanced image recognition models to classify images shared by Google users. These images are displayed on the map to those using the new feature, which is currently available in six countries. The existing feature Search with Live View, which uses AR and AI to display location-based information on top of a mobile phone camera view and helps users understand their immediate surroundings better, has been integrated with Google Maps. It has also been renamed Lens in Maps and is available in 50 cities worldwide.

General availability of Photorealistic 3D Tiles, 2D Tiles, and Street View Tiles

After announcing the preview availability of Photorealistic 3D Tiles through Google’s Map Tiles API, Google has now moved Photorealistic 3D Tiles, as well as 2D Tiles and Street View Tiles into general availability. This launch stage means that products and features are "production ready," though not always universally available. 2D Map Tiles and Street View Tiles are meant for developers using non-JavaScript environments and offer the same data and user experience as Google’s Maps JavaScript APIs and Street View Service API. Photorealistic 3D Tiles have been used in different ways by developers to create visual map experiences. This blog post includes an overview of different use cases (including real estate and urban planning, AR, and architectural design) and how developers can get started building their own map experiences using Photorealistic 3D Tiles.
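For developers working outside JavaScript, a common first step with 2D map tiles is working out which tile covers a given coordinate. The sketch below uses the standard Web Mercator XYZ tiling scheme to do that, then builds a request URL. The URL pattern and the `session` and `key` parameters reflect our understanding of the Map Tiles API and are assumptions to verify against Google's documentation; the placeholder values are not real credentials, and the function names are our own.

```python
import math

# Web Mercator cannot represent the poles; this is the usual latitude limit.
MAX_LATITUDE = 85.05112878


def lat_lng_to_tile(lat_deg, lng_deg, zoom):
    """Convert a WGS84 coordinate to (x, y) tile indices at the given zoom level."""
    n = 2 ** zoom  # number of tiles along each axis
    lat = max(min(lat_deg, MAX_LATITUDE), -MAX_LATITUDE)
    x = int((lng_deg + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(math.radians(lat))) / math.pi) / 2.0 * n)
    # Clamp so edge cases such as lng = 180 stay inside the tile grid.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def build_2d_tile_url(lat_deg, lng_deg, zoom, session_token, api_key):
    """Build a 2D tile URL (assumed pattern; check the Map Tiles API docs)."""
    x, y = lat_lng_to_tile(lat_deg, lng_deg, zoom)
    return (
        f"https://tile.googleapis.com/v1/2dtiles/{zoom}/{x}/{y}"
        f"?session={session_token}&key={api_key}"
    )


if __name__ == "__main__":
    # Placeholder token and key; no network request is made here.
    print(build_2d_tile_url(52.3676, 4.9041, 12, "YOUR_SESSION_TOKEN", "YOUR_API_KEY"))
```

The sketch only constructs the URL. Obtaining a session token and fetching the tile are separate steps that are not shown here.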
