So, the first thing I learned today is that, inside the Department of Defense, STTR stands for “Small Business Technology Transfer.” And people wonder why the federal government is considered rather opaque.

I discovered this after stumbling across a solicitation through the Small Business Innovation Research portion of the DoD’s Resource Center, calling for “Real Time 3-D Modeling and Immersive Visualization for Enhanced Soldier Situation Awareness.” That’s the kind of title that makes my Google alerts blow up.

But what is it exactly they’re looking for? Let’s see if I can unpack this:

Develop real time 3-D mapping, localization and immersive visualization algorithms and processing technologies to enable seamless and automated real time processing of video and 3-D point cloud data collected from moving air and/or ground platforms and construction of high fidelity, textured, geo-referenced 3-D models of the environment. Demonstrate capability to fully integrate video and point cloud/depth data from multiple sources to provide a globally aligned, consistent and geo-referenced 3-D textured map of the environment with automated detection and alert generation of moving objects and/or stationary objects of interest based on user defined features/characteristics.

(First of all, can we PLEASE stop writing 3D as 3-D? Please? Do you people still hyphenate email? If you’re going to hyphenate anything in that paragraph, it should be “real-time,” since it’s a compound modifier of the gerund “processing” …) Anyway, it would appear the Army would like it if they could fly over a place with lidar, have soldiers on the ground with streaming video, and have lidar on vehicles, all streaming data back to a central database and combining to create a georeferenced model that’s continuously updated.

Oh, and while you’re doing that, smash in some command-and-control functionality so that the Army will not only be notified of moving targets with alarms, but also be alerted to targets that aren’t even moving, based on automatic object recognition.

Oh, and by the way, you probably won’t have GPS available. And the solution has to be open architecture and non-proprietary.

No problem, right? Well, cool, get your submission in by Wednesday, March 28, because that’s when the solicitation closes.

Seriously, though, is this even remotely possible with current technology? It’s pretty cool to think about all the potential pieces of current solutions that would have to be bolted together to make it work. It’s also pretty cool to read the way guys from the Army write: “Soldiers within the Future Force and those in the current force engaged in combat operations must be able to quickly reduce opposition within urban and complex GPS-denied environments while minimizing friendly force casualties.” By capitalizing “Future Force,” does that indicate that there’s actually a division of the Army called the Future Force, and they’ve got all the coolest new technology? Like the Rangers, but everyone gets issued a jetpack or something? I suppose they can’t just say, “it’d be sweet if we knew exactly where all the bad guys were right away so we could kill them really easily without killing anybody we didn’t want to kill. Or, ya know, capture them, we guess.”

Sorry, I keep getting distracted. It’s a Friday and the sun is shining. Makes me a bit slaphappy. So, what do we need here? First, we need all the lidar folks to export in the same data format, in real time. Does the E57 file format get that done? Seems like it. Then, all the people working on videogrammetry have to make sure they’re creating point clouds in the same format after their processors work their magic. When Bentley talks about the point cloud as a fundamental data type, they’re not kidding. As long as all the information can be converted in real time to x/y/z points, with all other data attached to the individual points, you’ve got something to work with.
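To make the "everything becomes x/y/z points with other data hanging off them" idea a bit more concrete, here's a minimal Python sketch. It is not E57 or anyone's actual format. The names `Point`, `from_lidar`, `from_video`, and `merge` are all made up for illustration, and it assumes every source has already been converted into the same coordinate frame (which is, of course, a big assumption).

```python
from dataclasses import dataclass, field


@dataclass
class Point:
    """One sample in a shared coordinate frame; everything else is metadata."""
    x: float
    y: float
    z: float
    attrs: dict = field(default_factory=dict)  # e.g. intensity, rgb, thermal


def from_lidar(x, y, z, intensity):
    # Hypothetical converter: a lidar return carries an intensity value.
    return Point(x, y, z, {"source": "lidar", "intensity": intensity})


def from_video(x, y, z, rgb):
    # Hypothetical converter: a videogrammetry point carries a color.
    return Point(x, y, z, {"source": "videogrammetry", "rgb": rgb})


def merge(*streams):
    """Concatenate any number of point streams into one cloud (a plain list)."""
    cloud = []
    for stream in streams:
        cloud.extend(stream)
    return cloud


lidar_pts = [from_lidar(1.0, 2.0, 0.5, 0.8)]
video_pts = [from_video(1.1, 2.0, 0.5, (120, 110, 90))]
cloud = merge(lidar_pts, video_pts)
```

Once every sensor speaks this one shape, the downstream pieces (storage, visualization, analytics) only ever have to deal with a list of points, whatever produced them.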

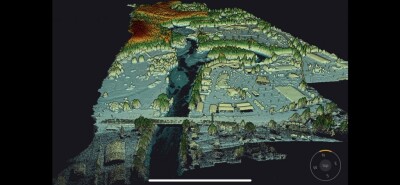

Are you flying and driving thermal, too? Great, associate all the thermal data with x/y/z points (yes, there are huge problems here with resolution and the overlay of the streaming thermal video onto the point cloud that’s being created by the lidar, but that’s where the smart people come in). This gets you pretty quickly to a real-time map of your surroundings, as long as you can visualize those points in real time with something like Pointools or the Alice Labs technology. The big thing is having an engine that can visualize all of the points on demand, using something like the octree format that lots of people have moved to. Faro talked about it most recently with their Scene 5.0 architecture, so it’s top of mind for me. You’ve got to store all of those points immediately, then call up just the ones a particular soldier wants to look at, plus have some way of decimating in real time to show the larger map of which the soldier’s view is a subset.

Easy-peasy.
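Okay, not that easy, but the two operations I just described (decimate for the big picture, pull a subset for one viewer) are simple to sketch. What follows is a single-level voxel grid in Python, which you can think of as roughly one layer of an octree rather than a real octree. The function names `voxel_key`, `decimate`, and `query_box` are my own inventions, not anything from Pointools, Alice Labs, or Faro.

```python
import math
from collections import defaultdict


def voxel_key(point, size):
    """Integer index of the cube of edge length `size` that contains `point`."""
    x, y, z = point
    return (math.floor(x / size), math.floor(y / size), math.floor(z / size))


def decimate(points, size):
    """Return one averaged point per occupied voxel (the coarse overview map)."""
    buckets = defaultdict(list)
    for p in points:
        buckets[voxel_key(p, size)].append(p)
    out = []
    for pts in buckets.values():
        n = len(pts)
        # Average each coordinate across the points that share this voxel.
        out.append(tuple(sum(c) / n for c in zip(*pts)))
    return out


def query_box(points, lo, hi):
    """Return the full-resolution points inside an axis-aligned box."""
    return [p for p in points
            if all(l <= c <= h for c, l, h in zip(p, lo, hi))]


points = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (5.0, 5.0, 5.0)]
overview = decimate(points, size=1.0)              # coarse map for everyone
detail = query_box(points, (0, 0, 0), (1, 1, 1))   # what one soldier looks at
```

A real engine would nest these grids at several resolutions and stream them from disk, which is where the octree comes in. The linear scan in `query_box` is the part that wouldn't survive contact with billions of points.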

But that just gets you the outside of buildings, plus some thermal pictures of people and hot things in buildings. What about indoor mapping so soldiers aren’t going in blind? Well, that’s where the nano quadrotors come in:

As long as you’ve got a massive supply of these bad boys with cameras and streaming ability, you just deploy them ahead of your force, blow out some windows, and they’ll be ripping through buildings and creating maps like nobody’s business. Sure, some of them will get smashed by enemy forces, but if you’ve got enough of them and they’re fast, they’ll map a lot of what you’re interested in in a hurry. (Also, if you’re interested, here are those quadrotors playing the James Bond theme. And why wouldn’t you be interested?)

Think it can’t be done? They’re basically doing it now:

So, we’re getting close to a real-time creation of a map here, but there are some other big problems. First, if we don’t have GPS, how can we make the system know where the soldier is compared to everything else? How can we mesh all the data if none of it is coming in geo-referenced in the first place? Do we have everyone huddle in a big circle at the beginning and strap IMUs to everyone and everything and hope for the best?

I think pretty big strides have been made with autoregistration, which would allow the software to use common features in common images to stitch stuff together. But once you’re combining lasers and video, with the video being taken by disparate soldiers in disparate places and all those disparate quadrotors flying around, you’d have a pretty massive problem on your hands without GPS. I’m pretty well flummoxed there.
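For what it's worth, the alignment math itself is not the scary part once you have matched features. Here's a minimal Python sketch of least-squares rigid registration, with some loud assumptions: it works in flat 2D instead of 3D, it assumes you already know which point in one data set matches which point in the other (finding those matches is the genuinely hard bit), and `fit_rigid_2d` and `apply_rigid_2d` are names I made up.

```python
import math


def fit_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping src onto dst.

    src and dst are equal-length lists of matched (x, y) pairs.
    """
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(p[0] for p in dst) / n
    dy = sum(p[1] for p in dst) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        # Work relative to each set's centroid so translation drops out.
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation carries the rotated source centroid onto the target centroid.
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, (tx, ty)


def apply_rigid_2d(points, theta, t):
    """Rotate points by theta (radians) about the origin, then shift by t."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + t[0], s * x + c * y + t[1]) for x, y in points]


# Two "sensors" that saw the same three features from different poses.
seen_by_a = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
seen_by_b = apply_rigid_2d(seen_by_a, 0.5, (3.0, -2.0))
theta, shift = fit_rigid_2d(seen_by_a, seen_by_b)
```

Note that this only tells you where sensor A is relative to sensor B. Without GPS or some surveyed landmark, nothing here pins the combined map to the actual globe, which is exactly the georeferencing problem I'm flummoxed by.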

Not to mention the incredible processing power you’d need to bring to bear on the whole thing. The data the server farm would have to crunch through would be massive, as would the amount of data streaming back in real time (but I think some kind of daisy-chained WiMAX could probably handle that, and I’m sure the Army has some sweet WiMAX technology).

Finally, you’ve got the feature recognition and the alarms. Here’s where you can bring in some security technology, like the physical security information management technology that companies like VidSys offer, combined with the video analytics technology offered by companies like ObjectVideo, combined with the auto-feature-extraction capabilities of companies like kubit, ClearEdge, and others (though I’m not sure the Army is all that interested in piping models) – there are academic papers all over the place on it. Here’s one from the Department of Geomatics at National Cheng Kung University. Or this one from ISPRS featuring academics from the U of New South Wales and Egypt’s Benha University.

I don’t think pulling out the features for the purpose of a model makes sense, as I think you should leave everything in raw point cloud format with intelligence attached, but for identifying objects of interest, it could work.

The problem with using the point cloud as the fundamental data type, of course, is that the video analytics work on, well, video and images. Even as we’re visualizing point clouds in real time, creating a map of point cloud data and avoiding modeling, we’d need to apply video analytics technology to that point cloud, basically treating the point cloud as an image. Seems doable.
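Here's roughly what "treating the point cloud as an image" could mean, as a minimal Python sketch. It assumes the points are already expressed in a virtual camera's coordinates (camera at the origin, looking down +z) and uses a plain pinhole projection. The function name `render_depth` and the `focal` parameter are hypothetical, and this isn't how VidSys, ObjectVideo, or anyone else actually does it.

```python
import math


def render_depth(points, width, height, focal):
    """Project (x, y, z) camera-frame points into a height x width depth image.

    Each pixel holds the distance to the nearest point that lands on it,
    or None if nothing does.
    """
    image = [[None] * width for _ in range(height)]
    cx, cy = width / 2, height / 2  # principal point at the image center
    for x, y, z in points:
        if z <= 0:
            continue  # behind the virtual camera
        u = math.floor(focal * x / z + cx)
        v = math.floor(focal * y / z + cy)
        if 0 <= u < width and 0 <= v < height:
            # Keep the closest surface, like a z-buffer.
            if image[v][u] is None or z < image[v][u]:
                image[v][u] = z
    return image


cloud = [(0.0, 0.0, 2.0), (0.0, 0.0, 5.0), (1.0, 0.0, 2.0), (0.0, 0.0, -1.0)]
depth = render_depth(cloud, width=4, height=4, focal=2.0)
```

The resulting grid is just a picture whose pixel values happen to be distances, so in principle anything that works on image frames could be pointed at it. Swap the depth for a color or thermal attribute and you get the other kinds of frames the analytics folks are used to.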

See a rocket launcher? Alarm! See a tank coming around a corner? Alarm! And then track those things as they move as part of the dynamic map.
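The "something moved" half of that is the easier one to sketch. Below is a bare-bones Python version that compares which voxels are occupied in two successive frames and raises an alarm for anything new. It assumes both frames are already registered into the same coordinate frame and ignores sensor noise entirely, and the names `occupied`, `changes`, and `alarms` are mine. Recognizing that the new blob is a tank rather than a dog is a whole other problem that this doesn't touch.

```python
import math


def occupied(points, size):
    """Set of voxel indices (cubes of edge `size`) containing at least one point."""
    return {tuple(math.floor(c / size) for c in p) for p in points}


def changes(prev_frame, curr_frame, size=1.0):
    """Voxels that became occupied or were vacated between two frames."""
    before = occupied(prev_frame, size)
    after = occupied(curr_frame, size)
    return {"appeared": after - before, "vacated": before - after}


def alarms(prev_frame, curr_frame, size=1.0):
    """One alarm string per newly occupied voxel, in sorted order."""
    diff = changes(prev_frame, curr_frame, size)
    return [f"ALARM: new occupancy at voxel {v}" for v in sorted(diff["appeared"])]


frame_1 = [(0.5, 0.5, 0.5), (3.5, 0.5, 0.5)]
frame_2 = [(0.5, 0.5, 0.5), (4.5, 0.5, 0.5)]  # second object shifted one voxel
for message in alarms(frame_1, frame_2):
    print(message)
```

Pairing each "appeared" voxel with a nearby "vacated" one is the crude beginning of tracking an object as it moves through the dynamic map.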

The whole exercise here is starting to make my head spin, but you can see where the parts are in place if someone can create the back-end software to bring them all together. Incredibly hard, but doable? Seems like someone might want to have a go at it. Let me know if you do. I’d like to see the proof of concept…