It is true that high-definition laser scanning has been with us for over two decades. However, today’s tools and solutions for scanning bear little resemblance to what many of us began using in those early days. What is now more rightly referred to as reality capture (RC) produces far more data, by orders of magnitude, and in far less time. Hardware, be it terrestrial laser scanners, mobile, or aerial systems, captures rich point clouds at rates of millions of points per second.

For our continuing examination of the present state (and art) of reality capture, we began with a deep dive into how “simplicity” has become a driving force in the design of these systems and solutions. Simplicity does not mean simple, or “dumbed down”, but instead has delivered smoother and more efficient workflows without compromising quality. Our series continues with this look at the essential first step of downstream processing: point cloud segmentation, or classification (PCC).

Context from Chaos

Field software, workflow tools, and downstream processing have undergone a sea change in development to be able to keep up with this deluge of data. Higher levels of automation were an imperative; relying on traditional tools would only produce bottlenecks. Advances in automation for reality capture include in-field point cloud registration and a reduced need for targets, and they have revolutionised the essential step of PCC.

“Above all, the technical achievements in lidar technology enabled the data acquisition of objects with higher resolution and shorter measurement times,” said Dr. Bernhard Metzler.

As an example, a single full dome scan of a Leica RTC360 with a measurement rate of 2 million points per second and a scanning time of one and a half minutes results in a point cloud of about 200 million points. Given this, even small projects with just a few scanning setups can quickly reach data volumes of several billion points. And that is before considering mobile mapping. Hence, the challenge is the further processing of these large amounts of data: billions of points that need to be cleaned up and classified to enable meaningful analysis and modelling.
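The arithmetic behind those figures is easy to check. The short sketch below simply multiplies the quoted measurement rate by the quoted scan time; the function names are illustrative, and a constant rate over the whole scan is an assumption. The product comes to 180 million points, in line with the "about 200 million" quoted above.

```python
# Figures taken from the example in the text.
POINTS_PER_SECOND = 2_000_000
SCAN_SECONDS = 90  # one and a half minutes


def points_per_scan(rate: int = POINTS_PER_SECOND, seconds: int = SCAN_SECONDS) -> int:
    """Points in one full dome scan, assuming a constant measurement rate."""
    return rate * seconds


def setups_to_reach(target_points: int, per_scan: int) -> int:
    """Smallest number of setups whose combined points meet the target."""
    return -(-target_points // per_scan)  # ceiling division on integers


per_scan = points_per_scan()
print(f"{per_scan:,} points per scan")
print(setups_to_reach(1_000_000_000, per_scan), "setups to pass one billion points")
```

On these assumptions, a project passes the billion-point mark after only a handful of setups.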

Metzler’s team is based at the famed R&D and manufacturing campus of Leica Geosystems in Heerbrugg, Switzerland. It is a kind of central research organisation serving the whole Hexagon Group, not only for systems but also artificial intelligence (AI). “We offer, essentially, research as a service,” said Metzler. “If there is an idea about a new product, then we perform technical feasibility studies to show that it’s feasible. And if you can show that, we hand it over to the divisions and business units for productisation.”

“For the automatic evaluation of point clouds, i.e., the extraction of the relevant information out of the large amount of data, deep-learning AI is our technology of choice,” said Metzler.

In reality capture R&D environments, PCC is referred to as “semantic segmentation” of a point cloud, where each single point is assigned to a defined object class. There is geometry, where a point in space is defined by three dimensions, and then there is the temporal dimension. Semantics is what type of point it is, whether it be vegetation, a wall, etc.; it is often considered the fifth dimension.

I was surprised to hear that Metzler and his team consider up to 64 characteristics for each individual point to identify which “classes” they fall into. Typically, these are classes that are defined for a concrete use case and can be terrain, building, vegetation, etc. for outdoor scenes, and floor, ceiling, walls, doors, etc. for indoor scenes.

Traditional Machine Learning Classification

Hexagon’s point cloud classification is based on deep learning, where the point cloud is input to a neural network. However, simpler and less efficient machine learning techniques were used in the past. To contrast the new and old methods, Metzler offered the following explanation:

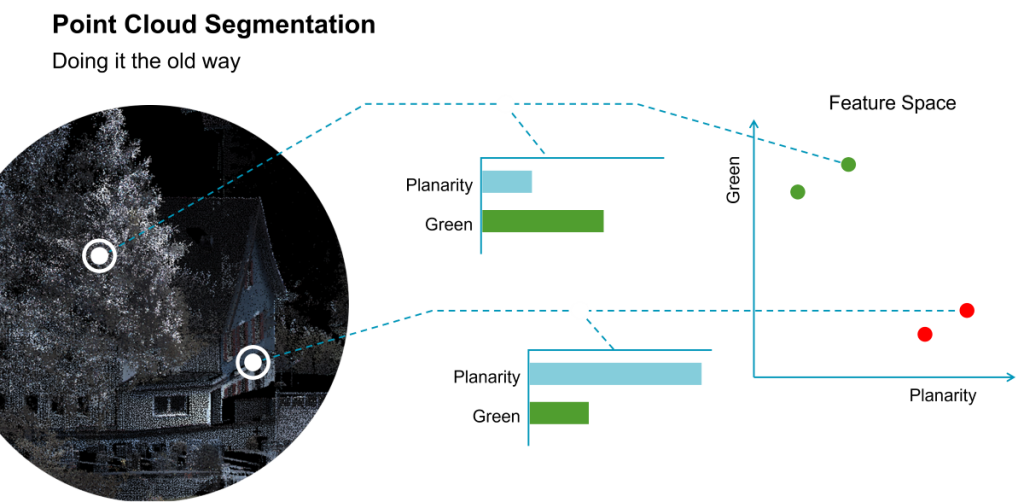

“Let me first explain traditional methods of machine learning,” said Metzler. “Traditional machine learning is based on hand-crafted features, e.g., red, green, blue, planarity, linearity, etc. In total, maybe 64 different features. For each point these features are computed, which results in a feature vector with 64 values. For example, if we made a graph of 2D feature space, with green from bottom to top and planarity from left to right, we would expect that a point belonging to vegetation with little planarity would be in the upper left quadrant. Repeating this for many points we would expect that all or most vegetation points would cluster in that region of the graph (see Figure 1).

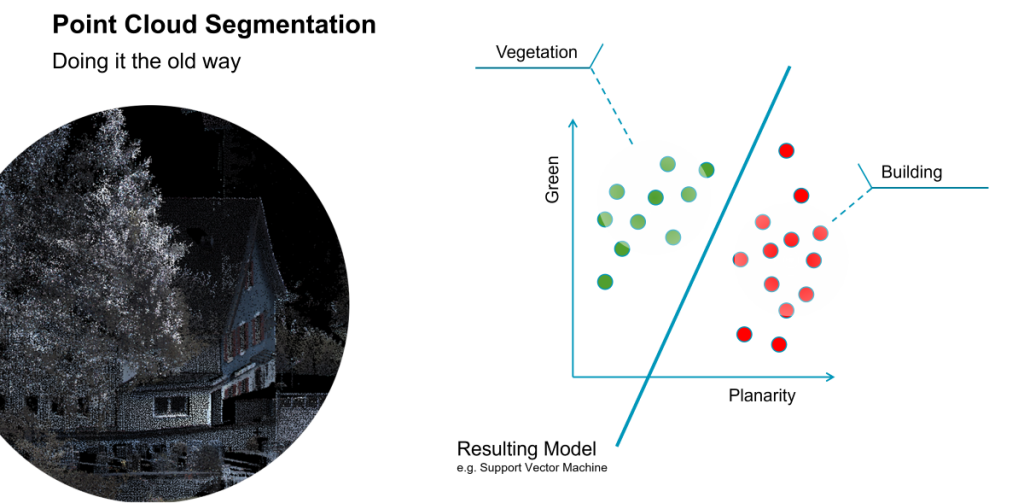

“From human labelling of training data, we know the correct answer for each colour/planarity pair,” said Metzler. “The ‘traditional’ machine learning algorithm is now based on this data, deciding how best to separate the clusters by drawing a 2D line. In this simplified case (see Figure 2), the line defines the model as a result of the training process.”

“When we apply this model to new data, we can compute, for example, the features green and planarity for a point. If it lies on the left side of the line, the resulting class is vegetation,” said Metzler. “If it is on the right side of the line, it could be a building. But in reality, we do not just have two features, but as many as 64. Then the line becomes a hyperplane, splitting the 64-dimensional feature space.”
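To make the idea of "drawing a line" concrete, here is a minimal sketch using a perceptron, one of the simplest linear classifiers. It is an illustration only: the article does not say which algorithm was actually used, and the feature values, labels, and function names below are invented for the example. Each point is reduced to two hypothetical features scaled from 0 to 1 (greenness and planarity), and training nudges a line until it separates the labelled samples.

```python
from typing import List, Tuple

# Each sample is (features, label). Features are hypothetical and scaled 0..1:
# [greenness, planarity]. Label +1 means vegetation, -1 means building.
Sample = Tuple[List[float], int]

TRAINING: List[Sample] = [
    ([0.9, 0.1], +1), ([0.8, 0.2], +1), ([0.7, 0.15], +1), ([0.85, 0.3], +1),
    ([0.2, 0.9], -1), ([0.3, 0.8], -1), ([0.4, 0.95], -1), ([0.1, 0.7], -1),
]


def score(weights: List[float], bias: float, features: List[float]) -> float:
    """Signed distance-like value: positive on one side of the line, negative on the other."""
    return sum(w * f for w, f in zip(weights, features)) + bias


def train_perceptron(samples: List[Sample], epochs: int = 100, lr: float = 0.1):
    """Adjust the line whenever a labelled sample falls on the wrong side."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for features, label in samples:
            if label * score(weights, bias, features) <= 0:  # misclassified
                weights = [w + lr * label * f for w, f in zip(weights, features)]
                bias += lr * label
                errors += 1
        if errors == 0:  # every training sample is on the correct side
            break
    return weights, bias


def classify(weights: List[float], bias: float, features: List[float]) -> str:
    return "vegetation" if score(weights, bias, features) > 0 else "building"


weights, bias = train_perceptron(TRAINING)
print(classify(weights, bias, [0.75, 0.2]))   # green, not very planar
print(classify(weights, bias, [0.25, 0.85]))  # not green, very planar
```

Nothing in the code is specific to two features. With 64 values per point, the same weights-plus-bias model describes a hyperplane rather than a line.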

Combinations of features could be evaluated in this manner. Per the example, green points with little planarity might be an indication of vegetation. However, colour would not necessarily add to the classification. Not all vegetation is consistently green (especially seasonally). A green point could also belong to a house painted green, or to a green car, both of which would have a higher level of planarity.

“We defined a set of features typical for each point and computed these,” said Metzler. “And then the algorithm had to determine, from a training process where we fed in a lot of point clouds, a correct prediction. From that, it finds out what are the important features for each class—it learns how to map input to output.”

That was how it worked in the past - machine learning at a rudimentary level.

The takeaway is that in traditional machine learning, the features and how they were recognised were hand-crafted. The model, and how to separate the features, is based on labelled training data. There were always practical limitations to such approaches. Traditional methods were very slow, could not evaluate massive point clouds very efficiently, and often required manual intervention afterwards. Many end users found the traditional classification tools of years past to be quite amazing, but so, for instance, did satellite surveying seem when there was only one constellation. And we know how powerful multi-constellation satellite surveying has become. The same goes for the evolution of point cloud classification.

Fast-forward to the era of super-fast and precise laser scanners. I remember trying to use some old scanner software on a large and dense point cloud captured with a present-day scanner—excruciatingly slow and incomplete. Advanced AI was much needed and has been integrated at the right time.

Self-Taught

Today, deep learning is used without hand-crafted features. “In deep learning, the algorithms learn the features and the weighting. There is no hand crafting of features anymore,” said Metzler. “It becomes more difficult to explain as there is a ‘black box’ element to the process.”

When it comes to these developments, the term “black box” should not be taken as a negative. “The proof is in the pudding,” as the old saying goes. Or in this case, the proof is in the classified point cloud: faster and better.

Deep learning is based on neural networks. It is like a huge black box where the algorithm tends to find out on its own what the features are.

“We don’t have to define planarity, linearity, or colour. The network just finds that out from the data automatically,” said Metzler. “What are the relevant features? It has got even more abstract as it is not readily apparent what the neural networks learned.”

However, it is well known what training data was fed into the solution, how well the neural network performed, how well it parsed out vegetation, houses, street furniture, construction equipment, and many other classes.

“The clue of deep learning is the idea that these features are not hand-crafted by a machine learning engineer,” said Dr. Bernd Walser, Vice President R&D Reality Capture Division at Leica Geosystems (part of Hexagon). “But instead, based on the input data, the network finds the best features and their weighting for a specific object class on its own during the training process. When the user runs the PCC process, the input and output of their data is compared to what was learned from the input and output of the training data.”

Training data is key, and Metzler related a prime example of why. “When we were in the research phase, we trained the AI on outdoor data that was readily available. So, buildings, vegetation, etc., here in Switzerland. It was therefore very good at classifying Swiss buildings and vegetation…everything. And then we had HxGN LIVE in Las Vegas, where our product managers wanted to demonstrate this new feature. They captured some data out in the nearby street. It mistook palm trees for columns on buildings. We later took this data from Las Vegas and trained a new model and it then performed well recognising palm trees.”

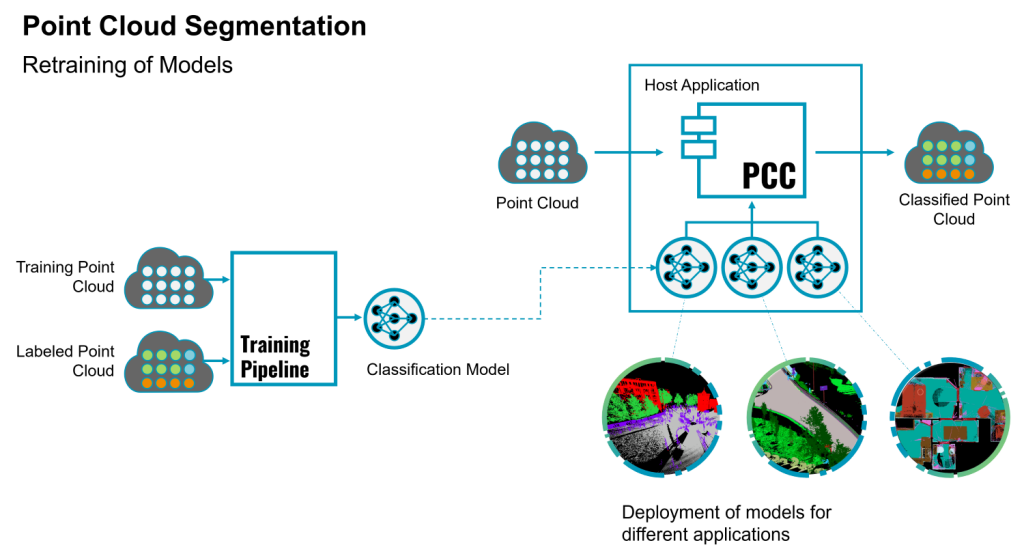

Training models is not a one-time task. Metzler said that they continually receive user-captured data, via their partner network, from many parts of the world. A metamodel is developed, with a human identifying what classes different example areas of the point cloud represent. The software learns from this and then can automatically assign classes. (See Figure 3.) They continue the training process, and update models in software releases.

In Workflows

The benefit of AI-based PCC is not just in saving time processing massive amounts of data. If you consider productivity more holistically, producing accurate and complete classified data will save even more time in downstream applications. The fidelity of classified data to reality is of utmost importance. AI-based PCC is not only more efficient, it can also yield higher fidelity than traditional methods.

“For instance, if the goal is to capture the interior of a building, and then with one of our other software tools to generate a BIM or CAD model, proper classification speeds that process,” said Metzler. “It’s a help if you can identify ahead of time what are the doors, windows, floor and ceiling. You can hide all of the other points, fit planes for the floor and ceiling, and so on.”
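As a rough illustration of what "hide the other points and fit planes" might look like once every point carries a class label, the sketch below filters a tiny, made-up point cloud by class and fits a least-squares plane of the form z = a·x + b·y + c to the floor and ceiling. The record layout, class names, and helper functions are assumptions for the example and are not taken from any Hexagon product.

```python
from typing import List, Tuple

# Hypothetical record layout: x, y, z in metres plus the class label from PCC.
Point = Tuple[float, float, float, str]


def select_class(points: List[Point], label: str) -> List[Point]:
    """Keep only the points of one class; everything else is effectively hidden."""
    return [p for p in points if p[3] == label]


def det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(points: List[Point]) -> Tuple[float, float, float]:
    """Least-squares fit of z = a*x + b*y + c via the normal equations; returns (a, b, c)."""
    n = float(len(points))
    sx = sum(p[0] for p in points)
    sy = sum(p[1] for p in points)
    sz = sum(p[2] for p in points)
    sxx = sum(p[0] * p[0] for p in points)
    syy = sum(p[1] * p[1] for p in points)
    sxy = sum(p[0] * p[1] for p in points)
    sxz = sum(p[0] * p[2] for p in points)
    syz = sum(p[1] * p[2] for p in points)
    normal = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]
    d = det3(normal)
    if abs(d) < 1e-12:
        raise ValueError("points are collinear or too few to define a plane")
    coeffs = []
    for col in range(3):  # Cramer's rule: swap one column for the right-hand side
        m = [row[:] for row in normal]
        for r in range(3):
            m[r][col] = rhs[r]
        coeffs.append(det3(m) / d)
    return coeffs[0], coeffs[1], coeffs[2]


# Made-up sample data: a level floor, a level ceiling, and some clutter.
corners = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0), (4.0, 3.0), (2.0, 1.5)]
cloud: List[Point] = (
    [(x, y, 0.0, "floor") for x, y in corners]
    + [(x, y, 2.5, "ceiling") for x, y in corners]
    + [(0.0, 1.0, 1.2, "wall"), (2.0, 1.0, 0.9, "furniture")]
)

floor = fit_plane(select_class(cloud, "floor"))
ceiling = fit_plane(select_class(cloud, "ceiling"))
room_height = ceiling[2] - floor[2]  # meaningful here because both planes are level
print(f"room height: {room_height:.2f} m")
```

This form of plane cannot represent vertical surfaces, so walls would need a different parameterisation. The point is only that fitting becomes straightforward once classification has removed everything that is not floor or ceiling.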

Removing artifacts, or as some of us call them, “ghosts”, in the point cloud is an essential step, even before full PCC. People and cars passing through the field of view of the scanner are the most common source of artifacts. Full classification can also help you remove, or simply enable turning off, classes you are not interested in or do not wish to export to downstream users. In the spirit of “map once, use many times”, filtering for relevancy is a very powerful tool.

For instance, if a mobile mapping system is being driven through a town and the goal is to identify trees for a vegetation cadastre, the ability to automatically determine the parameters of the trees, like the height or the crown diameter, is highly desirable. If we can isolate the vegetation first and then isolate the points from one type of specific tree, then a simple algorithm can automatically define these parameters. The trees can be further classified and input into a GIS. Another workgroup might be interested in the state of guardrails; how tall are they and what condition are they in? Algorithms can easily further classify them by height, and another could look for irregularities in the shape of the top rail, to identify those that may have been damaged by vehicles.

Repeating this along the whole track, kilometres of motorways can be analysed automatically in a matter of minutes or seconds, which is a significant advantage compared to a manual workflow. The possibilities are endless, but starting with clean and classified point clouds is essential for any such algorithmic magic to work.
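The "simple algorithm" for tree parameters could be as plain as the sketch below. It assumes the classification and isolation steps have already grouped vegetation points by individual tree; the tree identifiers, the sample coordinates, and the choice of the larger horizontal bounding-box extent as a stand-in for crown diameter are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

XYZ = Tuple[float, float, float]  # x, y, z in metres


@dataclass
class TreeParameters:
    height: float
    crown_diameter: float


def tree_parameters(points: List[XYZ]) -> TreeParameters:
    """Estimate height and crown diameter from the points of one isolated tree."""
    if not points:
        raise ValueError("no points supplied for this tree")
    xs, ys, zs = zip(*points)
    height = max(zs) - min(zs)
    # Crude crown estimate: the larger of the two horizontal extents.
    crown_diameter = max(max(xs) - min(xs), max(ys) - min(ys))
    return TreeParameters(height, crown_diameter)


def survey(trees: Dict[str, List[XYZ]]) -> Dict[str, TreeParameters]:
    """Repeat the same measurement for every tree found along the route."""
    return {tree_id: tree_parameters(pts) for tree_id, pts in trees.items()}


# Made-up sample: one tree with a trunk base, trunk top, and a few crown points.
trees = {
    "tree-001": [
        (0.0, 0.0, 0.0), (0.0, 0.0, 5.0),
        (-2.0, 0.0, 6.0), (2.0, 0.0, 6.0),
        (0.0, -1.5, 6.0), (0.0, 1.5, 8.0),
    ],
}

for tree_id, params in survey(trees).items():
    print(tree_id, params)
```

A production tool would likely use something more robust against stray points, but the structure is the same: classify, isolate, then measure.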

Productisation

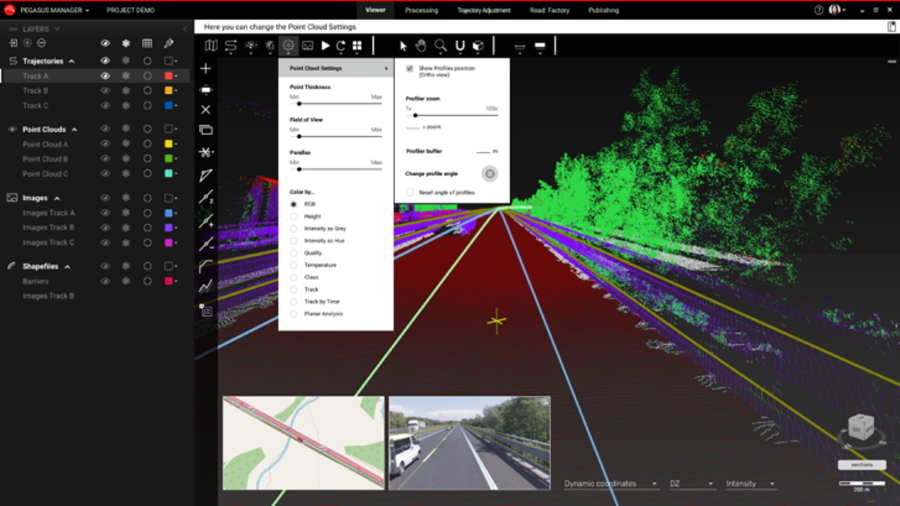

For Hexagon, AI-based point cloud classification is integrated as a feature in Leica Cyclone REGISTER 360 PLUS, and the Cyclone REGISTER 360 PLUS (BLK Edition), Leica Cyclone 3DR, Leica Pegasus OFFICE and in AGTEK REVEAL software. Walser notes that while Cyclone REGISTER 360 has elements of AI-based PCC integrated, the main functions are to produce clean, fully registered point clouds, and many customers will choose to utilise Cyclone 3DR to do more extensive PCC.

“For different use cases these software products support different models for point cloud classification operating on different sensor data and providing a variety of defined output classes,” said Walser. “There are models that support classes tailored to an indoor scenario like floor, ceiling, wall, door, window, staircase, etc. and other models for the segmentation of outdoor scans with classes like terrain, low-vegetation, high-vegetation, buildings, street furniture, etc. and models for operating on a motorway or railway scenario. Hexagon Geosystems is working on increasing its portfolio of models for even more applications to better support the needs of our customers in the field of automated point cloud processing.”

Various models created as new training data is integrated by Metzler’s team are disseminated through product releases. If someone is using Pegasus OFFICE or Cyclone 3DR, they may be using many of the same models. However, there may be different models in specific software. For instance, AGTEK REVEAL may have models focused on features of construction sites, like construction equipment and stockpiles.

Three of Three

As an old saying goes: “There is fast, there is accurate, and there is affordable. You only get to pick two.” I take umbrage at that worn-out axiom. Why can’t we have all three? For reality capture, this is possible.

If you compare, by one unit of measure (points captured), laser scanning is vastly cheaper than conventional methods. Yes, it can entail a substantial upfront investment in hardware, and then software purchases/licenses, but still vastly cheaper in the long run. Data collection is fast, precisions and densities have improved, and elements of simplicity have streamlined workflows and can reduce procedural errors.

Hexagon is not alone in advancing such tech. We see it in varying degrees across the industry. But thank you to Hexagon for arranging for me to speak with key folks in its reality capture R&D team; it was quite educational.

Pre-processing steps, like AI-based PCC, help ensure success in downstream processing and analysis. With all the alarmism about AI, it sure is refreshing to see such a great application of AI for a change.