[Editor’s note: this blog post is part one of two. Stay tuned for the conclusion early next week.]

The buzz (sorry, couldn’t resist) around UAVs is undeniable, but if I learned anything from laser scanning, it’s that you shouldn’t quote your capabilities from the manufacturer’s tech sheet.

With UAVs, this is even more of an issue, as there are so many more variables to contend with. While I often think of the UAV as little more than a flying tripod, the fact is that flight control and georeferencing options can greatly affect the outcome of a project. Then there is the question of which camera to use, and that’s all before you even consider the software you intend to use for processing. None of this, however, seems to deter the plethora of UAV-based service providers that call and email me each and every week looking for work.

What it comes down to is this: I don’t believe most of their claims. I simply do not understand how they can achieve the accuracies that they claim to attain. So, I decided to start running a few tests to see what was not just possible, but predictably achievable in a project setting, outside of a lab.

Before we get into the testing, a few caveats. I have very little experience with aerial mapping and fewer than 10 completed mapping projects using UAVs. Given the growth in my knowledge and the ever-increasing capabilities of UAS, I am sure that, whatever the results, they are the worst I will reliably achieve in my career! However, I’m hardly alone in that camp. Many new users are entering this space as service providers, users of the data, and/or contractors of these services. Instead of dropping in here as if I’m the expert, I thought it might prove more useful to share the learning curve as I figure out when and where UAVs are most useful.

Lastly, I am attempting to use UAVs for mapping. While I may use them for visualization or inspection at some point, that is not the focus of this series of tests. I’m far less interested in pretty pictures than I am in accurate pictures.

Testing Accuracy

As you might imagine, my first quest was to quantify accuracy and see what I could pull from the resulting data. To that end, we set up a test. We had a section of road that was newly constructed and not yet open to the public. We were already contracted to map it conventionally, so our first test was to fly the area and see how accurately the UAV data matched the conventional survey. This would help us determine whether to choose a UAV for this type of work in the future.
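For readers who want to run the same kind of comparison, one common way to summarize it is to difference the UAV surface against surveyed check points and report the mean error (bias) and the root-mean-square error (RMSE). The Python below is a minimal sketch, assuming you already have matched elevation pairs; the function name and the sample elevations are hypothetical, not our project data, and this is not how Civil 3D reports its comparisons. The 1.96 × RMSE figure follows the NSSDA convention for vertical accuracy at 95% confidence.

```python
import math


def vertical_accuracy(pairs):
    """Summarize UAV-minus-survey elevation differences at check points.

    pairs: list of (survey_z, uav_z) tuples measured at the same locations.
    Returns (mean_error, rmse, nssda_95), where nssda_95 = 1.96 * RMSE is the
    NSSDA vertical accuracy at 95% confidence (assumes normally distributed errors).
    """
    if not pairs:
        raise ValueError("need at least one check point")
    diffs = [uav_z - survey_z for survey_z, uav_z in pairs]
    mean_error = sum(diffs) / len(diffs)
    rmse = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return mean_error, rmse, 1.96 * rmse


# Hypothetical check-point elevations in feet (illustrative only, not project data).
checks = [(100.00, 100.08), (101.50, 101.44), (99.20, 99.31), (102.75, 102.80)]
bias, rmse, acc95 = vertical_accuracy(checks)
print(f"mean error {bias:+.3f} ft, RMSE {rmse:.3f} ft, 95% accuracy {acc95:.3f} ft")
```

Reporting the bias separately is often useful: a consistent offset tends to point at control or datum issues, while scatter around the mean tends to point at the imagery and processing.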

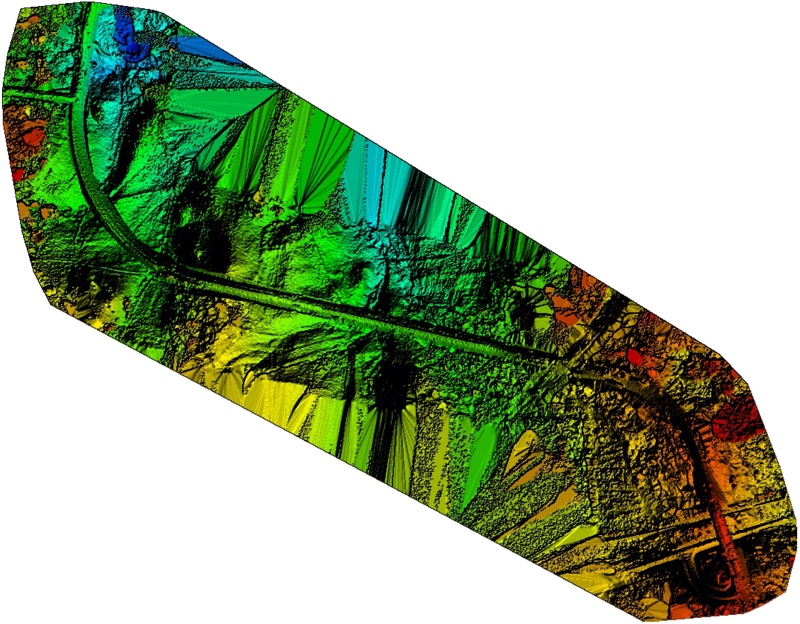

We also decided to laser scan a topsoil pile onsite so we could perform volumetric comparisons between the two systems. Lastly, we had access to a second UAV, and a much better camera, so we opted to cover the product pile with this unit as well for comparison.
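As a rough illustration of what a volumetric comparison involves, here is a minimal grid-method sketch in Python: each surface is sampled on the same regular grid, volume is summed cell by cell above a flat base plane, and the two totals are compared. The flat base, the 1 m cells and the tiny height grids are hypothetical simplifications. Real stockpile work usually uses a surveyed or triangulated toe surface, and Cyclone’s own volume tools may compute this differently.

```python
def grid_volume(heights, cell_size, base_z):
    """Volume above a flat base plane from a regular grid of surface heights.

    heights: 2D list of elevations, with None where a cell has no data.
    cell_size: grid spacing, in the same linear units as the heights.
    Cells at or below base_z contribute nothing.
    """
    cell_area = cell_size * cell_size
    total = 0.0
    for row in heights:
        for z in row:
            if z is not None and z > base_z:
                total += (z - base_z) * cell_area
    return total


def percent_difference(test, reference):
    """Signed difference of test relative to reference, in percent."""
    return 100.0 * (test - reference) / reference


# Hypothetical 3 x 3 surfaces (metres) on 1 m cells, base plane at 10 m.
scan_grid = [[10.0, 11.0, 10.0], [11.0, 13.0, 11.0], [10.0, 11.0, 10.0]]
uav_grid = [[10.0, 11.2, 10.0], [11.1, 13.3, 10.9], [10.0, 11.1, 10.0]]

scan_volume = grid_volume(scan_grid, 1.0, 10.0)
uav_volume = grid_volume(uav_grid, 1.0, 10.0)
diff_pct = percent_difference(uav_volume, scan_volume)
print(f"scan {scan_volume:.2f} m3, UAV {uav_volume:.2f} m3, difference {diff_pct:+.1f}%")
```

Treating the scan as the reference mirrors the setup described here, where the C10 point cloud serves as the scientific control.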

Tech Details

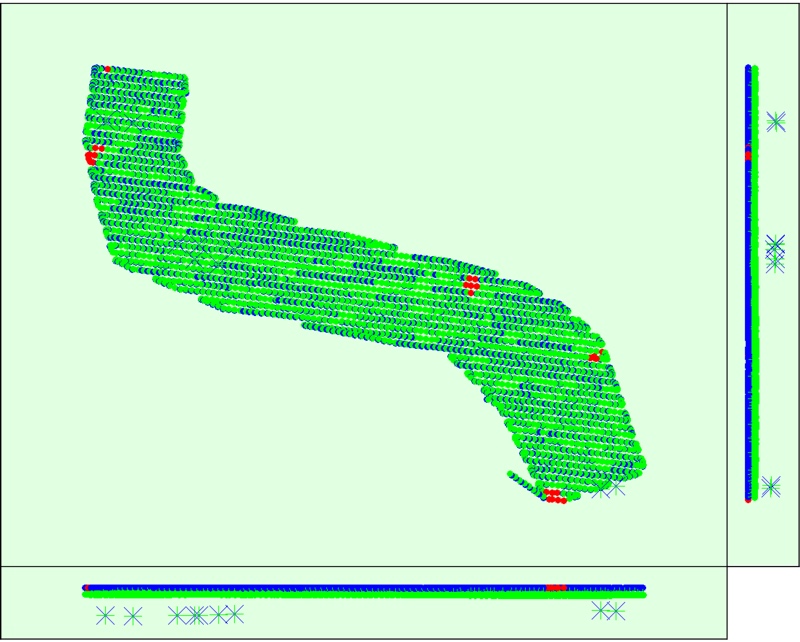

We started with what I will refer to as the “basic drone”: the DJI Phantom 3 Professional with its standard 12-megapixel camera. We chose a pre-planned flight in a standard grid, with 80% frontal overlap and 65% side overlap. These values were chosen to be five percentage points above the minimums suggested by our processing software, Pix4D (75% and 60%, respectively). All images were collected at an altitude of 240 ft (73 m) relative to the elevation of the Phantom’s takeoff position. In the end, we took 2,051 images to cover the 309-acre (1.25 km²) site.
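To see what these flight settings imply on the ground, the ground sampling distance (GSD) and the spacing between exposures and flight lines can be worked out from the camera geometry. The Python below is a minimal sketch that assumes nominal Phantom 3 Professional figures (3.61 mm focal length, 6.17 mm sensor width, 4000 × 3000 pixel images) and flat terrain at the takeoff elevation. Those camera numbers are assumptions, so check them against your own camera calibration before relying on the result.

```python
def gsd_m(altitude_m, focal_mm, sensor_width_mm, image_width_px):
    """Ground sampling distance (metres per pixel) for a nadir image over flat ground."""
    return (sensor_width_mm * altitude_m) / (focal_mm * image_width_px)


def photo_spacing_m(gsd, pixels_in_direction, overlap):
    """Distance between exposures (or flight lines) for a given overlap fraction."""
    footprint = gsd * pixels_in_direction
    return footprint * (1.0 - overlap)


ALT_M = 73.0                # flight height above the takeoff point, from this test
FOCAL_MM = 3.61             # assumed nominal Phantom 3 Pro focal length
SENSOR_W_MM = 6.17          # assumed 1/2.3-inch sensor width
IMG_W, IMG_H = 4000, 3000   # assumed image size in pixels

gsd = gsd_m(ALT_M, FOCAL_MM, SENSOR_W_MM, IMG_W)
# Assumes the short image side (3000 px) points along track, the long side across track.
forward = photo_spacing_m(gsd, IMG_H, 0.80)   # 80% frontal overlap
side = photo_spacing_m(gsd, IMG_W, 0.65)      # 65% side overlap
print(f"GSD ~ {gsd * 100:.1f} cm/px, shot spacing ~ {forward:.1f} m, line spacing ~ {side:.1f} m")
```

Because the altitude is relative to the takeoff point, any terrain that rises or falls across a site this size changes the actual GSD and overlap from one part of the flight to another.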

For the product pile area, we also flew a Freefly Alta carrying a Canon EOS 5DS R (50.6 megapixels) on a Freefly Mōvi gimbal. As our control (scientific “control,” not survey “control”) for the product pile, we scanned it with a Leica ScanStation C10. That point cloud was georeferenced to the site survey control, as was the Phantom data. The survey control was set using RTK tied to a DOT network, per project specifications. The limited accuracy of that RTK control was one of the reasons we scanned the product pile for a more accurate comparison. I started to re-run the control to a higher accuracy but decided against it, since this was meant to be a real-world project test: the budget would not warrant that on an actual project, so we stuck with that mindset.
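Georeferencing a local dataset to survey control comes down to fitting a transformation from matched control points and checking the residuals. The Python below is a deliberately simplified stand-in for what registration software does: a 2D similarity (Helmert) transform in plan plus a single vertical shift, solved by least squares. It is not Cyclone’s registration algorithm, and the function names and synthetic points are hypothetical. One thing it does illustrate: residuals at the control only tell you how well the data fits the control, not how good the control itself is, so the accuracy of RTK-derived control caps everything tied to it.

```python
import math


def fit_helmert_2d_plus_z(src, dst):
    """Least-squares fit of X = a*x - b*y + tx, Y = b*x + a*y + ty, Z = z + dz.

    src, dst: equal-length lists of (x, y, z) tuples for the same control points.
    Returns (a, b, tx, ty, dz); scale = hypot(a, b), rotation = atan2(b, a).
    """
    n = len(src)
    if n < 2 or n != len(dst):
        raise ValueError("need at least two matched control points")
    xc = sum(p[0] for p in src) / n
    yc = sum(p[1] for p in src) / n
    Xc = sum(q[0] for q in dst) / n
    Yc = sum(q[1] for q in dst) / n
    num_a = num_b = denom = 0.0
    for (x, y, _), (X, Y, _) in zip(src, dst):
        dx, dy, dX, dY = x - xc, y - yc, X - Xc, Y - Yc
        num_a += dx * dX + dy * dY
        num_b += dx * dY - dy * dX
        denom += dx * dx + dy * dy
    a, b = num_a / denom, num_b / denom
    tx = Xc - a * xc + b * yc
    ty = Yc - b * xc - a * yc
    dz = sum(q[2] - p[2] for p, q in zip(src, dst)) / n
    return a, b, tx, ty, dz


def apply_transform(params, point):
    """Map one local (x, y, z) point into control coordinates."""
    a, b, tx, ty, dz = params
    x, y, z = point
    return (a * x - b * y + tx, b * x + a * y + ty, z + dz)


def rms_residual(params, src, dst):
    """3D RMS misfit at the control points after transformation."""
    sq = 0.0
    for p, q in zip(src, dst):
        t = apply_transform(params, p)
        sq += sum((ti - qi) ** 2 for ti, qi in zip(t, q))
    return math.sqrt(sq / len(src))


# Synthetic check: rotate 30 degrees and shift into hypothetical site coordinates.
theta = math.radians(30)
local = [(0, 0, 0), (10, 0, 1), (0, 10, 2), (10, 10, 3)]
site = [(1000 + x * math.cos(theta) - y * math.sin(theta),
         2000 + x * math.sin(theta) + y * math.cos(theta),
         50 + z) for x, y, z in local]
params = fit_helmert_2d_plus_z(local, site)
print(params, rms_residual(params, local, site))
```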

We processed the data using a combination of Pix4D and Leica Geosystems Cyclone, because of the lack of a cleaning workflow in Pix4D. Admittedly, I am self-taught on Pix4D, but I can’t seem to find a way to clean out vegetation or reduce the noise in surface data to my satisfaction. Heavy vegetation is thrown out of the data, but light vegetation, as well as cars, people, tripods, etc., is baked into the surface mesh. My workaround at this point is to export a point cloud from Pix4D so that I can clean and re-mesh it in Cyclone. I also used Cyclone for the volumetric calculations. The comparison to survey data for the overall project area, however, was performed in AutoCAD Civil 3D.
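Pix4D and Cyclone each have their own tools, and I’m not claiming either works this way, but the basic idea behind stripping cars, people, tripods and light brush from a surface can be shown with one of the simplest ground filters: keep only the lowest point in each grid cell. The Python below is a hypothetical sketch of that swapped-in technique; production ground filters are considerably more robust.

```python
import math


def lowest_point_per_cell(points, cell_size):
    """Keep only the lowest point in each XY grid cell.

    A crude ground filter: objects standing on the ground (cars, people,
    tripods, light brush) sit higher than the bare surface in the same cell,
    so they are dropped as long as each cell also contains some ground.
    """
    lowest = {}
    for x, y, z in points:
        key = (math.floor(x / cell_size), math.floor(y / cell_size))
        if key not in lowest or z < lowest[key][2]:
            lowest[key] = (x, y, z)
    return list(lowest.values())


# Hypothetical points: ground plus a 'tripod' return in each 1 m cell.
cloud = [(0.2, 0.2, 10.0), (0.7, 0.4, 11.8), (1.3, 0.5, 10.1), (1.6, 0.9, 12.5)]
ground = lowest_point_per_cell(cloud, 1.0)
print(ground)
```

The cell size is the main knob: too small, and a car roof can fill a whole cell on its own; too large, and sloped surfaces such as pile faces and curbs get biased low, since only the lowest return in each cell survives. Low noise points below the true surface also pass straight through this filter, so they need separate handling.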

Data Collection

The flights went well, although it was a bit windy (a steady 10-15 mph). This did not cause any visible problems with the data, but it did lengthen the flight time by requiring more pit stops for fresh batteries than on a calm day. The flights took place between 11am and 1pm to reduce shadows. Conditions were mostly cloudy. The DJI Phantom 3 performed as advertised along its pre-programmed flight path. The Freefly Alta was deployed in a free-flight configuration, with a pilot navigating around the product pile while another technician operated the camera. Images were taken at stopping/hover points chosen by the camera operator.

Before we go too far into this system, I’ll let you know that we did not get usable results. It is obviously superior tech; however, not having a location for the product pile before mobilization, combined with the free-flight method, is most likely the source of our problem(s). My opinion is that we failed to maintain a consistent distance from the product pile: we changed altitude while circling it, so the ground size of a pixel would have varied widely between images within the dataset. Also, because the pile was relatively small, the onboard GPS positions all appeared very similar, which caused Pix4D to disregard the initial location data. I could not find a way to override this setting in the software. Either way, Pix4D did not like it at all. I am undertaking more trials of using cameras to map 3D objects (as opposed to landforms), so I’ll get back to you if I find a way to salvage the Freefly data.
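To put a number on the altitude problem: object-space pixel size scales linearly with the camera’s distance from the subject, so any spread in standoff distance becomes the same spread in pixel size. The Python below uses the Canon EOS 5DS R’s full-frame sensor (36 mm wide, 8688 pixels across); the 35 mm lens and the standoff distances are hypothetical values chosen only for illustration.

```python
def pixel_size_mm(distance_m, focal_mm, sensor_width_mm, image_width_px):
    """Object-space pixel size in millimetres at a given camera-to-subject distance."""
    return (sensor_width_mm * distance_m * 1000.0) / (focal_mm * image_width_px)


SENSOR_W_MM = 36.0   # full-frame sensor width (Canon EOS 5DS R)
IMG_W_PX = 8688      # 5DS R image width in pixels
FOCAL_MM = 35.0      # hypothetical lens focal length

standoffs_m = [10.0, 15.0, 25.0]   # hypothetical distances while circling the pile
sizes = [pixel_size_mm(d, FOCAL_MM, SENSOR_W_MM, IMG_W_PX) for d in standoffs_m]
spread = max(sizes) / min(sizes)

for d, s in zip(standoffs_m, sizes):
    print(f"{d:5.1f} m -> {s:.2f} mm/px")
print(f"largest/smallest pixel footprint: {spread:.1f}x")
```

Because the ratio depends only on the distances, the same 2.5x spread would appear with any lens; matching features across images at such different scales is one plausible reason the processing struggled.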

Check back next week, when I’ll post the full results of the volumetric comparison between the C10 and the drone and cover them in detail. However, I’d feel guilty bringing you this far without at least a preview!

Preliminary Results

Essentially, the results were comparable, and for some clients they may suffice. In many ways, however, they were nowhere near the accuracy levels so often quoted in the marketing materials we receive from drone service providers. Like many collection systems, a UAS has a lot of interdependent components, each capable of adding a bit of error to the overall product. Ferreting out which issues are causing my errors has led me down a path where I’ve had to learn about things like lens aberration, rolling shutters, and GSD-to-flight-speed ratios, and that’s before you get back into traditional survey issues surrounding ground control and the like.
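Two of these relationships are easy to sketch. Motion blur, in pixels, is ground speed times exposure time divided by GSD, which is where a GSD-to-flight-speed ratio comes from; and independent error sources are often combined by root-sum-square (RSS) into an overall error budget. The Python below is a minimal sketch with hypothetical numbers (a roughly 3 cm GSD, a 1/500 s shutter, two made-up 1-sigma error components). RSS assumes the components are independent, which the interdependent parts of a UAS will not always be, so treat the result as an approximation.

```python
import math


def motion_blur_px(ground_speed_m_s, exposure_s, gsd_m):
    """Number of pixels the ground moves across the sensor during one exposure."""
    return ground_speed_m_s * exposure_s / gsd_m


def max_speed_m_s(gsd_m, exposure_s, max_blur_px=0.5):
    """Fastest ground speed that keeps blur at or under max_blur_px pixels."""
    return max_blur_px * gsd_m / exposure_s


def rss(*components):
    """Root-sum-square of independent 1-sigma error components."""
    return math.sqrt(sum(c * c for c in components))


GSD_M = 0.031          # hypothetical ground sampling distance (m/px)
EXPOSURE_S = 1 / 500   # hypothetical shutter speed (s)

blur = motion_blur_px(10.0, EXPOSURE_S, GSD_M)   # at a hypothetical 10 m/s
limit = max_speed_m_s(GSD_M, EXPOSURE_S)
total = rss(0.03, 0.04)   # hypothetical components in metres, e.g. control and image measurement
print(f"blur {blur:.2f} px, max speed {limit:.2f} m/s, combined error {total:.3f} m")
```

A rolling shutter adds a separate distortion that grows with the platform’s motion during sensor readout; it is not modeled here.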

If there is one thing I want to leave you with at this point in the narrative, it’s that anyone operating a UAV for mapping needs to be familiar with all of these and know how to quantify their effect on his/her rig; otherwise I’d look elsewhere for a service provider.