Dense point clouds have their place. You want your point cloud to be dense, for instance, in applications where you can’t return to the site to take more measurements, or when you need to see every minute contour of a 3D space. There are also applications that would be better served by a leaner point cloud. That’s where Terabee comes in.

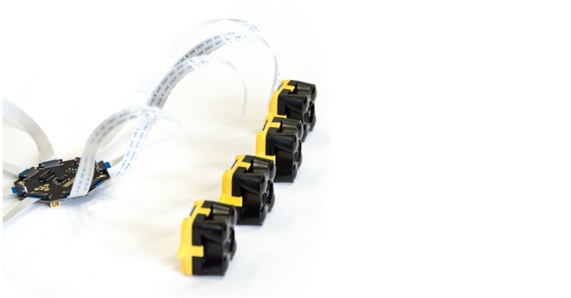

The company is known for its TeraRanger line of inexpensive single-pixel lidar distance sensors for robot localization, mapping, SLAM, and automation. Now, with the TeraRanger Hub Evo, it is taking a confident step into lean, “selective” 3D point clouds.

TeraRanger Hub Evo is a small, €99, octagonal PCB to which users can connect up to eight TeraRanger Evo lidar sensors in a custom configuration (these sensors use LEDs for ToF measurement, enabling them to have a “field of view,” or an area they measure instead of a single point). The hub powers the sensors, synchronizes the data, prevents crosstalk, and puts out a simple array of distance values in millimeters. Per Terabee, “in that way you can monitor and gather data from just the areas and axes you need and create custom point clouds, optimized to your application.” The suggested applications include, of course, robotics, automation, and even smart cities.
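To make the idea of a “custom point cloud” concrete, here is a minimal sketch of turning one frame from the Hub (an array of up to eight distances in millimeters) into 2D points. It assumes a hypothetical layout in which all sensors sit at the origin and point outward at 45° increments, and it assumes a reading of zero or less means “no valid target”. Neither is taken from Terabee’s documentation, and the function name `distances_to_points` is purely illustrative.

```python
import math
from typing import List, Optional, Sequence, Tuple

# Hypothetical mounting layout: sensor i points outward at i * 45 degrees,
# all mounted at the origin. A real installation would use measured poses.
MOUNT_ANGLES_DEG = [i * 45.0 for i in range(8)]


def distances_to_points(
    distances_mm: Sequence[int],
    angles_deg: Sequence[float] = MOUNT_ANGLES_DEG,
) -> List[Optional[Tuple[float, float]]]:
    """Convert one frame of per-sensor distances (mm) into 2D points (m).

    Assumption: a reading <= 0 means "no valid target". The Hub's actual
    encoding of out-of-range values may differ.
    """
    if len(distances_mm) != len(angles_deg):
        raise ValueError("need one mounting angle per sensor reading")
    points: List[Optional[Tuple[float, float]]] = []
    for d_mm, angle in zip(distances_mm, angles_deg):
        if d_mm <= 0:
            # Keep an empty slot so list indices still map to sensor ports.
            points.append(None)
            continue
        r = d_mm / 1000.0  # millimeters to meters
        theta = math.radians(angle)
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points
```

Calling `distances_to_points([1000, 0, 2000, 1500, 500, 0, 750, 1200])` yields at most eight points per frame, with `None` for the two sensors that saw nothing. That handful of values is the entire “cloud” the downstream software has to process.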

CEO Max Ruffo explains that the data generated by the Hub Evo sidesteps a number of challenges presented by denser point clouds.

“We understand that people often feel more secure by gathering millions of data points, but let’s not forget that every point gathered needs to be processed. This typically requires complex algorithms and lots of computing power, relying heavily on machine learning and AI as the system grows in complexity. And, as things become more complex, the computational demands of the system rise and the potential for hidden failure modes also increases.”

By way of example, Terabee recounts how it designed a custom lidar system for a mobile robot that wraps shipping pallets with film. The challenge was that the robot needed to handle the virtually random shapes of the loads on the pallet while still wrapping accurately and moving at speeds of up to 1.3 meters per second.

The solution used 16 sensors. The first array included three sensors facing to the side to keep the robot at the correct distance from the pallet, and five facing forward for collision avoidance. The second array included eight sensors that read the objects on the pallet. With the data from these sensors, a relatively lean stream of numbers compared to a dense point cloud, the robot can avoid hitting objects hanging off the side of the pallet and optimize its path to wrap the pallet quickly, accurately, and safely.
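As a rough illustration of why a 16-value stream is cheap to act on, the sketch below splits one frame into the three sensor groups described above and reduces each group to a simple check. The ordering of readings, the thresholds, and the names `evaluate_frame` and `Decision` are all hypothetical; this is not Terabee’s control logic, only a sketch of the kind of per-frame processing a lean stream allows.

```python
from dataclasses import dataclass
from typing import Sequence

# Hypothetical thresholds, chosen only for illustration.
SIDE_TARGET_MM = 400      # desired gap between robot and pallet
SIDE_TOLERANCE_MM = 50    # allowed deviation from that gap
STOP_DISTANCE_MM = 800    # forward obstacle closer than this -> stop


@dataclass
class Decision:
    too_close_to_pallet: bool
    too_far_from_pallet: bool
    obstacle_ahead: bool
    nearest_overhang_mm: int  # closest reading from the pallet-profile group


def evaluate_frame(readings_mm: Sequence[int]) -> Decision:
    """Reduce one 16-value frame to a few decisions.

    Assumed ordering: [0:3] side-facing, [3:8] forward-facing,
    [8:16] sensors reading the load on the pallet. All readings
    are assumed valid.
    """
    if len(readings_mm) != 16:
        raise ValueError("expected 16 readings")
    side = readings_mm[0:3]
    forward = readings_mm[3:8]
    profile = readings_mm[8:16]
    return Decision(
        too_close_to_pallet=min(side) < SIDE_TARGET_MM - SIDE_TOLERANCE_MM,
        too_far_from_pallet=max(side) > SIDE_TARGET_MM + SIDE_TOLERANCE_MM,
        obstacle_ahead=min(forward) < STOP_DISTANCE_MM,
        nearest_overhang_mm=min(profile),
    )
```

Each frame costs a few `min`/`max` comparisons over 16 integers, with no segmentation or model inference, which is the trade-off Ruffo describes: less raw data, but far less to compute and fewer places for hidden failures.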

Ruffo thinks Terabee could take this idea even further by reducing the number of sensors, making the processing leaner and computationally lighter still. He says the multi-sensor concept has already proven popular as a way for users to prototype with and deploy multiple sensors in applications ranging from robotics to smart cities. There may well be uses for it that no one has thought of yet.

For more information, see Terabee’s website.