Users of remote sensing tech love to discuss the tradeoffs of using 3D sensors or 2D sensors for the purpose at hand. In some cases, it can be hard to choose.

Ouster gained a lot attention earlier this year with the announcement of a few aggressively priced lidar sensors that promised to alleviate that difficulty. CEO and co-founder Angus Pacala told SPAR in March that its OS-1 lidar could use the “uniformity and quality of [its]measurements” to produce a rectilinear image that looks a lot like what you’d get from a camera–except gathered with an active sensor so it also works at night. As Ouster likes to put it, “the camera IS the lidar.”

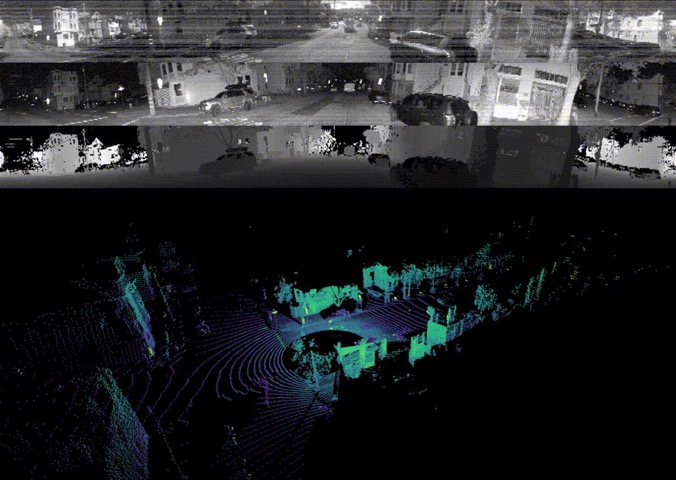

Last Friday, the company made good on this buy-one-get-one-free promise when it released a firmware upgrade that pushed the functionality to every OS-1 lidar. The system now outputs fixed-resolution depth images, signal-intensity images, and ambient images “in real time, all without a camera,” Ouster said. “The data layers are perfectly spatially correlated, with zero temporal mismatch or shutter effects, and have 16 bits per pixel and linear photo response.”

When SPAR caught up with Pacala for more information, he explained that the benefits of this technology are more extensive than it might seem at first. Some of the greatest implications, he argues, are in deep-learning applications.

2D/3D deep learning

Since each pixel of the OS-1’s lidar output is encoded with depth, signal, and ambient visual information, Pacala says that Ouster has been able to run the data though deep learning algorithms that were originally developed for cameras.

In one example video, Ouster shows what happens when they feed this rich 2D/3D data into an algorithm designed to analyze each pixel of a 2D image to recognize driveable roads, vehicles, pedestrians, and bicyclists. It works, and in real-time:

The per-pixel alignment of the data sets enables another cool trick. Once Ouster had the 2D masks as generated by the algorithm, it was trivial to map them back onto the 3D data “for additional real-time processing like bounding box estimation and tracking.”

Superpoint and deep learning

More exciting, says Pacala, is the results that Ouster achieved by feeding its 2D/3D hybrid data into a deep-learning network called SuperPoint.

First, some background. SuperPoint was developed and trained as a research project at Magic Leap. It finds what Pacala calls “key points,” which are “arbitrary locations of high contrast or texture or shape that can be identified reliably,” and then matches these points from one frame of a 2D video to the next. Here’s an animation of SuperPoint at work on a 2D video of a road whipping by an autonomous vehicle.

Source: SuperPoint on GitHub

Though networks like SuperPoint are very effective when you feed them 2D data, Pacala explains that there have been few attempts to use similar deep-learning networks to process 3D lidar data. (This would involve finding key points—shapes like edges or planes—and then tracking them across a sequence of point clouds.)

This lack of experimentation is why Ouster was surprised when they took one of their sensors’ data sets, separated it into its constituent data layers, and then fed those layers to the SuperPoint network independently. The 2D intensity and 3D depth data sets both produced usable results.

“Our demonstration is exciting,” says Pacala, “because it does the 2D-signal-intensity key point tracking well, and for the first time, we’re demonstrating you can do robust key point tracking with deep learning on the depth images directly. The deep learning algorithm is picking up on things like building edges and poles in amazingly robust ways.”

Why that matters

Tracking key points in a sequence of “images” is one of the “fundamental building blocks” for odometry algorithms, which process a sensor’s data to estimate its position and orientation in real time. By extension, these algorithms also estimate the position and orientation of whatever’s holding that sensor—whether that be a person, a UAV, or an autonomous vehicle. Notably, NASA used a 2D visual odometry algorithm on the Mars Exploration Rovers, but odometry algorithms can also be used to help position robots on earth, or UAVs, or AR headset. It almost goes without saying that odometry is important to SLAM.

As usual, there are tradeoffs when you’re picking a sensor for odometry. “Lidar odometry struggles in geometrically uniform environments like tunnels and highways,” says Pacala, “while visual [2D] odometry struggles with textureless and poorly lit environments.”

Ouster’s OS-1 offers the ability to perform 3D odometry AND visual odometry at the same time. That means it gives a system redundant data, enabling that system to use whichever method of odometry fits the current environmental conditions and offers the best results.

How is this better than using a lidar sensor and a camera separately? A multiple sensor set up introduces a new challenge: For good odometry, all sensors need to be tightly calibrated to produce data sets that match very closely in space and time. This is not a trivial task. Since Ouster is generating these different data layers with one sensor, rather than two or three, calibration becomes a non-issue. “That’s one of the really important aspects of our sensor,” Pacala says, “perfect spatial and temporal calibration between the data layers–out of the box”

In closing, Pacala told me he believes this demonstration suggests huge implications for SLAM. He adds that this is true not only for “self-driving cars, but any robot that needs to robustly navigate its environment.” He says that Ouster is already working with a number of customers in the drone, surveying, and delivery robot spaces.

For more information, see Ouster’s website to contact the company directly.