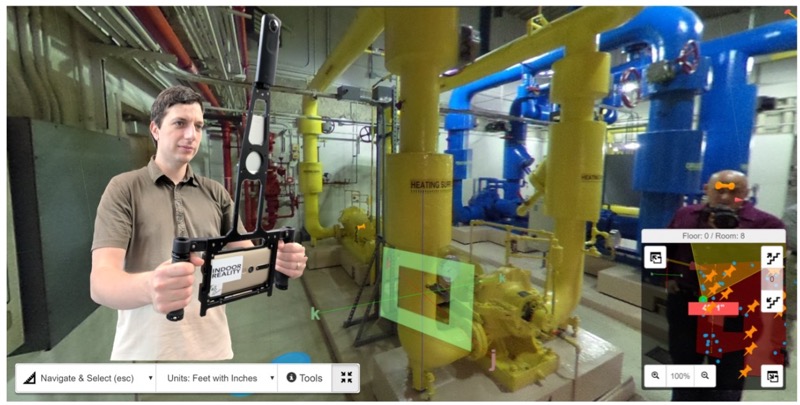

Indoor Reality is in the business of 3D mapping interiors and indoor assets. Its wearable and handheld hardware is already in use in construction, real estate, architecture, insurance, and, of course, facilities management.

The company first hit the market a few years ago with the IR1000, a 35-pound backpack that comes complete with lidar, cameras, and a complement of other sensors. Costing $150,000, this system enabled a user to scan 200,000 square feet a day. In 2017 the company released a handheld scanner called the IR500, which weighs only one pound, costs $3,000, and covers 60,000 square feet a day.

According to Avideh Zakhor, CEO and founder of Indoor Reality, the company’s future will include even more hardware, designed to meet the needs of an even wider user base. However, as I learned during a conversation with her last week, the company has also paired all of these mobile hardware solutions with a software platform that makes for an especially easy user experience. It offers a low-friction workflow, auto-generated deliverables, and an unexpectedly cutting-edge set of features.

Scan to data, quickly and automatically

The concept of operation is easy to understand. “You walk around inside a building,” Zakhor explains. “When you’re done, get to a place that has WiFi connectivity, upload it to our cloud where we do a bunch of number crunching, and then we automatically create data products that any user can access by logging into a web account.”

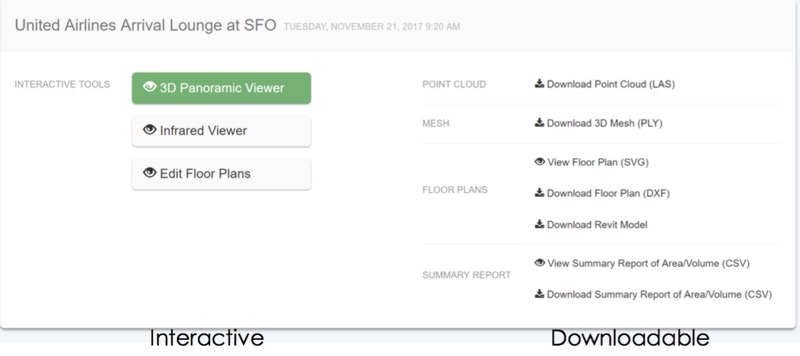

The Indoor Reality dashboard.

Throughout our talk, Zakhor returned to the issues of speed and automation. The capture step is much quicker than static scanning—Zakhor estimates that it takes 1/10 as long to capture any given space with Indoor Reality’s hardware as compared to traditional lidar. The cloud processing, which generates the data products, is much faster than processing on your desktop, and saves extra time since you can complete other work while it computes in the background.

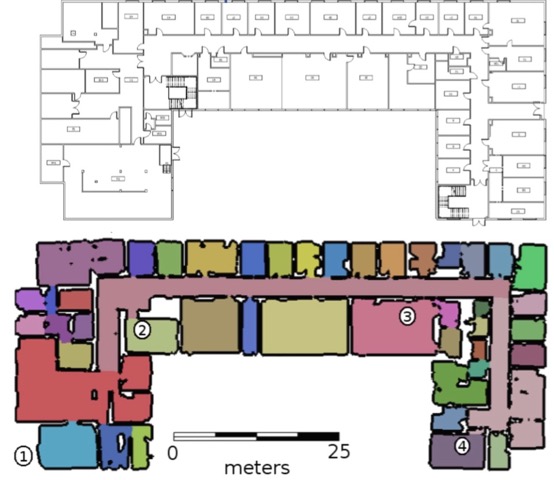

After processing is complete, users can open a simple dashboard to access downloadable files, including point clouds, 3D meshes, auto-generated floor plans, and even a summary report that includes figures for floor area, total surface area, room volume, total volume, and so on.

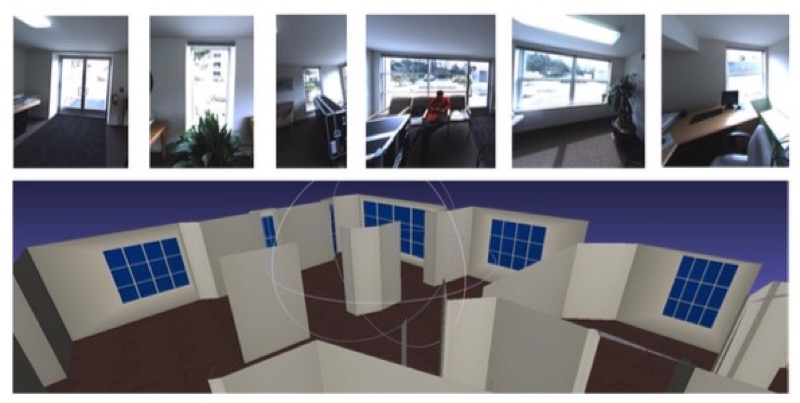

The 360° viewer

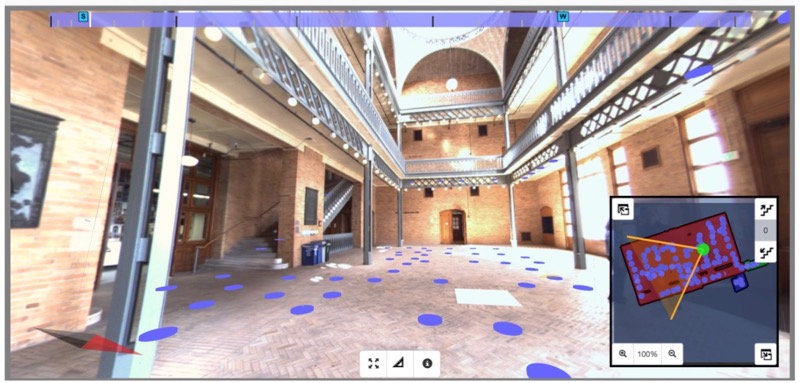

Interactive 3D data

More important, says Zakhor, is that the system produces a number of interactive data files, like a 3D panoramic viewer. Open this up in your browser (or using a VR headset), and you can virtually navigate a space or take measurements. “There are six or seven different modes of measurement,” says Zakhor. “You can measure along your axis of interest—along the vertical axis, along the major or minor axis of the room, or use snap measurement to measure the distance between two walls.”

Essentially, the user selects the right measurement mode, and the system constrains the measurements to that axis—making sure you don’t measure diagonally out from the wall, for instance, when you mean to measure the width of a window. These kinds of touches exemplify the user experience of the software platform.
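To make the idea of an axis-constrained measurement concrete, here is a minimal sketch in Python. It is not Indoor Reality's implementation, which the article does not describe; it assumes the two picked points are plain 3D coordinates and that the chosen mode supplies a direction vector. The function name `constrained_distance` and the sample coordinates are hypothetical.

```python
import math

def constrained_distance(p, q, axis):
    """Distance between points p and q measured only along `axis`.

    Illustrative assumption: p and q are (x, y, z) tuples and `axis`
    is any non-zero direction vector (e.g. the vertical axis or a
    room's major axis). The offset q - p is projected onto the axis,
    so any component perpendicular to the axis is ignored.
    """
    norm = math.sqrt(sum(a * a for a in axis))
    if norm == 0:
        raise ValueError("axis must be a non-zero vector")
    unit = [a / norm for a in axis]
    delta = [qi - pi for pi, qi in zip(p, q)]
    return abs(sum(d * u for d, u in zip(delta, unit)))

# Hypothetical picks: the second click drifts 0.3 units sideways.
VERTICAL = (0.0, 0.0, 1.0)
bottom = (1.0, 2.0, 0.5)
top = (1.3, 2.0, 1.7)

free = math.dist(bottom, top)                          # unconstrained, includes the drift
height = constrained_distance(bottom, top, VERTICAL)   # vertical component only
print(f"free: {free:.3f}  constrained: {height:.3f}")
```

The unconstrained distance picks up the sideways drift between the two clicks, while the constrained value reports only the component along the selected axis, which is the behavior the measurement modes are described as enforcing.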

While you’re in the 360° viewer, you can leave notes for other users by pinning photos and even audio files to specific assets. Hit the share button, and the system emails a list of the annotations to any user you select. This makes it easy for collaborators to exchange information and have “a running conversation about a particular issue.”

Floor plans and Revit models, with no point cloud tracing

In our conversation, Zakhor emphasized the speed of her system, and the degree to which it takes humans out of the loop. The floor-plan function takes these ideas even further.

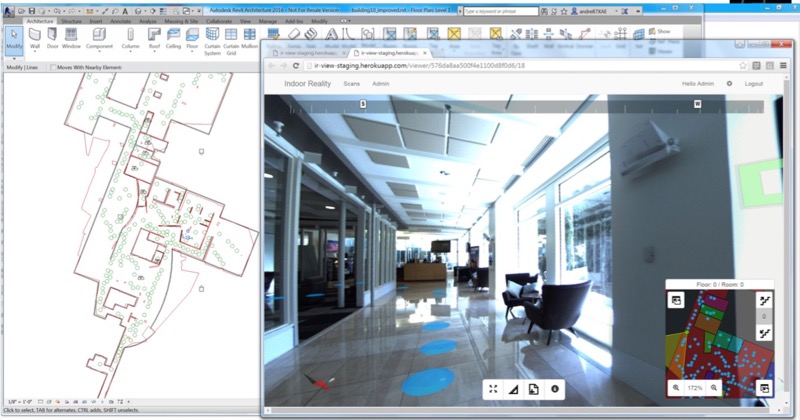

Users with simpler needs can open the automatically generated 2D floor plan and make simple corrections to finalize it, for instance adding a wall or combining two rooms into one. For users with more exacting needs, a single button press in the Indoor Reality dashboard downloads all the necessary pieces for making a quick Revit model: the auto-generated floor plan, the panoramic photographs, and a point cloud. Open these in the Revit plugin (below), and you’re good to go.

The Revit plugin

“Because we generate the floor plan automatically, we don’t have to trace point clouds,” Zakhor says. “It already gets the approximate location of the walls, so it has only to be refined.” Users do this by clicking on green dots in the floor plan to examine the panoramic imagery for the area they are working on modeling. “So what happens is you can view the web viewer, for example, to look at that door to see the door swing, and come back to the Revit model and fix it up. Or you can distinguish whether that’s a stack of boxes going to the ceiling, or whether it’s an actual column and come back to the Revit model and fix it up.”

All told, Zakhor says this two-step process of taking the auto-traced floor plan and refining it has proven to be three to five times faster than tracing each and every asset and feature by hand. She also argues that it has helped “democratize the 3D reality capture for architects, engineers, general contractors, or anyone who wants to rapidly create digital twins of indoor spaces during remodeling or restoration projects.”

A project, end to end

She shows me a data set for the United Airlines lounge in SFO, which Indoor Reality captured, and explains the project timeline from start to end. “It took us 30 minutes to walk every single square foot, the cloud processing time to generate all of those products automatically was one hour, and then the human intervention time to generate that fancy Revit model was five hours.” That comes to six and a half hours, end to end.

A quick list of even more features

Making the basic workflow simple to understand and removing the human from the loop for a great portion of the processing is impressive enough, but Indoor Reality has filled out the software with nearly every cutting-edge technology you can think of, and a few you probably didn’t expect.

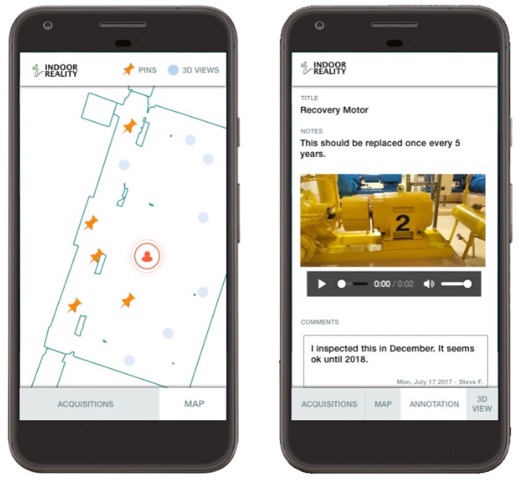

In-field asset tagging, machine learning

Indoor Reality’s tablet system allows users to tap a screen during the acquisition and leave notes. If you’re a facilities manager, for instance, you can tag the important pieces of equipment as you capture. A user viewing the data on the other end will see a thumbtack where you’ve tagged, and can open up a higher-res picture of the asset. They can look at the serial number of the equipment to check its maintenance schedule, or see if it needs replacement soon. This feature can also be used in construction for daily visual punch-listing and documentation, as well as closeout documentation and handover.

Machine learning recognizes objects like windows.

The system will also one day incorporate machine-learning algorithms that can be trained to find objects of interest, whether they be doors, windows, boilers, or computers. When this is released, the human can take one step even further back.

Multiple acquisitions

For larger spaces that require multiple capture sessions—or more than one user capturing at a time—the system has been designed to automatically align different acquisitions. Users viewing the data will see a seamless point cloud, as well as a seamless 360° view on the web.

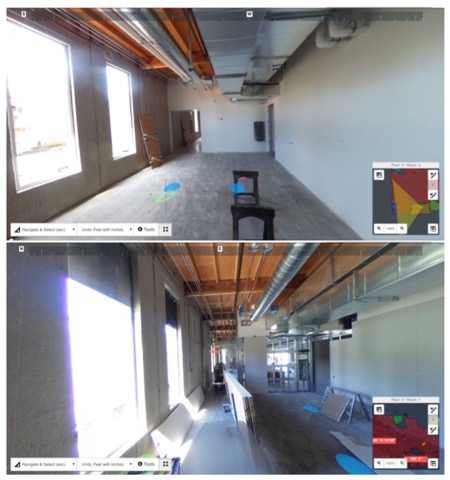

Time lapse

Indoor Reality’s automatic alignment comes in handy for another application: viewing change over time. Zakhor opens up the web viewer and looks at the data for July 15th. In the viewer, I see an unfinished wall and lots of exposed pipework and wires. She hits the time travel button and pulls up data in the same location for July 19th.

Even though the operator walked in a different path on each day, the system aligns the two data sets automatically. “Now I can see we are in the same spot, looking at the same wall,” Zakhor says. “All the pipes are invisible, there are sinks, there are file cabinets, there are refrigerators. This clearly shows the progress made.” This can be used, for instance, for more accurate billing in construction.

Indoor wayfinding

As you’re scanning a building, the software works in the background to fingerprint the building and build location databases. Later, these can be fed into Indoor Reality’s mobile app, which runs on consumer mobile phones and offers indoor wayfinding. Technicians can use it to find their way to a specific asset without any assistance.

GIS integration

Surprisingly, Indoor Reality data also integrates with ArcGIS. If you load one of the data sets into ESRI’s software, you can navigate to a building, explore the inside using the Indoor Reality panoramic viewer, and then easily exit back into your larger data set in ArcGIS.

What’s next

Indoor wayfinding with the Indoor Reality app.

Zakhor tells me that she envisions a future indoor asset capturing system that is even simpler: the user walks the space with their capture device, and the system automatically feeds back a huge amount of actionable information.

Zakhor elaborates: “We can keep track of the maintenance schedule, of all the equipment they have, we can be reminding them: In two months your boiler needs to be repaired, your pump has to be replaced. We will have a fantastic inventory of all the equipment on our customer’s site, and we can apply big data and machine learning algorithms to further improve the system. We can say that boilers from this manufacturer have an average lifetime of x because we already dealt with 50 customers who used that boiler.”

This bears out the value of Indoor Reality’s mission to make capture as affordable, as quick, and easy as possible. “Eventually,” she says in conclusion, “the data and the content gathered become quite valuable in terms of analytics and predictions of how owners need to operate and maintain their buildings.”