As part of a series of announcements at WWDC 2021 earlier this week, Apple debuted several new APIs, developer kits and other applications. But the one that caught our eye was Object Capture.

Apple already has the world’s largest augmented reality platform: the company claims there are more than a billion AR-enabled devices powered by ARKit, its development framework that facilitates the creation of AR experiences. The complementary RealityKit framework provides the animation, rendering and physics support for AR development.

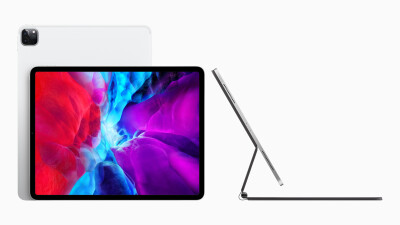

RealityKit 2, part of the announcements, introduced us to Object Capture, an API for macOS Monterey that allows for detailed 3D models to be created from photos shot on the iPhone, iPad or other cameras.

Rather than requiring developers to create 3D models for AR applications by hand, Object Capture uses photogrammetry to turn a series of 2D images into photorealistic 3D objects. In a demonstration during the announcement, a user takes a few pictures while standing around an object and then imports the resulting 3D model into Cinema 4D, a modeling and testing environment for gaming, entertainment and AR applications.
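For developers curious about what that looks like in practice, here is a minimal sketch of a macOS command-line tool that hands a folder of photos to RealityKit's `PhotogrammetrySession` and asks for a USDZ model. The folder and file paths are placeholders, and the `.medium` detail level is an arbitrary choice. Treat this as an illustration under those assumptions, not Apple's reference implementation. It needs macOS Monterey or later on supported hardware.

```swift
import Foundation
import RealityKit

// Placeholder paths (assumptions): a folder of photos and the model to write.
let inputFolder = URL(fileURLWithPath: "/path/to/photos", isDirectory: true)
let outputFile = URL(fileURLWithPath: "/path/to/model.usdz")

// Create a session over the image folder with the default configuration.
let session = try PhotogrammetrySession(
    input: inputFolder,
    configuration: PhotogrammetrySession.Configuration()
)

// Listen for progress, results and errors as the session reports them.
Task {
    do {
        for try await output in session.outputs {
            switch output {
            case .requestProgress(_, let fractionComplete):
                print("Progress: \(Int(fractionComplete * 100))%")
            case .requestComplete(_, let result):
                if case .modelFile(let url) = result {
                    print("Model written to \(url.path)")
                }
            case .requestError(_, let error):
                print("Request failed: \(error)")
            case .processingComplete:
                exit(0)
            default:
                break // Other messages are ignored in this sketch.
            }
        }
    } catch {
        print("Session error: \(error)")
        exit(1)
    }
}

// Ask for a single model file at medium detail, then keep the process alive.
try session.process(requests: [.modelFile(url: outputFile, detail: .medium)])
RunLoop.main.run()
```

The session only reads image files from disk, so in a sketch like this the photos could come from an iPhone, an iPad or any other camera.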

In its newsroom post, Apple says that companies like Wayfair and Etsy are using the technology behind Object Capture to create 3D product models, getting to a usable 3D object more quickly than with other workflows. Software developers like Maxon and Unity are also cited as working with Object Capture to create cinematic and game experiences.

What is not clear from what has been presented, however, is whether Object Capture’s API uses the lidar capabilities of some iOS devices. The reason given for adding lidar to these devices in the first place was to enhance AR applications, so its lack of mention here is perplexing. The API is also touted as being for any device running macOS Monterey (which will be available this fall), and its use is not necessarily limited to iPhones and iPads.

While some companies have already used the lidar capabilities to enhance their own mobile-device-based scanning offerings (like Matterport) or to develop their own apps (like SiteScape), Apple seemed, at first, to want to keep the conversation about AR, letting other developers make third-party applications that could be used for 3D scanning. By building its own 3D scanning capability, Apple could end up shutting out some of the third-party apps that have only just gotten off the ground.

For AR developers, however, this is a big step forward in usability and in the integration of their workflows. Combined with tools from Unity and Maxon, it will be interesting to see what people create with this new built-in scanning capability.