Augmented reality (AR) is a technology that superimposes a computer-generated image on a user’s view of the real world, providing a composite view. While the technology first gained wide public attention with the game Pokémon Go, augmented reality is still waiting for its big breakthrough moment. Leading tech companies such as Apple, Google, and Microsoft are all active in this space, but many problems remain to be solved before AR technology becomes a commodity.

The biggest challenge is creating the infrastructure that underpins all AR applications: the 3D models of indoor and outdoor spaces that a mobile device uses as a reference to create an AR user experience. ARKit and ARCore, the technologies from Apple and Google, respectively, attempt to create these models on the fly, and exclusively on the device itself.

6D.ai CEO Matt Miesnieks, however, argues that a truly engaging AR experience on a mobile device requires off-device data infrastructure. If all the infrastructure is stored on the device itself, he asks, “how do other people on other types of AR devices join us & communicate with us in AR? How do our apps work in areas bigger than our living room? How do our apps understand & interact with the world? How can we leave content for other people to find & use? To deliver these capabilities we need cloud-based software infrastructure for AR.”

Miesnieks’ company has named this infrastructure the “AR Cloud,” which it describes as “a machine-readable 1:1 scale model of the real world. Our AR devices are the real-time interface to this parallel virtual world which is perfectly overlaid onto the physical world.”

The term “AR Cloud” first appeared in a September 2017 blog post by industry expert Ori Inbar, which describes how both startups and leading tech companies can establish a foundation for future AR applications. In April 2018, Inbar noted that the term had stuck and that investment, community building, and events around the AR Cloud had taken off, with many startups eager to tackle the problems that come with developing this infrastructure.

6D.ai

6D.ai, a leader among these startups, emerged from Oxford University’s Active Vision Lab. The company name refers to the six degrees of freedom of a rigid body in 3D space (forward/back, up/down, left/right, yaw, pitch, roll). The second part of the name, AI, refers to future AI neural networks that will help developers’ applications understand the world in 3D, using the 3D data captured with the API.
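The six degrees of freedom are the six numbers needed to place a rigid body, such as a phone, in 3D space: three translations and three rotations. As a minimal sketch (this is not 6D.ai’s API; the `Pose6DoF` class and the yaw-pitch-roll composition order are assumptions chosen for illustration, and real SDKs use their own conventions), a pose can be stored as those six values and used to map a point from the device’s own frame into world coordinates:

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]
Mat3 = list[list[float]]


def _matmul(a: Mat3, b: Mat3) -> Mat3:
    """Multiply two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


@dataclass(frozen=True)
class Pose6DoF:
    """Illustrative rigid-body pose: three translations plus three rotations."""
    x: float = 0.0      # left/right
    y: float = 0.0      # up/down
    z: float = 0.0      # forward/back
    yaw: float = 0.0    # rotation about the vertical (y) axis, in radians
    pitch: float = 0.0  # rotation about the lateral (x) axis, in radians
    roll: float = 0.0   # rotation about the forward (z) axis, in radians

    def rotation(self) -> Mat3:
        """Rotation matrix composed as R = R_yaw @ R_pitch @ R_roll (assumed convention)."""
        cy, sy = math.cos(self.yaw), math.sin(self.yaw)
        cp, sp = math.cos(self.pitch), math.sin(self.pitch)
        cr, sr = math.cos(self.roll), math.sin(self.roll)
        r_yaw = [[cy, 0.0, sy], [0.0, 1.0, 0.0], [-sy, 0.0, cy]]
        r_pitch = [[1.0, 0.0, 0.0], [0.0, cp, -sp], [0.0, sp, cp]]
        r_roll = [[cr, -sr, 0.0], [sr, cr, 0.0], [0.0, 0.0, 1.0]]
        return _matmul(_matmul(r_yaw, r_pitch), r_roll)

    def to_world(self, p: Vec3) -> Vec3:
        """Map a point from the body's local frame into world coordinates."""
        r = self.rotation()
        rotated = [sum(r[i][k] * p[k] for k in range(3)) for i in range(3)]
        return (rotated[0] + self.x, rotated[1] + self.y, rotated[2] + self.z)


# A phone 1 m right, 2 m up, 3 m forward, turned 90 degrees about the vertical axis:
phone = Pose6DoF(x=1.0, y=2.0, z=3.0, yaw=math.pi / 2)
print(phone.to_world((0.0, 0.0, 1.0)))  # approximately (2.0, 2.0, 3.0)
```

A point one metre in front of the turned phone ends up one metre to its side in world coordinates, which is the kind of bookkeeping an AR device does continuously as it tracks its own pose.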

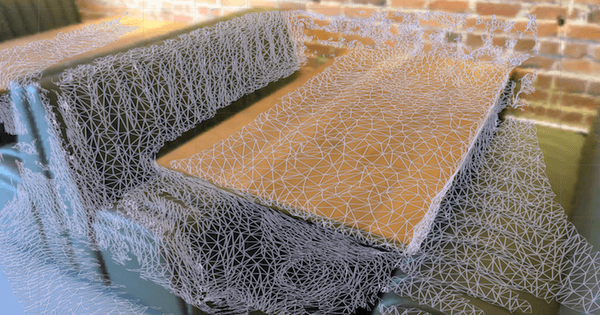

The company offers developers the 6D.ai API, which uses a smartphone’s standard built-in camera to build a real-time, three-dimensional semantic map of the world. Most data processing happens on-device in real time, while cloud capabilities let the device contribute to a worldwide, crowd-sourced 3D model and read world-scale data from that same model.

The current beta program, rolled out in April of this year, supports only the iPhone X and iPhone 8. In a blog post, Miesnieks explains why he initially chose Apple’s AR platform over Google’s. For the general public launch of the SDK, support will be expanded to all ARKit and ARCore devices.

The API offers a number of powerful features required for a smooth AR user experience: persistence, multiplayer, occlusion, and physics.

- Persistence means that AR objects stay in the real world where you’ve left them. In effect, it offers a save function: users can move virtual objects around a space (for example, virtually decorating a new house), and the objects’ real-world locations are stored in a database until the next session.

- Multiplayer means that multiple users share a single worldview: all users see exactly the same real-time AR representation, without the complicated calibration or syncing procedures that currently make for a poor AR user experience.

- Occlusion refers to smooth blending between AR and the real world, namely when physical objects block the user’s view of virtual objects.

- Physics refers to the interaction of virtual assets with the real world, such as balls bouncing on a floor or rolling off a table.
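Persistence and multiplayer both come down to storing virtual objects in a coordinate frame that outlives a single session and is shared between devices. The minimal sketch that follows is not 6D.ai’s SDK: `Anchor` and `AnchorStore` are hypothetical names, and an in-memory dictionary serialized to JSON stands in for the cloud database. It assumes every device has already localized itself against the same world frame, which is the genuinely hard part the AR Cloud is meant to solve.

```python
import json
from dataclasses import asdict, dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Anchor:
    """A virtual object pinned to a position in a shared world frame (hypothetical)."""
    anchor_id: str
    label: str
    x: float
    y: float
    z: float


class AnchorStore:
    """Hypothetical stand-in for a cloud database of persistent anchors."""

    def __init__(self) -> None:
        self._anchors: Dict[str, Anchor] = {}

    def save(self, anchor: Anchor) -> None:
        """Store (or overwrite) an anchor's world position."""
        self._anchors[anchor.anchor_id] = anchor

    def get(self, anchor_id: str) -> Optional[Anchor]:
        """Look up an anchor by id; returns None if it was never saved."""
        return self._anchors.get(anchor_id)

    def to_json(self) -> str:
        """Serialize all anchors so they survive the end of a session."""
        return json.dumps([asdict(a) for a in self._anchors.values()])

    @classmethod
    def from_json(cls, data: str) -> "AnchorStore":
        """Rebuild a store from a snapshot produced by to_json()."""
        store = cls()
        for item in json.loads(data):
            store.save(Anchor(**item))
        return store


# Session 1: a user places a virtual sofa while decorating a room.
store = AnchorStore()
store.save(Anchor("sofa-1", "sofa", 1.5, 0.0, -2.0))
snapshot = store.to_json()

# Session 2, or a second user reading the same snapshot: the sofa is where it was left.
restored = AnchorStore.from_json(snapshot)
print(restored.get("sofa-1"))
```

Because positions are stored in the shared world frame rather than relative to any one phone, the same record serves both a returning user (persistence) and a second user in the room (multiplayer).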

The SDK is still in beta; 6D.ai opened the beta program in mid-October.

A recent example of an app that uses the company’s SDK comes from Laan Labs, a development group focused on audio and visual applications. Last September, the group demoed a 3D scanning app online. With the app, users scan an object simply by moving around it; the app then generates highly detailed models from the captured data, which users can share with each other.