By this point, you probably know a thing or two about DARPA. It is the government arm on the forefront of developing technologies like solid-state LiDAR and war gear like robot exoskeletons. Its latest venture is a doozy: a system that generates real-time virtual reality from two camera feeds.

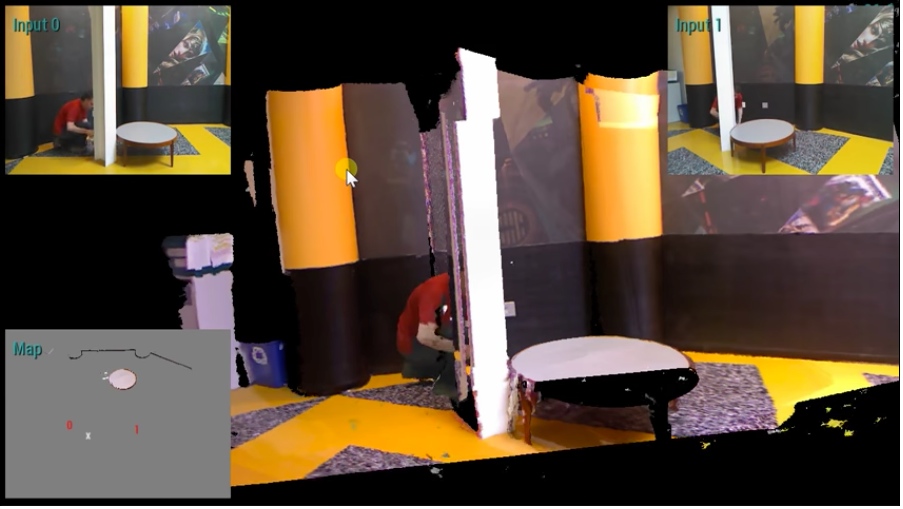

The “Virtual Eye” system uses 3D imaging software, NVIDIA graphics processing units, and any two cameras to generate a detailed, immersive view of potentially dangerous environments. It is intended to allow soldiers, firefighters, and search and rescue workers to “walk around a room or other enclosed area — virtually — before entering, enabling them to scope out the situation while avoiding potential dangers.”

“The question for us is, can we do more with the information we have?” says Trung Tran, the program manager leading Virtual Eye’s development for DARPA’s Microsystems Technology Office. “Can we extract more information from the cameras we’re using today?”

How it Works

Workers or soldiers simply take two cameras of any kind (the system is “camera agnostic”) and send them into the dangerous room on drones or robots, which will “wield or position” the cameras in two separate locations.

Here’s the amazing part: Virtual Eye’s software “fuses the separate images into a 3D virtual reality view in real time by extrapolating the data needed to fill in any blanks.” Not bad for a system running off of commercially available graphics cards.

How does it fuse the information? Tran explains that it creates “a 3D image by looking at the differences between two images, understanding the differences and fusing them together.” Whatever that means. I don’t think we should expect a particularly in-depth explanation to be forthcoming from DARPA.
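DARPA has not published how Virtual Eye does this, so the following is not its method. It is only a minimal sketch of the textbook stereo idea that Tran's description resembles: an object appears shifted between two side-by-side camera views, and the size of that shift (the disparity) indicates how far away it is. The function names, the toy pixel rows, the focal length, and the camera spacing are all assumptions made up for illustration.

```python
def scanline_disparity(left, right, window=1, max_disp=4):
    """For each pixel in one row of the left image, find the horizontal shift
    (disparity) that best matches the same row of the right image, scored by
    the sum of absolute differences over a small window."""
    n = len(left)
    disparities = []
    for x in range(n):
        best_d, best_cost = 0, float("inf")
        for d in range(max_disp + 1):
            cost = 0
            for k in range(-window, window + 1):
                xl = min(max(x + k, 0), n - 1)      # clamp to the row edges
                xr = min(max(x + k - d, 0), n - 1)
                cost += abs(left[xl] - right[xr])
            if cost < best_cost:                    # keep the smallest shift on ties
                best_d, best_cost = d, cost
        disparities.append(best_d)
    return disparities


def depth_from_disparity(disparity, focal_px, baseline_m):
    """Depth = focal length * camera spacing / disparity (rectified cameras assumed)."""
    if disparity <= 0:
        return float("inf")  # no measurable shift: too far away or no usable match
    return focal_px * baseline_m / disparity


# Hypothetical rows of pixel brightness: the bright feature sits 2 pixels
# further left in the right camera's view.
left_row = [0, 0, 0, 0, 9, 5, 0, 0, 0, 0]
right_row = [0, 0, 9, 5, 0, 0, 0, 0, 0, 0]

shifts = scanline_disparity(left_row, right_row)
# Assumed camera values: 800-pixel focal length, cameras 0.5 m apart.
feature_depth_m = depth_from_disparity(shifts[4], focal_px=800, baseline_m=0.5)
```

In this toy row, only the bright feature produces a trustworthy shift. The flat, featureless pixels around it match equally well at several offsets, which is one plausible reason a system like this would have to extrapolate to "fill in any blanks."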

What it can Do

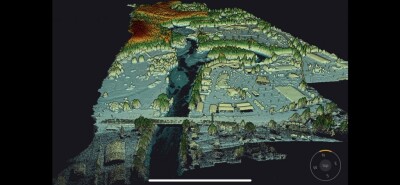

A system that fills in the blanks in its vision when creating a 3D VR experience is not ideal for any application that requires accurate measurements. However, real-time VR generation could add another dimension to remote collaboration. If, in addition to viewing models, photographs, and videos, we were able to offer someone a VR feed generated by two drones flying through a site, that could be a very powerful communication tool.

What’s more, the technology is going to start coordinating images from more than two cameras. Tran says that by early next year he will demo a version of his software that coordinates up to five cameras.

Beyond the benefits that this will have for remote collaboration, the obvious application is sports. Tran says that the system may someday enable 3D broadcasting of sports with a minimal number of cameras. Ever wanted to watch a football game on your Oculus Rift? Your day is coming.