URC Ventures’ Stockpile Reports is notable for a number of reasons, but chief among them is that it pairs a smartphone app for accurate in-field measurement of stockpiles with a full-featured system for managing and auditing those stockpiles over time. It’s a rare example of mobile 3D technology that has made it all the way from a “what if?” to a usable enterprise application.

With the release of Structure Reports, the company is set to do it again. CEO David Boardman describes Structure Reports as an iPhone framework and web services backend that combines sensor data from Apple’s newly available ARKit with URC Ventures’ existing 3D reconstruction technology. The product (and Boardman warns me that its name may change in the future) will enable other companies to develop applications that model a building in 3D using nothing more than an iPhone.

If you’ve followed smartphone technology with interest over the past few years (and who hasn’t?), you may wonder why it took ARKit to make this possible, especially for a company so deeply involved with computer vision.

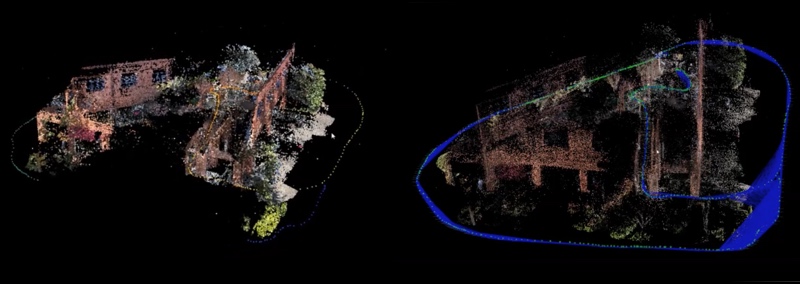

“Historical attempts to build similar solutions,” says URC, “have not made it to the market because of failures in 3D reconstruction algorithms to handle extreme camera rotations, textureless surfaces, and repetitive structures.” In other words, existing algorithms couldn’t cope with the kind of movement that users of a handheld device would throw at them.

There were other problems as well. “An untrained user taking video of an indoor scene will typically collect video with many blurry frames caused by fast camera rotations and the long image exposure times needed to handle weak indoor lighting,” says chief scientist Dr. Jared Heinly. “These blurry frames will cause breaks in vision-only 3D reconstruction pipelines.”

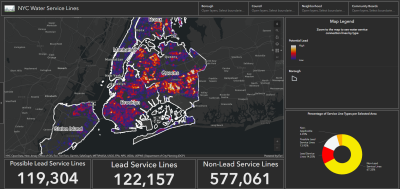

Left: typical faulty reconstruction without ARKit corrections. Right: reconstruction with ARKit pose corrections.

By using sensor data from Apple’s new ARKit, the URC Ventures processing pipeline can now cope with your shaky hands and poor videography skills. But how does it work? ARKit uses visual-inertial odometry, which The Verge helpfully explains like this: ARKit pulls data from the iPhone’s camera and motion sensors to identify points in the environment and track them as you move the device.

“By fusing inertial navigation based pose estimates into our solution,” says URC’s Dr. Heinly, putting the discussion back in the realm of the technical, “we create complete 3D models even in these challenging circumstances.”
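Neither URC nor the article spells out how that fusion works. As a rough illustration only, the sketch below shows one generic idea: combine a vision-based and an inertial camera-position estimate by inverse-variance weighting, and fall back to the inertial estimate alone when a blurry frame leaves the vision pipeline with nothing, so the camera track never breaks. The `Estimate` type, `fuse`, and `fuse_track` are hypothetical names, the single isotropic variance is a simplifying assumption, and a real system would more likely use a Kalman filter or a joint optimization over full 6-DoF poses.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Estimate:
    position: Vec3   # camera position in metres (hypothetical representation)
    variance: float  # single isotropic uncertainty (simplifying assumption)


def fuse(vision: Optional[Estimate], inertial: Estimate) -> Estimate:
    """Inverse-variance weighted fusion of two independent position estimates."""
    if vision is None:
        # Vision tracking failed on this frame (e.g. motion blur):
        # keep the track alive with the inertial estimate alone.
        return inertial
    w_v = 1.0 / vision.variance
    w_i = 1.0 / inertial.variance
    total = w_v + w_i
    position = tuple(
        (w_v * v + w_i * i) / total
        for v, i in zip(vision.position, inertial.position)
    )
    # The fused estimate is more certain than either input on its own.
    return Estimate(position, 1.0 / total)


def fuse_track(
    vision_track: Sequence[Optional[Estimate]],
    inertial_track: Sequence[Estimate],
) -> List[Estimate]:
    """Fuse per-frame estimates; every frame yields a pose, even blurry ones."""
    return [fuse(v, i) for v, i in zip(vision_track, inertial_track)]
```

For example, fusing a vision estimate at x = 1 m with an inertial estimate at x = 3 m, both with variance 1, gives x = 2 m with variance 0.5; passing `None` for a blurred frame simply returns the inertial estimate.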

Using ARKit brings other benefits, too. URC says Structure Reports can offer absolute-scale measurements without control points, and that it significantly reduces drift in the results. That means it can “estimate the volume of objects even more accurately,” says URC’s scientific advisor Dr. Jan-Michael Frahm.
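The absolute-scale claim has a simple geometric core. A camera-only reconstruction is determined only up to an unknown scale factor, whereas ARKit reports camera positions in metres. Assuming you have, for the same frames, camera centres from the reconstruction and metric camera positions from the device, one generic way to recover scale is a least-squares fit of a single factor over consecutive camera baselines: s = sum(d_recon * d_metric) / sum(d_recon ** 2). Because lengths scale by s, volumes scale by s cubed, so a 5% scale error becomes roughly a 16% volume error. This is an illustrative sketch, not URC’s published method; `estimate_scale` and `metric_volume` are hypothetical names.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def estimate_scale(recon_centers: Sequence[Point], metric_centers: Sequence[Point]) -> float:
    """Least-squares scale s minimising sum((s * d_recon - d_metric) ** 2)
    over the distances between consecutive camera centres."""
    if len(recon_centers) != len(metric_centers) or len(recon_centers) < 2:
        raise ValueError("need two matched sequences of at least two camera centres")
    num = 0.0
    den = 0.0
    for k in range(1, len(recon_centers)):
        d_recon = math.dist(recon_centers[k - 1], recon_centers[k])
        d_metric = math.dist(metric_centers[k - 1], metric_centers[k])
        num += d_recon * d_metric
        den += d_recon * d_recon
    if den == 0.0:
        raise ValueError("camera did not move in the reconstruction; scale is unobservable")
    return num / den


def metric_volume(recon_volume: float, scale: float) -> float:
    """Convert a volume in reconstruction units to cubic metres (volumes scale by s**3)."""
    return recon_volume * scale ** 3
```

With reconstruction baselines of 1 and 2 units and matching metric baselines of 2.5 m and 5 m, the fitted scale is 2.5, so a reconstructed volume of 8 cubic units corresponds to 8 × 2.5³ = 125 m³.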

CEO David Boardman ties it all up with a neat bow: “It is now possible to have an average person with limited training walk in and around building structures to successfully model its structure.” He says URC is investing in developing this use case and is looking for partners to bring the solution to market.

For a demonstration, see below.