Laser scanning has been the focus of much of our research to date. But terrestrial laser scanners are just one kind of sensor for capturing existing-conditions data. Ground-penetrating radar, airborne LIDAR, close- and long-range photogrammetry, digital photography, GPS, RTK, laser bathymetry, multi-beam sonar, infrared and multi-spectral sensing, even electromagnetic and RFID all offer valuable, in many cases unique, information to support engineering, construction, operation and management of physical assets. We think multi-sensor integration – long a subject of intense investigation by defense and robotics researchers – is a competence that savvy surveyors, contractors and some owners are already working to acquire.

Multiple sensors – what’s the need?

Why the need for data from more than one kind of sensor? Maybe the simplest reason is that with just one type of device, “it can be difficult or even impossible to collect measurements on all surfaces of a structure,” Albert Iavarone, product manager in Optech’s Laser Imaging Division, told attendees at SPAR 2004. (While far from being the only solution provider aware of multi-sensor integration, Optech manufactures a breadth of sensor products that give it considerable firsthand knowledge of the range of data possible.) Because laser scanners work on line-of-sight principles, laser shadowing can be a significant problem. For example, terrestrial laser scanning readily captures the sides of a building but not the top, while airborne LIDAR captures the tops of buildings but little if any data about their sides. “Since LIDAR sensors are available on several different platforms, sensor fusion can provide the means to generate complete, accurate models,” Iavarone says. “Airborne laser scanners collect accurate geo-referenced topographic data of large areas very quickly, while tripod-mounted laser scanners generate very dense, geometrically accurate data. Used in tandem, these scanners make it possible to generate complete models that are geometrically accurate on all surfaces.”
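A back-face test makes the line-of-sight argument concrete: a sensor can only record a surface whose normal points toward it. The sketch below is a simplified illustration that assumes a hypothetical 10 m tall building, a ground-level tripod scanner and an aircraft overhead. It ignores occlusion by other objects and sensor range limits, and it is not taken from any vendor's software.

```python
import numpy as np

def incidence_angle_deg(point, normal, sensor):
    """Angle (degrees) between a surface normal and the line of sight to a sensor.

    Returns None if the surface faces away from the sensor (no line of sight).
    Occlusion by other objects and sensor range limits are ignored.
    """
    point, normal, sensor = (np.asarray(v, dtype=float) for v in (point, normal, sensor))
    to_sensor = sensor - point
    cos_theta = np.dot(normal, to_sensor) / (np.linalg.norm(normal) * np.linalg.norm(to_sensor))
    cos_theta = float(np.clip(cos_theta, -1.0, 1.0))
    if cos_theta <= 0.0:
        return None  # back-facing surface: invisible to this sensor
    return float(np.degrees(np.arccos(cos_theta)))

# Hypothetical 20 m x 20 m building, 10 m tall, centred on the origin.
roof = ((0.0, 0.0, 10.0), (0.0, 0.0, 1.0))  # point on the roof, upward normal
wall = ((10.0, 0.0, 5.0), (1.0, 0.0, 0.0))  # point on the east wall, eastward normal
tripod = (30.0, 0.0, 1.5)                   # ground-based scanner 20 m east of the wall
aircraft = (0.0, 0.0, 1000.0)               # airborne sensor overhead

for name, sensor in (("tripod", tripod), ("aircraft", aircraft)):
    print(name, "roof:", incidence_angle_deg(*roof, sensor),
          "wall:", incidence_angle_deg(*wall, sensor))
```

In this toy scene the tripod sees the wall at roughly 10° incidence but cannot see the roof. The aircraft sees the roof but not the wall, which is the gap that combining the two platforms is meant to close.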

Data integration challenges and solutions

Of course, the question is how to accurately, efficiently integrate data from different kinds of sensor. To combine airborne and terrestrial LIDAR data, Iavarone describes two options: geo-referencing and common-feature alignment. Geo-referencing data from a single type of sensor is not always straightforward, given the inherent difficulties of establishing – and agreeing on – dimensional control in a capital project. When two different kinds of sensor are used, the difficulty is compounded by the likelihood that the datasets will have different positional accuracies. For example, with airborne LIDAR data gathered using kinematic GPS for positioning, “vertical accuracy is typically on the order of 15cm,” according to Iavarone. However, “when you geo-reference tripod-mounted laser scan data, you’ll do that with either static GPS or typical surveying techniques, and you’ll end up with accuracy on the order of 1cm in most cases. That being said, you may have a gap between the airborne and tripod-mounted data.”
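The airborne error dominates that gap, which a rough error-propagation check shows. This assumes the two vertical errors are independent and random; systematic GPS/INS offsets behave differently. Combining the figures Iavarone quotes:

```latex
\sigma_{\Delta} = \sqrt{\sigma_{\mathrm{air}}^{2} + \sigma_{\mathrm{tripod}}^{2}}
               = \sqrt{(15\,\mathrm{cm})^{2} + (1\,\mathrm{cm})^{2}}
               = \sqrt{226}\,\mathrm{cm} \approx 15.0\,\mathrm{cm}
```

Under that assumption, the tripod data contributes almost nothing to the expected discrepancy between the two datasets.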

One way to get around this, Iavarone reports, is “by using the common-feature method of alignment,” a capability of point-cloud management software such as InnovMetric’s PolyWorks, which Optech offers with ILRIS-3D. In one example Iavarone cited, a dam was scanned with a tripod-mounted laser scanner, and the scan data was aligned to an airborne LIDAR dataset. “As long as you have some common overlap between the airborne and tripod-mounted data sets, you can identify common features, then do an automatic feature-based alignment.”

“The benefit of this is that you don’t have to geo-reference your tripod-mounted laser data,” Iavarone continues. “If you snap to the airborne data, you’re in essence going to be geo-referencing that data, because you’re bringing the tripod data into a real-world coordinate system.”
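The source does not say which algorithm PolyWorks uses for feature-based alignment. As a generic illustration of the step that follows once common features have been matched, the sketch below uses the standard Kabsch (SVD) least-squares solution for a rigid rotation and translation. The feature coordinates and the 30° transform are made up. Feature detection, matching and any iterative refinement are left out.

```python
import numpy as np

def rigid_align(source, target):
    """Rotation R and translation t minimising sum ||R @ s_i + t - q_i||^2.

    source, target: (N, 3) arrays of already-matched feature points
    (N >= 3, not all collinear). Kabsch/Horn SVD solution.
    """
    src = np.asarray(source, dtype=float)
    tgt = np.asarray(target, dtype=float)
    src_c, tgt_c = src.mean(axis=0), tgt.mean(axis=0)
    H = (src - src_c).T @ (tgt - tgt_c)       # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))    # -1 would mean a reflection; correct it
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_c - R @ src_c
    return R, t

# Hypothetical matched features in tripod-scanner (local) coordinates ...
local = np.array([[0, 0, 0], [10, 0, 0], [0, 8, 0], [3, 4, 5]], dtype=float)
# ... and the same features in the geo-referenced airborne data (made-up transform).
a = np.radians(30.0)
R_true = np.array([[np.cos(a), -np.sin(a), 0.0],
                   [np.sin(a),  np.cos(a), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([500000.0, 4200000.0, 120.0])  # easting, northing, elevation (m)
world = local @ R_true.T + t_true

R, t = rigid_align(local, world)
tripod_in_world = local @ R.T + t  # the whole tripod cloud is transformed the same way
```

Applying the recovered `R` and `t` to every tripod point is what "snapping" the tripod data into the airborne coordinate system amounts to in this simplified picture.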

On the other hand, given that the GPS/INS-based positioning of airborne LIDAR can yield positional accuracies in the range of 15cm, “you could be degrading the accuracy of the tripod-mounted laser data set.” By comparison, “when you geo-reference tripod-mounted laser data, you’ll get accuracies on the order of 1cm. You can use this as a control surface. We have clients that will use ILRIS-3D to scan a runway, a parking lot or something similar. They now have a control surface that’s much more accurate than what they can get from airborne data, so they can register and correct the airborne data to the tripod-mounted laser data set.”
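The sketch below shows one way to use a tripod-scanned surface as control. It assumes the simplest possible error model: a single vertical offset between the airborne data and a planar control surface such as a runway. The function names, the synthetic runway and the 12 cm offset are hypothetical. In practice only airborne points that fall on the control surface would be used, and production workflows may solve for more parameters (tilts, horizontal shifts, per-flight-line corrections).

```python
import numpy as np

def fit_plane(points):
    """Least-squares plane z = a*x + b*y + c through (N, 3) control points."""
    pts = np.asarray(points, dtype=float)
    A = np.column_stack([pts[:, 0], pts[:, 1], np.ones(len(pts))])
    coeffs, *_ = np.linalg.lstsq(A, pts[:, 2], rcond=None)
    return coeffs  # array([a, b, c])

def vertical_bias(airborne_pts, plane):
    """Median height of airborne points above the control plane (robust to outliers)."""
    pts = np.asarray(airborne_pts, dtype=float)
    a, b, c = plane
    return float(np.median(pts[:, 2] - (a * pts[:, 0] + b * pts[:, 1] + c)))

def correct_airborne(airborne_pts, bias):
    """Return a copy of the airborne points shifted vertically to remove the bias."""
    corrected = np.array(airborne_pts, dtype=float)
    corrected[:, 2] -= bias
    return corrected

# Synthetic runway with a 0.5% slope: z = 0.005 * x + 30.0 (all values made up).
rng = np.random.default_rng(0)
xy_tri = rng.uniform(0, 200, size=(500, 2))
tri = np.column_stack([xy_tri, 0.005 * xy_tri[:, 0] + 30.0 + rng.normal(0, 0.01, 500)])
xy_air = rng.uniform(0, 200, size=(2000, 2))
air = np.column_stack([xy_air, 0.005 * xy_air[:, 0] + 30.12 + rng.normal(0, 0.15, 2000)])

plane = fit_plane(tri)             # tripod data as the ~1 cm control surface
bias = vertical_bias(air, plane)   # should come out near the simulated 0.12 m
air_corrected = correct_airborne(air, bias)
```

The median is used rather than the mean so that stray airborne returns (vehicles, lights, vegetation at the runway edge) pull the estimate less.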

What’s the value of this? Firms using airborne LIDAR for very large-scale surveys such as “a city or 150 miles of highway corridor can now use a tripod-mounted laser scanner to scan bridges, overpasses and other critical areas. They’ll get resolution beyond what they can get with the airborne laser,” as well as more complete coverage, free of laser shadows and gaps. “It’s a differentiating feature in what they can provide.”

Mapping multi-spectral imagery to laser scan data

Applying multi-spectral imagery – textures, RGB data, infrared data, thermal characteristics – to 3D scan data is a pragmatic example of multi-sensor integration. It can make laser scan images much easier to interpret visually and add more layers of analytic data to work with. [For example, see “Laser Scanning Cuts Survey Time by 2/3 in San Diego Airport Project” for Riegl laser-scan images with true color.]

A widely applicable example is texture-mapping to a solid model or a point cloud. To do this, “there are two options,” according to Iavarone. “You either add the texture characteristics directly to the point cloud, or you add the texture characteristics to a processed solid model.” Software products such as Optech’s TexCapture are available to aid this process. “In the case of mapping data to the point cloud, you can do this automatically” if the laser scanner has a digital camera attached or integrated, he reports. “The beauty of this method is the fact that, as long as you can control the relationship between the camera and the laser optical center, and providing you can calibrate both systems, you can pretty much [map textures to point clouds] automatically.”
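The automatic method rests on the calibrated camera-to-laser relationship Iavarone describes. The sketch below illustrates the idea under stated assumptions: an ideal pinhole camera with a known intrinsic matrix `K`, and a known scanner-to-camera rotation `R` and translation `t`. It ignores lens distortion and occlusion, samples the nearest pixel, and is not TexCapture's implementation. All numbers are hypothetical.

```python
import numpy as np

def colorize_points(points_scanner, image, K, R, t):
    """Assign an RGB value to each laser point by projecting it into a calibrated photo.

    points_scanner: (N, 3) points in the scanner frame; image: (H, W, 3) array.
    K: 3x3 camera intrinsics; R, t: scanner-to-camera rotation and translation.
    Returns (N, 3) colours and a boolean mask of points that landed inside the photo.
    Simplifications: no lens distortion, no occlusion test, nearest-pixel sampling.
    """
    pts_cam = np.asarray(points_scanner, dtype=float) @ R.T + t  # scanner -> camera frame
    h, w = image.shape[:2]
    colors = np.zeros((len(pts_cam), 3), dtype=image.dtype)
    valid = pts_cam[:, 2] > 0                                    # only points in front of the camera
    uvw = pts_cam[valid] @ K.T                                   # pinhole projection
    cols = np.round(uvw[:, 0] / uvw[:, 2]).astype(int)
    rows = np.round(uvw[:, 1] / uvw[:, 2]).astype(int)
    inside = (cols >= 0) & (cols < w) & (rows >= 0) & (rows < h)
    idx = np.flatnonzero(valid)[inside]
    colors[idx] = image[rows[inside], cols[inside]]
    mask = np.zeros(len(pts_cam), dtype=bool)
    mask[idx] = True
    return colors, mask

# Hypothetical 640 x 480 camera, 10 cm offset from the laser's optical center.
K = np.array([[1000.0, 0.0, 320.0], [0.0, 1000.0, 240.0], [0.0, 0.0, 1.0]])
R, t = np.eye(3), np.array([0.1, 0.0, 0.0])
image = np.zeros((480, 640, 3), dtype=np.uint8)
image[240, 330] = (255, 0, 0)
points = np.array([[0.0, 0.0, 10.0],     # projects onto the red pixel
                   [0.0, 0.0, -5.0],     # behind the camera
                   [100.0, 0.0, 10.0]])  # outside the photo
colors, mask = colorize_points(points, image, K, R, t)
```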

With this method, one restriction to be aware of is that “you’re limited by the resolution of the laser data,” according to Iavarone. “If the laser data set is higher-resolution than the photo, that’s great – you’ll maintain all your texture resolution. But if you take a low-resolution scan and apply a high-resolution image to it, you’ll lower the resolution of the image.” Most texture-mapping software will perform “a point-to-point mapping, and in the case where you have multiple image points between your data measurements, they’re going to be lost.”
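Under a point-to-point mapping, the resolution loss is simple arithmetic. The hypothetical helper below assumes the scan grid and the photo cover the same field of view and that each laser point keeps exactly one pixel value.

```python
def pixels_retained(scan_cols, scan_rows, img_cols, img_rows):
    """Fraction of photo pixels that survive a point-to-point mapping.

    Assumes scan and photo cover the same field of view and each laser point
    receives exactly one pixel value; pixels falling between points are discarded.
    """
    points = scan_cols * scan_rows
    pixels = img_cols * img_rows
    return min(1.0, points / pixels)

# Hypothetical: a 1000 x 800-point scan draped with a 4000 x 3200-pixel photo.
print(pixels_retained(1000, 800, 4000, 3200))  # 0.0625, i.e. 1 pixel in 16 survives
```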

“Texture mapping to a solid model sort of corrects that problem,” he continues. “However, it’s not automatic. You have to take your point cloud, generate a solid model, then apply your texture. So it does take some work.” One advantage, though, is that “you can map multiple images to a single triangle.” This is important because in a TIN model created from a point cloud, “the triangles are very large.” A single triangle may have 1000 to 5000 texturing characteristics mapped to it. “The beauty of that is we can generate solid models that are very low-resolution – 50,000 to 100,000 triangles – and apply high-resolution textures.” A further advantage of this method, according to Iavarone, is that the resulting model is “very light to carry, something you can bring into SolidWorks or a like program and use effectively, and it still has the appearance of being very high-resolution.”

Merging laser scans, digital photos, spectral data for geologic analysis and oil exploration

Multi-sensor integration is a focus of research at the University of Texas at Austin, where researchers are integrating point clouds, digital photographs and other sensor data to develop better techniques for geologic analysis. Geologist Jerome Bellian, a research scientist associate at the Bureau of Economic Geology in the Jackson School of Geosciences, has integrated airborne and tripod-mounted LIDAR with digital photography to better understand geologic features preserved within the rock record.

According to Bellian, “Our objective is to understand the [rock] geometries we can directly observe in outcrops, and project that knowledge onto what we cannot see in the subsurface. This helps us better understand how to predict complex reservoir systems in three dimensions, especially in areas with sparse subsurface data. Laser scanning helps us put the data in correct geometrical relationship, which is critical.” The group has owned an ILRIS-3D scanner since January 2002 and has used it to capture data in North America, South America, Asia, the Iberian Peninsula, the Arabian Peninsula and the British Isles.
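A LIDAR outcrop model can be turned into quantitative geometry by picking points on a bedding surface and fitting a plane, which gives its dip and dip direction. The sketch below illustrates that idea and is not RCRL's workflow. It assumes points in a local east-north-up frame, and the sample surface is synthetic.

```python
import numpy as np

def dip_and_dip_direction(points):
    """Fit a plane to (N, 3) points in a local east-north-up frame (x=E, y=N, z=up).

    Returns (dip, dip_direction) in degrees; dip direction is the azimuth of
    steepest descent, measured clockwise from north.
    """
    pts = np.asarray(points, dtype=float)
    _, _, Vt = np.linalg.svd(pts - pts.mean(axis=0))
    n = Vt[-1]                  # plane normal = direction of least variance
    if n[2] < 0:
        n = -n                  # use the upward-pointing normal
    dip = np.degrees(np.arccos(np.clip(n[2], -1.0, 1.0)))
    # The horizontal part of the upward normal points down-dip.
    dip_dir = np.degrees(np.arctan2(n[0], n[1])) % 360.0
    return float(dip), float(dip_dir)

# Hypothetical points picked on one bedding surface dipping 20 degrees toward the east.
xs, ys = np.meshgrid(np.linspace(0, 50, 11), np.linspace(0, 30, 7))
x, y = xs.ravel(), ys.ravel()
z = -np.tan(np.radians(20.0)) * x
dip, dip_dir = dip_and_dip_direction(np.column_stack([x, y, z]))
```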

Bellian works in the Reservoir Characterization Research Laboratory (RCRL) for mixed siliciclastic and carbonate studies, an industrial research consortium run by Dr. Charles Kerans and Jerry Lucia at the Bureau of Economic Geology and supported by major oil exploration and production companies, and by government grants. RCRL funded the ILRIS-3D purchase, which Bellian says made UT Austin the first university in the world to integrate Optech’s airborne and ground-based LIDAR for geological applications.

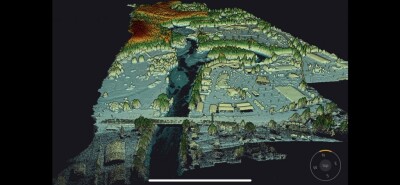

The RCRL recently constructed a 3D reservoir model from an outcrop in Victorio Canyon, TX. “Integration of the 3D laser data with rock-physics data enabled us to construct a synthetic seismic model of this outcrop to simulate what this canyon might look like to an exploration geoscientist in the subsurface,” reports Bellian. “Being able to have the answer exposed for you and capture the rock-type distributions with LIDAR saves hundreds of hours and increases our ability to understand complex geology quantitatively. It doesn’t just save time and money – it really offers a better product at the end of the day.” The color portion of the image was scanned from a Pentax medium-format print, then digitally draped over the 3D point cloud. The result is a dimensionally accurate 3D photograph that geologists can use to quantitatively interrogate the ordered layering of strata. [More in a future SparView.]

Hydrographic survey combined with laser scanning to document lock-and-dam facility

Another example is from Arc Surveying & Mapping, Inc., a hydrographic and topographic survey firm headquartered in Jacksonville, FL. In a project to document existing conditions at a lock-and-dam facility, the firm conducted a hydrographic swath survey to create a detailed 3D representation of the basin bottom. Above the water surface, laser scanning was used to provide an as-built record of the structure. The two data sets were then merged to create a unified digital record of the facility both above and below the waterline. [More in a future SparView.]
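The source does not describe how Arc Surveying merged the two datasets. The sketch below covers only the final step. It assumes both surveys have already been transformed to the same horizontal coordinate system and vertical datum, and it uses the water-surface elevation at survey time as the dividing line. The function and sample values are hypothetical.

```python
import numpy as np

def merge_above_below(laser_pts, sonar_pts, water_level):
    """Combine laser points above the water surface with sonar points below it.

    Both inputs are (N, 3) arrays in the same coordinate system and vertical datum;
    water_level is the water-surface elevation at survey time in that datum.
    Returns the merged points and a source label per point (0 = laser, 1 = sonar).
    """
    laser = np.asarray(laser_pts, dtype=float)
    sonar = np.asarray(sonar_pts, dtype=float)
    laser = laser[laser[:, 2] > water_level]    # keep laser returns above the water surface
    sonar = sonar[sonar[:, 2] <= water_level]   # keep soundings at or below it
    merged = np.vstack([laser, sonar])
    source = np.concatenate([np.zeros(len(laser), dtype=int), np.ones(len(sonar), dtype=int)])
    return merged, source

# Hypothetical sample: water surface at 10.0 m in the shared datum.
laser = [[0.0, 0.0, 15.0], [1.0, 0.0, 10.0], [2.0, 0.0, 9.8]]  # last two: water-surface returns
sonar = [[0.0, 0.0, 2.0], [1.0, 0.0, 10.5]]                    # last one: spurious above water
merged, source = merge_above_below(laser, sonar, water_level=10.0)
```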

Futures

What trends does Optech’s Iavarone see ahead? “Architecture is one of the main areas where I think RGB is going to become absolutely essential in any LIDAR data set,” he says. With airborne LIDAR, “we’re seeing not just RGB but infrared and multi-spectral imagery becoming a standard – it’s becoming a requirement that the service provider deliver not just an elevation model, but one that includes these other types of data as well.” For the process and power industries, he expects that thermal and other multi-spectral imagery will increasingly be captured and integrated with laser scan data. “That’s already the direction in the airborne market,” he reports, “and I have a feeling the tripod-mounted laser market will probably eventually follow in that same path.”

Indeed, multi-sensor integration “will be much more than just a visual aid,” Iavarone believes. “Algorithms will be developed that can use all these classes of data in parallel – for example, normalizing intensities across multiple ranges and angles, and using this data for classification purposes.” Such classification algorithms could be used to determine that a group of points with a given intensity and range represents vegetation, for example, while another set of values represents asphalt, “all for purposes of automated or semi-automated data processing.”
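The sketch below shows the kind of processing Iavarone describes. It assumes a simple first-order model (Lambertian reflection with inverse-square range fall-off) and a near-infrared laser, at which vegetation generally returns more strongly than asphalt. The reference range, clipping value and class thresholds are placeholders, not calibrated values, and real intensity calibration is sensor-specific.

```python
import numpy as np

def normalize_intensity(intensity, range_m, incidence_deg, ref_range_m=100.0, min_cos=0.1):
    """Scale raw intensity to a reference range and to normal incidence.

    Assumes returned power falls off as cos(incidence) / range**2.
    min_cos (a placeholder) caps the correction at grazing angles, where it would blow up.
    """
    cos_inc = np.clip(np.cos(np.radians(incidence_deg)), min_cos, 1.0)
    scale = (np.asarray(range_m, dtype=float) / ref_range_m) ** 2
    return np.asarray(intensity, dtype=float) * scale / cos_inc

def classify(norm_intensity, low=0.2, high=0.5):
    """Threshold normalized intensity into coarse classes (placeholder thresholds)."""
    norm_intensity = np.asarray(norm_intensity, dtype=float)
    labels = np.full(norm_intensity.shape, "unclassified", dtype=object)
    labels[norm_intensity < low] = "asphalt"
    labels[norm_intensity > high] = "vegetation"
    return labels

# Hypothetical raw returns: the second is weak only because it is far away and oblique.
raw = [0.10, 0.15, 0.30]
ranges = [100.0, 200.0, 100.0]
angles = [0.0, 60.0, 0.0]
labels = classify(normalize_intensity(raw, ranges, angles))
print(list(labels))
```

Without the range and angle correction, the second point would look darker than the third. After normalization it is the brightest of the three, which is why normalizing intensities across ranges and angles has to come before any intensity-based classification.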

Today, “most image processing techniques are mutually exclusive,” Iavarone observes. “You have a matrix of possibilities in analyzing the 3D point cloud, and another matrix of possibilities with each additional kind of sensor data. Imagine the benefit in multiplying those matrices – that’s what future developments in analytic software will bring, when it becomes possible to analyze many kinds of data simultaneously.”