SPAR Japan keynoter looks at data capture’s role in moving 3D to 4D

KAWASAKI, Japan – The goals of Hokkaido University’s Division of Systems Science and Informatics are lofty: “Specifically, it is an academic field in which elemental technologies in such disciplines as information, mechanics, electrical engineering and electronics are combined to design integrated systems.” In Masahiko Onosato’s part of that division, called Digital Systems and Environments, they are working to continuously capture reality and make it digital, bringing terrain, objects, even animals and people, into what he called the Cyber Field during his keynote address here at SPAR Japan.

Essentially, he said, it is not enough to document our environment once. It needs to be done almost continuously for the true benefits to be realized. There needs to be a feedback engine, whereby data is continually fed into the system and the system in turn returns information that can be used to make intelligent decisions.

This is perhaps the true promise, he indicated, of augmented reality. As the user experiences the world, information is provided to enhance that experience while, at the same time, more information is being collected.

Of course, crucial to this is the integration of various kinds of collected data, including that collected by mobile scanning systems, terrestrial laser scanners, airborne lidar, photogrammetry – anything that gets us closer to capturing the world and making it digital as it really is.

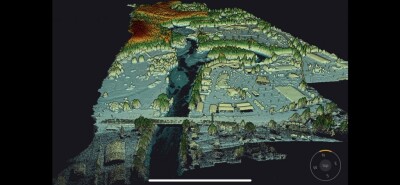

How can a task this monumental be accomplished? Onosato pointed out that it is already being done, though those doing it might not even realize. He referenced, for example, the University of Washington’s Building Rome in a Day project, whereby researchers merely searched “Rome” on Flickr, and produced more than two million photographs. Using a sample of those – some 2,106 images – they were able to use photogrammetry software to produce point clouds like the following:

How can this sort of crowd-sourced information be leveraged? Researchers are still working on it, obviously.

One initiative undertaken by Onosato and his department is the InfoBalloon, a balloon that can be tethered over city centers carrying any number of data collection instruments, from laser scanners to weather-monitoring devices.

He noted that had similar devices been in place over cities that have recently suffered earthquake devastation, for example, similarities between those events might have been identified, so that search and rescue operations could be better organized and coordinated and survivors found more quickly.

Further, by collecting data on the destruction, and having data already on hand about how the buildings were built in the first place, simulations could be performed predicting both which buildings would likely be affected first and how they would collapse, helping to identify pockets where survivors might be trapped.

This 4D modeling, he said, is crucial to moving forward with his research, but it is incredibly processor- and storage-intensive. Continuous collection and display of xyz data consumes petabytes of storage in rapid fashion. Still, he showed some very interesting video of a scanned salmon swimming in real time and interacting, also in real time, with CAD-generated objects. What kind of applications for this kind of simulation could be created?

As research continues into populating the Cyber Field with more and more data from the field of reality, important applications will emerge, Onosato confidently predicted.