Since its inception in 2014, millions of user-generated images were uploaded to the Mapillary platform. Computer Vision Engineer Lorenzo Porzi explains how Mapillary’s platform reconstructs and geo-positions single objects found on these images inside 3D models, with the aid of semantic segmentation, Structure from Motion and deep learning.

Mapillary is a mapping platform that uses crowd-sourced smartphone imagery to improve inaccurate existing maps, or create new ones altogether. Anyone can use their smartphone app to upload imagery that is processed in the cloud, resulting in a browser-based street-view imagery service comparable to that of Google. Since its inception in 2014, many companies and individuals use Mapillary’s platform for their daily operations. The street imagery database has grown too, counting 680 million photos while 2 million are being added every day.

Analyzing and processing of such large amounts of imagery is only possible using automated computer vision technology. In the early days, Mapillary’s imagery was processed automatically and stitched together into street-view imagery using artificial intelligence. However, by 2016, Mapillary’s clients soon wanted more than just street-view imagery, and demanded object recognition and classification. As an example, this would enable municipalities to use Mapillary’s street-view data to keep track of their traffic sign inventory and plan maintenance work. To make this happen, Mapillary needed to figure out a technology that could turn 2D imagery into 3D object location data. Additionally, the solution needed to be scalable so that lots of imagery could be processed and analyzed quickly.

Deep learning with PyTorch

For the first time, Mapillary would use a deep learning network to build models that were able to classify all objects inside the imagery. For this, a Python-based scientific computing package named PyTorch, released that same year was adopted, that uses the power of graphics processing units and is also one of the preferred deep learning research platforms built to provide maximum flexibility and speed.

Lorenzo Porzi, Mapillary Computer Vision Engineer

Looking back on the choice for a deep learning framework back in 2016, Mapillary’s Computer Vision Engineer Lorenzo Porzi states that PyTorch was ahead in many respects according to benchmarking reports that trained in multiple frameworks. Finally, Mapillary chose PyTorch because of its flexibility during the research phase, says Porzi.

“Flexibility in this context means that it can interacted with it at many different levels, even while it is computing you can change things in the network in every iteration. At the time, PyTorch allowed you to do a dynamic setup of the computational graph, something that wasn’t possible in Tensorflow. It was also important that we could embed Cuda, a parallel computing platform and programming model developed by Nvidia for general computing on its own GPUs, and run our models directly on the GPU”.

Object detection and creating 3D spaces

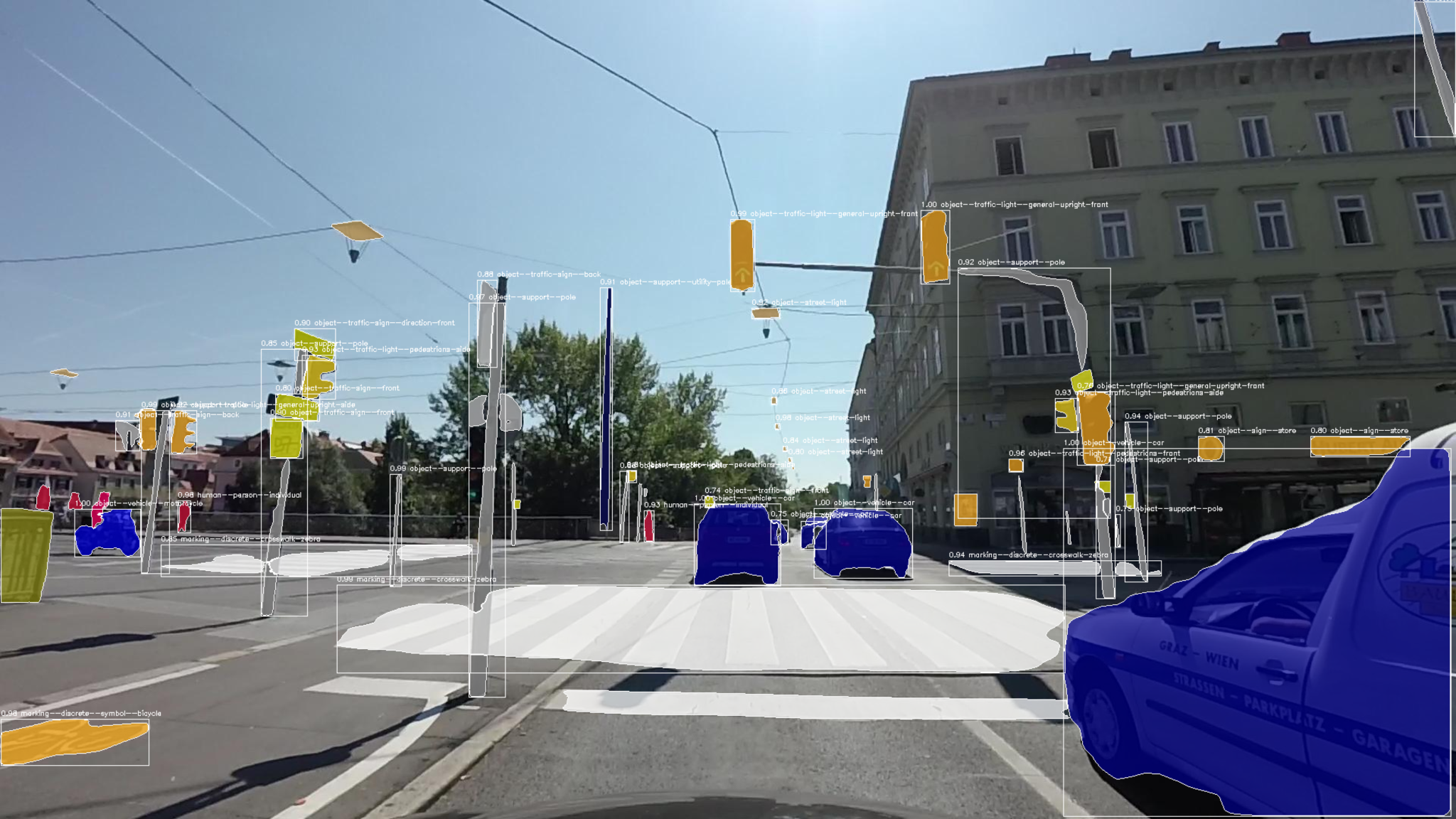

After object recognition and classification, clients started asking for geo-positioning of individual detections of objects in Mapillary’s images. Having a large imagery database made this job easier for Mapillary, because the more images there were available for a specific point, the more accurately it could be reconstructed. Porzi explains that together with 3D reconstruction, semantic segmentation enables 3D extraction of the positions of different objects such as traffic signs, and display them on a map.

“Mapillary runs semantic segmentation to detect different objects within the images, while Structure from Motion (SfM) is used to create and reconstruct places in 3D. By matching points between different images, SfM can locate an object in a 3D space and therefore determine its location on the map. For larger objects such as crosswalks, the center of the bounding box for that specific object is used as the map-based location.”

Estimating where objects are located, before they can be placed on the map as stand-alone map features required the algorithms to detect the same object from different images. To do this, Mapillary used their 3D models to connect the images across many different contributors, in order to provide us with the necessary localization information. Porzi explains that to create these 3D models, Mapillary’s own algorithms were run on the AWS cloud computing platform using OpenSfM, an in-house developed open-source Structure from Motion library, where thousands of matching points between images in the same geographic location are automatically identified.

These points give information about the 3D position of objects as well as make it possible to navigate through the images seamlessly on the Mapillary apps or web browser. The primary requirement to upload imagery to Mapillary’s platform is that the images are geotagged, which allows the images to be automatically positioned on the global map and made available for anyone to explore. Additionally, it is also possible to see the objects and map features detected in imagery using the Mapillary web app.