Advances in light field technology, aided by machine learning, make it possible to quickly and easily create photo-realistic 3D views of complex real-world scenes and products.

Fyusion, a San Francisco-based machine learning and computer vision company, announced the release of software that uses novel techniques to capture detailed and complex 3D scenes with a minimum of user effort. These techniques are targeted at retailers, giving potential clients or consumers an impressively realistic view of products they intend to purchase, but they could have broader applications in photogrammetry and other 3D capture fields.

The software from Fyusion makes strides in the area of light field technology, allowing users to render detailed and complex scenes with incredible realism.

Fyusion began with a group of four co-founders who were researchers in machine learning. They created Fyusion with the goal of providing 3D product capture and visualization solutions that could help consumers make better buying decisions online. Stefan Holzer, CTO of Fyusion, emphasized that both the rendering time and the quality are important.

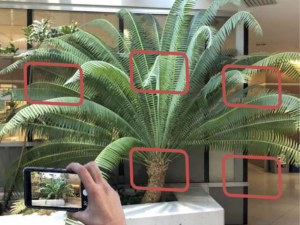

“The goal is to make it attractive for users to capture a 3D scene using handheld or consumer devices, and then – in a reasonable timeframe – provide them with a rendering of the object.”

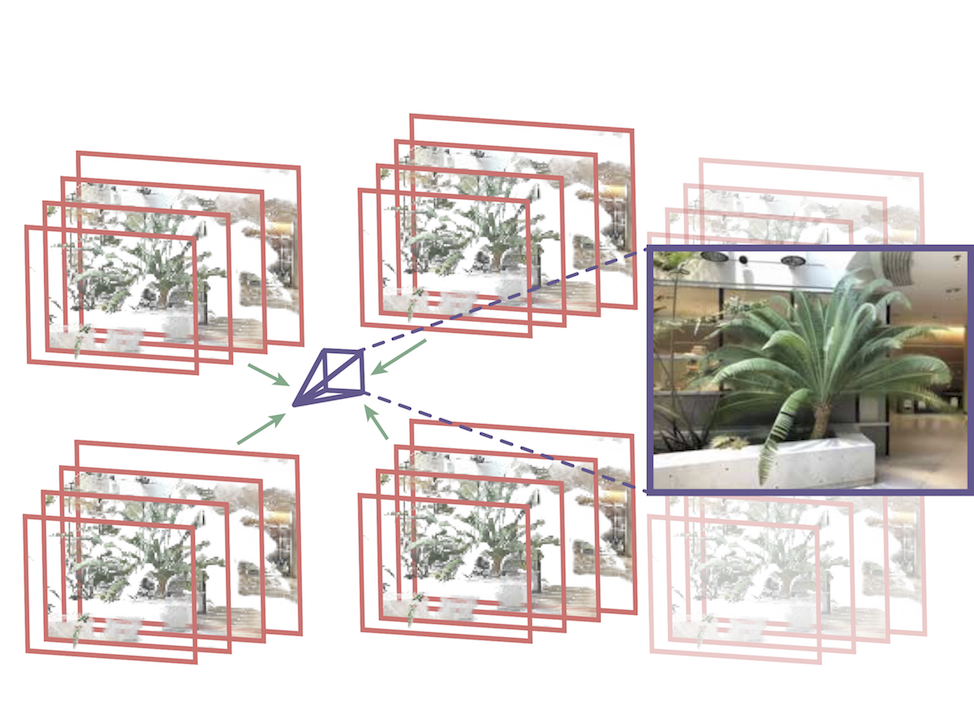

The theoretical framework for this technology was laid out in a recent white paper published in ACM Transactions on Graphics, a peer-reviewed publication focused on computer-aided design, image generation and rendering. The goal of the paper was to investigate how many ‘viewpoints’ are truly necessary to capture a scene and render a good 3D representation of it. Fyusion’s tool uses this calculation to guide users toward those viewpoints, using augmented reality to help them align the scene and capture what is needed.

Once those viewpoints have been captured via smartphone or handheld device, Fyusion uses a deep learning model to convert each 2D capture into a multiplane image (MPI), a layered representation of the scene. Fyusion then synthesizes new 3D views by blending renderings from adjacent layered representations.
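For readers who want a feel for what "layered" and "blending" mean here, the following is a minimal sketch for a single pixel, not Fyusion's implementation. It assumes an MPI pixel is a stack of color-plus-transparency (RGBA) layers that can be collapsed with the standard "over" operator, and that two such renderings are mixed with an alpha-weighted average. The function names `composite_pixel` and `blend_views` and the `weight_a` parameter are hypothetical, chosen for illustration only.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]
RGBA = Tuple[float, float, float, float]  # all channels in [0, 1]


def composite_pixel(layers_back_to_front: List[RGBA]) -> Tuple[RGB, float]:
    """Collapse one pixel's stack of RGBA layers with the 'over' operator.

    Returns the color premultiplied by coverage, plus the accumulated alpha.
    """
    r = g = b = 0.0
    alpha = 0.0
    for lr, lg, lb, la in layers_back_to_front:
        # Each nearer layer covers what lies behind it in proportion to its alpha.
        r = lr * la + r * (1.0 - la)
        g = lg * la + g * (1.0 - la)
        b = lb * la + b * (1.0 - la)
        alpha = la + alpha * (1.0 - la)
    return (r, g, b), alpha


def blend_views(color_a: RGB, alpha_a: float,
                color_b: RGB, alpha_b: float,
                weight_a: float) -> RGB:
    """Blend two premultiplied renderings of the same pixel.

    weight_a in [0, 1] is an assumed closeness weight for view A; view B
    gets 1 - weight_a. Dividing by the weighted alpha lets a view that
    saw nothing at this pixel drop out of the result.
    """
    weight_b = 1.0 - weight_a
    denom = weight_a * alpha_a + weight_b * alpha_b
    if denom == 0.0:
        return (0.0, 0.0, 0.0)  # neither view has any coverage here
    return tuple(
        (weight_a * ca + weight_b * cb) / denom
        for ca, cb in zip(color_a, color_b)
    )


# Opaque red background behind a half-transparent blue foreground layer.
view_a, alpha_a = composite_pixel([(1.0, 0.0, 0.0, 1.0), (0.0, 0.0, 1.0, 0.5)])
# A second view that sees only the red background.
view_b, alpha_b = composite_pixel([(1.0, 0.0, 0.0, 1.0)])
novel = blend_views(view_a, alpha_a, view_b, alpha_b, weight_a=0.25)
```

A real renderer would first reproject each layer into the new camera position and run this over every pixel; the sketch skips that step to keep the compositing and blending logic visible.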

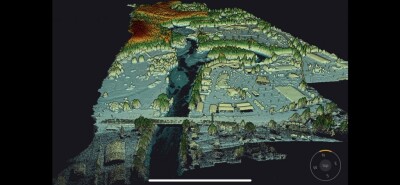

When multiple viewpoints have been captured in the prescribed grid, the results can then be combined into a format that can be navigated in 3D space. The approach needs up to 4,000 times fewer images to produce a single 3D image, bringing the time and cost down to a level that its target audience of retailers could easily manage.

Despite the low number of images needed to create the 3D scenes, Fyusion’s software is particularly impressive when used to scan transparent and reflective objects, something that traditional methods have struggled to capture accurately. In a statement, the company emphasized its photorealistic results.

“The software breaks new ground in the area of light field technology by allowing users to render stunning scenes with a degree of realism previously thought impossible.”

The technical process behind this approach is described in the 5-minute video below.

The output of this effort is a viewer that can be used on mobile devices as well as on AR or VR headsets. To look around the scene, a user moves their head (when using a headset), moves the device (when using a smartphone), or drags with a mouse (when viewing on a desktop).

Fyusion’s software is already being used by a number of commercial sectors, including the automotive industry, where its goal is to help retailers to increase and enhance engagement with shoppers researching (or making) their purchases online.

Earlier this year, Fyusion received an investment from Cox Automotive, a leading digital wholesale marketplace for used vehicles, which is using Fyusion’s software to display 3D images of cars on its websites. And in June, Fyusion announced a $3M investment from Itochu, one of the largest Japanese e-commerce fashion and apparel companies, which is using Fyusion to show images of models wearing outfits on its brands’ retail sites.

While the capture software is optimized for smartphones, it can be used on other capture devices. It can also be enhanced by the use of drones, says Holzer.

“A drone could go around the car in a programmed flight and get a 360 view, or could go in for a close up, etc.”

A preview of Fyusion’s technology has been posted on GitHub for public use and testing, and further information can be found in the online presentation supplements and at fyusion.com/immersive3D.