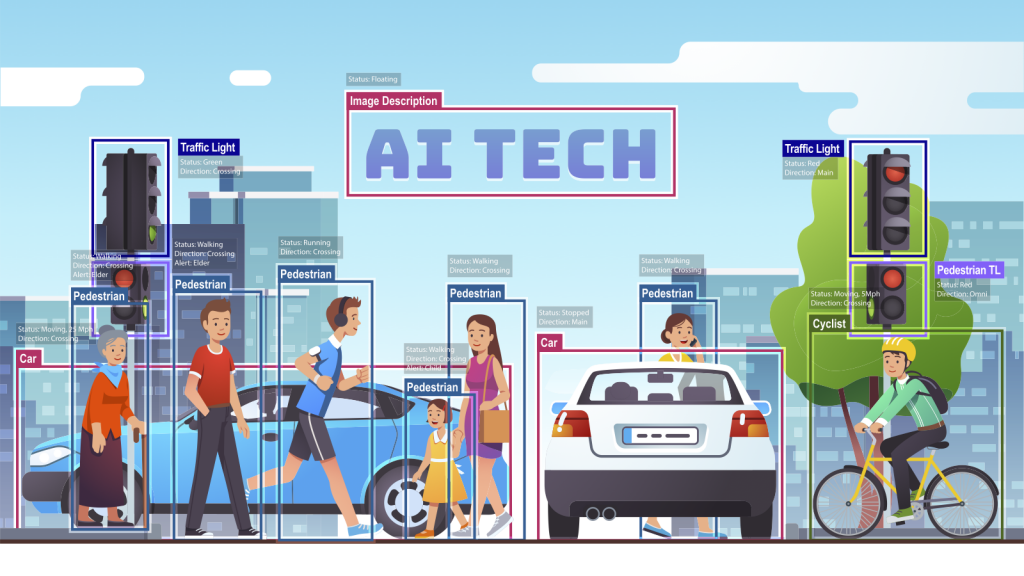

As the geospatial industry continues to witness significant advancements in technology, AI image recognition has emerged as a powerful tool with the potential to revolutionize how we extract, analyze, and interpret geospatial data. With the ability to automatically identify and classify objects and features in satellite imagery and lidar data, AI image recognition holds promise for applications in urban planning, environmental monitoring, disaster management, and more. However, despite these impressive capabilities, it is essential to acknowledge the limitations and challenges that keep these technologies well short of omniscience. This is where we separate fact from science fiction: what is the current state of AI, and what is and is not possible?

In the latest report from Geo Week, industry experts explore these technologies and also give us the reality of their current readiness.

Image recognition in geospatial applications

Image recognition, when combined with geospatial data, GIS, and lidar, offers a powerful toolset for understanding and analyzing the Earth's surface. This integration enables advanced applications in fields such as urban planning, environmental monitoring, agriculture, and disaster management.

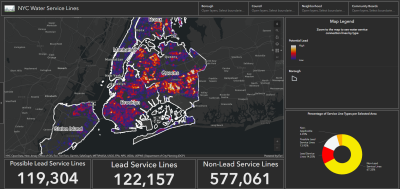

One prominent application is the extraction of geospatial information from satellite or aerial imagery using image recognition techniques. By analyzing these images, AI algorithms can automatically identify and classify various objects and features on the Earth's surface, such as buildings, roads, vegetation, bodies of water, and land cover types. This information can then be integrated into a GIS to create detailed and up-to-date maps, perform spatial analyses, and support decision-making processes.

Take, for example, the ability to recognize features along municipal roads. This work might previously have been managed through a spreadsheet, or required time-consuming in-person or virtual surveys with a human identifying each stop sign, curb, or sewer grate, says Aaron Morris of Allvision.io.

“What [AI] can do at its very basic level is to take a lot of this data that is now readily available and start to mine it. Find me a thing that looks like this. And even if it is not ideal, it’s significantly better, in terms of a time perspective, than somebody virtually or manually walking through data and doing the work.”

Additionally, combining image recognition with lidar data enhances the accuracy and precision of geospatial analyses. By fusing lidar data with imagery, AI algorithms can extract additional information about the height, elevation, and structure of objects. This combination enables applications such as terrain modeling, flood mapping, forest inventory, and infrastructure planning, where both the visual and geometric properties of the landscape are crucial.
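As a rough illustration of that fusion, the sketch below subtracts a bare-earth elevation grid from a lidar surface grid to get object heights, then averages those heights per class from an image classifier. The grid values, the class names, and both function names are hypothetical, and the grids are assumed to be already co-registered; real pipelines would work on full rasters or point clouds.

```python
# Hypothetical, co-registered 2x2 grids covering the same area (assumed inputs).
# dsm: lidar surface elevation (m); dtm: bare-earth elevation (m)
# labels: per-cell class produced by an image classifier (illustrative names)

def height_above_ground(dsm, dtm):
    """Per-cell object height: surface elevation minus ground elevation."""
    return [[max(s - g, 0.0) for s, g in zip(srow, grow)]
            for srow, grow in zip(dsm, dtm)]

def mean_height_by_class(heights, labels):
    """Average lidar-derived height for each image-derived class."""
    totals = {}
    for hrow, lrow in zip(heights, labels):
        for h, label in zip(hrow, lrow):
            total, count = totals.get(label, (0.0, 0))
            totals[label] = (total + h, count + 1)
    return {label: total / count for label, (total, count) in totals.items()}

dsm = [[105.0, 112.0], [101.0, 118.0]]
dtm = [[100.0, 100.0], [101.0, 100.0]]
labels = [["vegetation", "building"], ["road", "building"]]

heights = height_above_ground(dsm, dtm)
summary = mean_height_by_class(heights, labels)
print(summary)
```

Here the imagery supplies the "what" (building, road, vegetation) and the lidar supplies the "how tall", which is the kind of combined visual and geometric information the paragraph above describes.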

“What you’re going to see is we’re going to start to ramp up. So the next cycle, you already know where things generally are, and you have some level of quality control. The next time an iteration occurs, the amount of time consumed to get into the higher quality will be less. A finer level of detail and finer granularity will come out of it. AI is now really getting us to the point where we can get a high frequency, reliable digital representation of the world, hence this digital twin concept,” adds Morris.

Furthermore, image recognition with geospatial data can aid in change detection and monitoring over time. By comparing images or scans taken at different times, AI algorithms can identify and quantify changes in land use, vegetation cover, and urban development. This capability is invaluable for monitoring deforestation, urban expansion, land degradation, and natural disasters. It enables timely interventions, facilitates environmental assessments, and supports sustainable management of natural resources.
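A minimal sketch of this idea, assuming two already-classified and aligned grids of the same area from different dates: it counts how many cells moved from one class to another and what share of the area changed. The class names, sample grids, and function names are illustrative assumptions, not taken from any particular product.

```python
from collections import Counter

def class_transitions(before, after):
    """Count cells whose class differs between the two dates, keyed by (old, new)."""
    counts = Counter()
    for brow, arow in zip(before, after):
        for old, new in zip(brow, arow):
            if old != new:
                counts[(old, new)] += 1
    return counts

def changed_fraction(before, after):
    """Share of all cells whose class changed between the two dates."""
    total = sum(len(row) for row in before)
    changed = sum(class_transitions(before, after).values())
    return changed / total

# Hypothetical land cover grids for the same area at two dates.
before = [["forest", "forest", "water"],
          ["forest", "urban", "water"]]
after = [["forest", "urban", "water"],
         ["bare", "urban", "water"]]

transitions = class_transitions(before, after)
fraction = changed_fraction(before, after)
print(transitions, fraction)
```

In practice, much of the difficulty lies upstream of this comparison: the two dates must be aligned and classified consistently, otherwise classification noise shows up as false change.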

After a natural disaster, this kind of monitoring can help support and rescue teams get on the ground sooner and decide where to target their first responses, says Asa Block of Near Space Labs.

“One of the things we do is provide highly recent, ultra-high-resolution imagery to analytic companies to enhance their algorithms and models for things like tarp detection. In a post-catastrophe situation, the first thing folks often do is put a blue tarp on their roof. When we fly and collect the data, it automatically gets processed via API into our analytic partners’ platforms and becomes readily usable by insurers. With this rapid information, insurers are actually able to proactively alert their insurance customers that, hey, here’s a building that very likely might have damage. And then when you get to an even more granular level, we can say, hey, there’s a building here that used to have all the shingles on the roof, and now there are 10 shingles missing.”

While this technology can yield information that would have taken many more human assets to collect and analyze in the past, it is important to understand that AI is still not at a point where it can do everything on its own, says Block.

“In the insurance field AI can, and is already, helping improve the efficiency of underwriting and claims teams. When property inspections are needed, a combination of data sets like Google Street View, or high-resolution imagery like we provide, can tell carriers a lot of information so that a human assessor can make a decision. Do they need to go to that house, or do they have enough information to make the decision remotely? The help of AI allows productivity to scale up, allowing humans to deploy resources to other important things.”

Despite significant advancements, AI image recognition and classification still face several limitations in their current abilities. Firstly, the accuracy of these algorithms heavily depends on the quality and diversity of the training data. Insufficient or biased datasets can lead to inaccurate classifications and limited generalization to new environments. Moreover, contextual understanding remains a challenge for AI models, as they often struggle to grasp the complex relationships and spatial context between objects within an image. This limitation can result in misinterpretations and erroneous classifications.

Additionally, the interpretability of AI image recognition systems is limited, making it difficult to understand the reasoning behind their decisions, leading to concerns about transparency and trustworthiness. Handling uncertainty and ambiguity in geospatial images also presents challenges, particularly in complex or cluttered scenes where objects may exhibit variations in appearance. Lastly, ethical considerations, including privacy concerns and the potential for biases, need careful attention to ensure fair and equitable deployment of AI image recognition technology. Addressing these limitations is vital for further progress and to enhance the reliability and effectiveness of AI image recognition and classification systems.
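One common way to work within these limits is to keep a human in the loop for uncertain results. The sketch below is an assumption-laden illustration rather than any vendor's workflow: detections with a model confidence below a chosen threshold go to a human reviewer, and the rest are accepted automatically. The record fields, the labels, and the 0.8 threshold are all hypothetical.

```python
def triage(detections, threshold=0.8):
    """Split detections into auto-accepted and human-review lists by confidence."""
    auto, review = [], []
    for det in detections:
        if det["confidence"] >= threshold:
            auto.append(det)
        else:
            review.append(det)
    return auto, review

# Hypothetical detections from a roof-damage model (illustrative values).
detections = [
    {"id": "roof-001", "label": "blue_tarp", "confidence": 0.95},
    {"id": "roof-002", "label": "blue_tarp", "confidence": 0.55},
    {"id": "roof-003", "label": "missing_shingles", "confidence": 0.81},
]

auto, review = triage(detections)
print([d["id"] for d in auto], [d["id"] for d in review])
```

The threshold is a policy choice: lowering it sends fewer cases to people and accepts more model errors, while raising it does the opposite.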

Learn more about what industry experts have to say, both about the technology's potential to be transformative and about what may slow its progress, by downloading the free report today.