Want to develop apps for ARCore? Google has just announced that the Depth API is available in ARCore 1.18 for Android and Unity, including AR Foundation. That makes it available on a broad range of ARCore-compatible Android devices, no depth sensor required.

Over the last year, we’ve seen more than a few headlines about handheld mobile devices beginning to create 3D scans in various ways. Some translate 2D photos into depth using sophisticated algorithms, while others rely on new, state-of-the-art depth sensors to get the information they need for mapping.

According to the ARCore developer documentation, ARCore uses SLAM (simultaneous localization and mapping) to understand the phone’s location relative to what it is looking at:

“ARCore detects visually distinct features in the captured camera image called feature points and uses these points to compute its change in location. The visual information is combined with inertial measurements from the device’s IMU to estimate the pose (position and orientation) of the camera relative to the world over time.”

At the end of last year, Google shared some details about the ARCore Depth API, which derives depth from the motion seen by a single camera, and invited collaborators to join a ‘preview’. Those initial collaborators have tested the depth-from-motion algorithms, and several of their apps are now available to download.
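For a rough intuition of how a single moving camera can recover depth at all, consider the textbook two-view parallax model. This is a simplification for illustration, not Google’s published algorithm, which combines many frames. Assume the phone’s tracking reports that the camera moved sideways by a baseline $B$ between two frames without rotating, the image has a focal length of $f$ pixels, and a feature shifts by a disparity of $d$ pixels between the frames. Its depth $Z$ is then approximately:

$$
Z = \frac{f\,B}{d}
\qquad\text{e.g.}\quad
Z = \frac{500\ \text{px} \times 0.05\ \text{m}}{10\ \text{px}} = 2.5\ \text{m}
$$

Nearby points shift more between frames than distant ones, which is why moving the phone around gives the algorithm what it needs to build a depth map.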

An example of occlusion after generating a depth map with an Android device. (Image credit: Google)

What started as an interesting follow-on to the defunct Google Tango is now touted by Google as a step toward more realistic and responsive augmented reality experiences. The key problem ARCore is trying to solve is the placement of AR objects in real-world space. In most AR apps, virtual objects can only be placed onto or in front of real-world objects (e.g., a 3D model on a table), because the app lacks the depth information needed for occlusion: hiding an AR object when it should appear behind something in the frame.

The key capability now available in the Depth API is seamless occlusion, which makes virtual objects feel as if they are actually in the space with you rather than sitting on top of it. The original collaborators included game developers, Snapchat, and other companies; several examples of their tests and products are featured in the Google Developers blog post describing the new ARCore update.
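To make occlusion concrete, here is a minimal sketch of the per-pixel depth test behind it, written in Python purely for illustration. The function `composite_with_occlusion` and its inputs are hypothetical and not part of the ARCore SDK. It assumes a real-world depth map in meters (with `None` where no estimate exists), like the kind of map a depth API produces, plus the depth of the rendered virtual object at each pixel (`None` where the object is absent). A virtual pixel is drawn only when it is closer to the camera than the real surface behind it.

```python
from typing import List, Optional, Tuple

RGB = Tuple[int, int, int]
DepthMap = List[List[Optional[float]]]  # meters; None = no value at this pixel


def composite_with_occlusion(
    real_depth: DepthMap,
    virtual_depth: DepthMap,
    camera_rgb: List[List[RGB]],
    virtual_rgb: List[List[RGB]],
) -> List[List[RGB]]:
    """Blend a rendered virtual object into the camera image with a depth test."""
    height = len(real_depth)
    width = len(real_depth[0]) if height else 0
    out: List[List[RGB]] = []
    for y in range(height):
        row: List[RGB] = []
        for x in range(width):
            v = virtual_depth[y][x]
            r = real_depth[y][x]
            if v is None:
                # No virtual content here: show the camera image.
                row.append(camera_rgb[y][x])
            elif r is None or v < r:
                # Real depth unknown, or the virtual surface is in front: draw it.
                row.append(virtual_rgb[y][x])
            else:
                # A real surface is closer: the virtual object is hidden (occluded).
                row.append(camera_rgb[y][x])
        out.append(row)
    return out


if __name__ == "__main__":
    CAM, VIR = (10, 10, 10), (255, 0, 0)
    # A couch 1 m away hides a virtual object placed 2 m away; a wall at 3 m does not.
    result = composite_with_occlusion(
        [[1.0, 3.0]], [[2.0, 2.0]], [[CAM, CAM]], [[VIR, VIR]]
    )
    print(result)
```

Production renderers typically perform this same comparison per fragment on the GPU rather than in a Python loop, but the logic is the one that lets a virtual character disappear behind a real couch.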

One app that showcases more of this technology’s potential is Lines of Play, a Google Creative Lab project. In Lines of Play, you can set up dominoes that react to how they are arranged and what they encounter (e.g., if you place an obstacle in their way, they stop falling).

Impact on 3D Enterprise/Industry

While it may be easy to dismiss this development as useful only for letting Pokémon hide behind your couch, there are several ways it could prove to be a significant leap for non-gaming AR development as well.

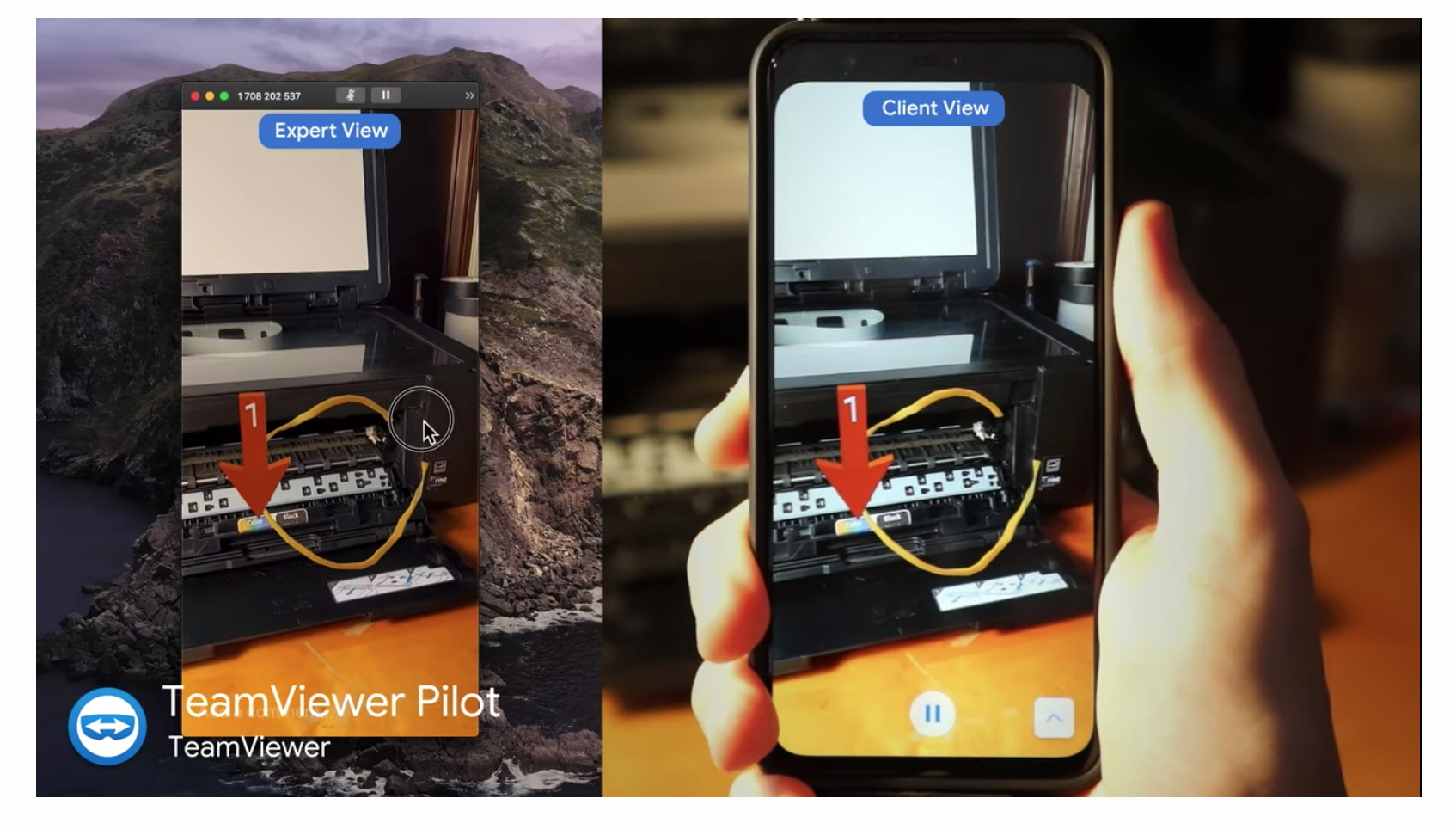

On the enterprise side, there are applications for training and screen-sharing platforms such as TeamViewer Pilot, where more accurate annotations on complex parts and scenes could make it a powerful tool for virtual collaboration across many industries. Other use cases, such as the Streem app for remote collaboration, training, and communication, are highlighted as examples of ARCore development.

Being able to rapidly assess a scene’s depth without dedicated depth sensors, and then place AR objects within it, opens up a world of possibilities. What if you could add navigation signs to a jobsite via AR? What if you could annotate a particular bolt that a technician needs to remove from a complex pump assembly? What if you could better model line of sight when planning industrial spaces with AR-placed equipment? Because the SDK is openly available, there is a lot of potential for new 3D applications beyond entertainment and gaming. Over the next year, I would be on the lookout for more interesting apps across a variety of verticals that take advantage of this new tech.

To learn more and get started with the ARCore Depth API, the SDK and ARCore developer website provide more details.