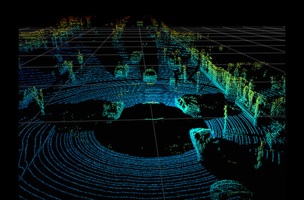

There’s a lot at work in the time-of-flight sensors that autonomous cars use to sense obstacles in their environment: these sensors look for precise timing, wavelength, and duration in the returning pulses, and they won’t accept just any bit of light as a valid input. That is what makes it so remarkable that you can fool an automotive LiDAR for the cost of a few pizzas.
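The core time-of-flight arithmetic is simple: a pulse travels out and back, so the distance to whatever reflected it is the speed of light times the round-trip time, divided by two. The sketch below illustrates that, plus a hypothetical acceptance gate showing what "won’t accept just any bit of light" might mean in practice. The function names, the 5 ns pulse width, the tolerance, and the 200 m window are illustrative assumptions, not parameters of any real LiDAR unit, and wavelength filtering (normally done optically) is left out.

```python
# Minimal time-of-flight sketch. The gating thresholds below are
# hypothetical illustration values, not figures from any real LiDAR unit.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """One-way distance to a reflector from a pulse's round-trip time.

    The pulse travels out and back, so distance = c * t / 2.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def accept_return(
    round_trip_s: float,
    pulse_width_ns: float,
    expected_width_ns: float = 5.0,   # hypothetical emitted pulse width
    width_tolerance_ns: float = 1.0,  # hypothetical width tolerance
    max_range_m: float = 200.0,       # hypothetical listening window
) -> bool:
    """Hypothetical gate: keep a return only if its width matches the
    emitted pulse and it arrives inside the sensor's listening window."""
    width_ok = abs(pulse_width_ns - expected_width_ns) <= width_tolerance_ns
    in_window = 0 <= round_trip_s <= 2 * max_range_m / SPEED_OF_LIGHT_M_PER_S
    return width_ok and in_window


# A return arriving 1 microsecond after the pulse fired is ~149.9 m away.
print(round(tof_distance_m(1e-6), 1))  # 149.9
```

A gate like this filters out random stray light, but it says nothing about *who* sent a pulse: any light that has the right shape and arrives inside the window gets turned into a distance.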

Jonathan Petit, Principal Scientist at Security Innovation and a longtime researcher of potential cyberattacks on automated vehicles, has found a way to “hack” an automotive LiDAR sensor for under $60.

An article published by IEEE describes the simple rig, which consists of nothing more than a low-power laser and a pulse generator. “It’s kind of a laser pointer, really,” he told the publication. “And you don’t need the pulse generator when you do the attack. You can easily do it with a Raspberry Pi or an Arduino. It’s really off the shelf.”

Petit recorded pulses from a commercial automotive LiDAR unit and “replayed” them to the sensor later with the correct timing. This let him convince the unit that an obstacle was in its path, or even that a huge number of obstacles were. He faked cars and pedestrians at different distances, and he made them move. Petit told IEEE that he was able to fake thousands of obstacles, overwhelming the sensor so that it couldn’t track real objects. And he didn’t even need to aim his laser precisely at the unit.
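Why does replaying with "the correct timing" place a fake object at a chosen distance? Because the sensor converts arrival time into distance, a pulse that arrives 2d/c after the sensor fires looks like a reflection from distance d. The toy model below shows only that arithmetic; it is not Petit’s tooling. It assumes, hypothetically, that the injected pulse is already synchronized to the sensor’s firing, and the function names are illustrative.

```python
from typing import List

# Toy replay-timing model. Assumes (hypothetically) that the injected pulse
# is synchronized to the moment the sensor fires; pulse shape is ignored.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def spoof_delay_s(fake_distance_m: float) -> float:
    """Delay after the sensor fires at which a pulse must arrive for the
    sensor to report an object at fake_distance_m (t = 2d / c)."""
    return 2.0 * fake_distance_m / SPEED_OF_LIGHT_M_PER_S


def approaching_object_delays(start_m: float, speed_m_s: float,
                              frame_s: float, frames: int) -> List[float]:
    """One delay per sensor frame for a phantom object that appears to
    close in at speed_m_s (distance clamped at 0 m)."""
    delays = []
    for i in range(frames):
        distance = max(start_m - speed_m_s * frame_s * i, 0.0)
        delays.append(spoof_delay_s(distance))
    return delays


# A phantom 20 m ahead needs its pulse to arrive about 133.4 ns after firing.
print(f"{spoof_delay_s(20.0) * 1e9:.1f} ns")  # 133.4 ns
```

Shrinking the delay from frame to frame is all it takes to make the phantom appear to move, which matches the moving cars and pedestrians described above.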

Petit emphasized that he did not set out to prove that this manufacturer made a poor product, but rather to demonstrate that manufacturers and consumers alike need to start considering the security of the sensors that go into automated vehicles. When LiDAR is used outside of this application, for measurement or data capture, the idea of someone hacking your device seemed quaint. Who would want to do that? To what possible end? On top of that, the systems used for more precise data capture are much more complex and would take a great deal more effort to hack. Once you start building simplified systems to put on autonomous cars, however, those sensors become a way for someone to interfere with the car and endanger the lives of its occupants.

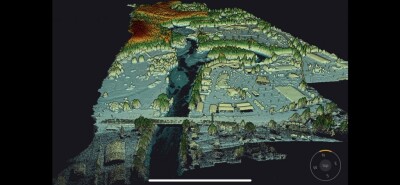

As part of his research at his former gig with University College Cork’s Computer Security Group, Petit spent a lot of time studying the systems that make self-driving vehicles work. He found that nearly every one of them could be “hacked” in a similar way: you can trick a car’s machine vision by blinding its cameras, spoof its GPS signal, infect its on-board computer with malicious programs, chaff its radar, and even “poison” its maps.

The situation isn’t totally hopeless, though. These sensor systems can be designed to work together, with their data fed into “data fusion” software that decides the best course of action given all the inputs. This means that one system failing wouldn’t be totally catastrophic, as long as the software can compare that system’s output to the output of a few other systems.

An example: if the LiDAR sees a hundred cars in front of you, but neither the machine vision cameras nor the radar do, then the car can conclude that the LiDAR is wrong and act accordingly.
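One simple way to express that cross-check is majority voting: keep only detections that at least two sensors agree on, and flag any sensor whose reports are mostly unconfirmed. This is a minimal sketch of that one technique, not how any particular carmaker’s fusion software works. It assumes, hypothetically, that every sensor’s detections have already been projected into a shared grid of (x, y) cells; `fuse`, `min_votes`, and `suspect_ratio` are illustrative names.

```python
from collections import Counter
from typing import Dict, Set, Tuple

# Hypothetical: each sensor's detections are already projected into a
# shared occupancy grid, so a detection is just an (x, y) cell.
Cell = Tuple[int, int]


def fuse(detections: Dict[str, Set[Cell]], min_votes: int = 2,
         suspect_ratio: float = 0.5) -> Tuple[Set[Cell], Set[str]]:
    """Keep cells reported by at least `min_votes` sensors, and flag any
    sensor whose reports are mostly unconfirmed by the others."""
    # Each sensor contributes at most one vote per cell (sets dedupe).
    votes = Counter(cell for cells in detections.values() for cell in cells)
    confirmed = {cell for cell, n in votes.items() if n >= min_votes}

    suspect = set()
    for sensor, cells in detections.items():
        # Fraction of this sensor's detections no other sensor backs up.
        if cells and len(cells - confirmed) / len(cells) > suspect_ratio:
            suspect.add(sensor)
    return confirmed, suspect


# One real car at (5, 0); the LiDAR also "sees" 100 phantom cars.
real = {(5, 0)}
phantoms = {(i, 1) for i in range(100)}
confirmed, suspect = fuse({"lidar": real | phantoms,
                           "camera": real,
                           "radar": real})
print(confirmed)  # {(5, 0)}
print(suspect)    # {'lidar'}
```

Voting like this only helps while an attacker can fool fewer sensors than the vote threshold; spoofing the LiDAR and the cameras at the same time would defeat a two-of-three rule.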

Petit’s main goal, he explains, is just to show LiDAR manufacturers and car manufacturers that this kind of exploit is possible, and it’s something they need to protect against. As he told IEEE, “this might be a good wakeup call for them.”