Last week some researchers from Microsoft, Cambridge, Stanford, and Nankai unveiled a system called SemanticPaint that “allows users to to simultaneously scan their environment, whilst interactively segmenting the scene simply by reaching out and touching any desired object or surface.” In other words, the system allows you to model as you scan.

Here’s how it works

First, you walk through an environment with a 3D depth camera like a Kinect and an RGB sensor. As you do this, the system generates a unified scan from the camera in real time.

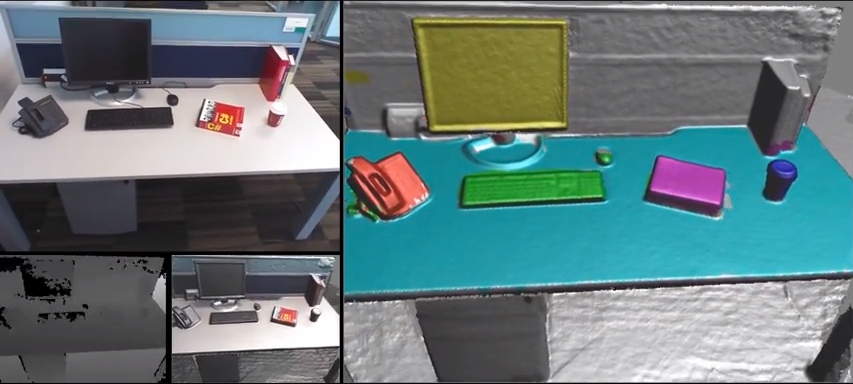

Let’s say you see an object you’d like to segment and label. You’re in the office and you’d like to label your chair–simply reach out, circle the chair with your finger, and say “chair.” SemanticPaint will use RGB and geometry data to guess the limits of the chair. Next, the system segments the chair off, color codes it, and labels it. You can do the same for your coffee mug, your books, and so on.

This is where it gets interesting. As you label, you’re training SemanticPaint to work on its own. Now that you’ve told it what a chair looks like, it looks within the environment for other chairs. If it finds an object that looks like a chair, SemanticPaint segments it off, color codes it, and labels it. Just as if you had selected it yourself.

What if SemanticPaint misses a chair while it’s labeling the rest of the room? When this happens, just label the chair manually. SemanticPaint now updates its learned model of what a chair can look like, which allows it to handle more variation in shape or appearance. This is how it improves.

When you’re done modeling the room this way–labeling your chairs, books, and so on–you’ve trained SemanticPaint to recognize and model the kinds of objects that are in an office. Take it to your neighbor’s office and it will likely model the whole thing perfectly without any extra input. What’s enticing about this is that you can train SemanticPaint to model any kind of objects you want–train it to model pipes, and it will become good at modeling pipes.

In actual use, SemanticPaint works surprisingly well. For one, the system is fast. Researchers note that “a reasonably sized room can be scanned and fully labeled in just a few minutes.” On top of that, the system isn’t very hard on a computer. It can run “at interactive rates on a commodity desktop with a single GPU.”

Lastly, and most importantly, SemanticPaint correctly categorizes a depth point about 90% of the time. Not perfect, but not bad for a first try.

Problems

It’s a brand new technology, and still in the early testing phase, so obviously there are a number of limitations. SemanticPaint has trouble with segmented objects like a mouse or a keyboard. It also has trouble recognizing objects when the RGB data and the depth data are improperly calibrated. Lastly, it’s entirely local. This means it doesn’t take into account any global context, like the relationships of objects to each other.

Even so, it’s important to note that the basic idea holds up: A human operator can teach a machine-learning system how to recognize objects, and do it in real time. It’s not very difficult to get excited about the possibility of interfaces like this.

Possible Future Uses

The researchers said that their system works directly on the depth data “as opposed to requiring lossy and potentially expensive conversion of the data to depth image, point cloud, or mesh-based representation.” But in reading through the paper, I don’t see any reason it couldn’t work directly on some high-quality point cloud data as well (and correct me if I’m wrong).

Thinking about that possibility brings up an interesting use case. Imagine using SemanticPaint with a point cloud that has been loaded into an Oculus Rift. The next version of this VR headset is likely to recognize your hands as you’re wearing it, so it could also see when you “swipe” an object. With this set up, you could treat the point cloud environment like you were in the real world. Simply move through a scan of your facility, or bridge, or airplane, and get labeling.

SemanticPaint’s researchers also offer a few futuristic possibilities of their own. They note that you could use SemanticPaint to create indoor maps for robots. With such a map available, you could tell a robot to get your cup for you. If the map were updated often enough, you could ask it where you left your keys.

The big question, to my mind: What happens once we start loading SemanticPaint on cell phones loaded up with depth cameras? We might start modeling everything.