Given all the recent controversy about the safety of autonomous and semi-autonomous vehicles, I was happy to read a bit of good news. Google’s monthly self-driving car report for June announces some big advances in the way that their cars use LiDAR data to recognize cyclists.

After some time observing cyclists on the roads and on their private test track, Google has taught the car’s software to read sensor data and “recognize some common riding behaviors.”

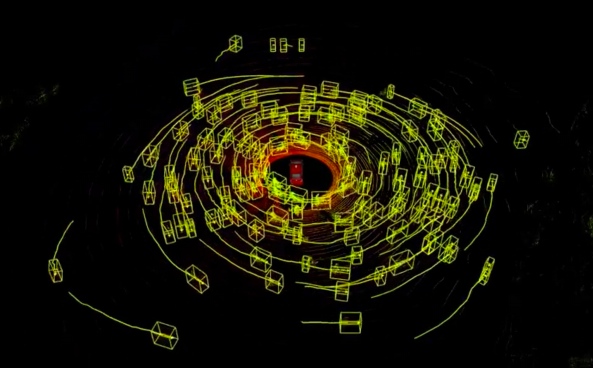

If a cyclist is turning left from the right side of the lane, for instance, she will often use a hand signal to indicate the move ahead of time. Google’s car reads the hand signal and gives the cyclist her space by slowing to let her merge. The car’s software is also designed to “remember previous signals from a rider so it can better anticipate a rider’s turn down the road.”

Google’s self-driving car recognizes a hand signal. Source: Google

Why is this a big deal? A cyclist’s movements are harder to predict than a car’s, and Google’s self-driving cars couldn’t always do it. The cars have always erred on the side of caution, which means that until now they’ve been so conservative that a cyclist doing a track stand could confuse a car into freezing in place.

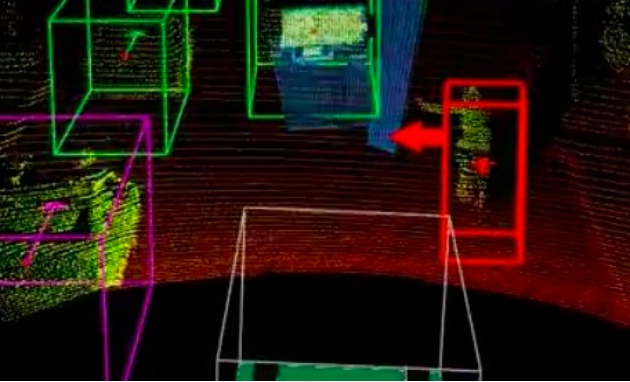

Now, using machine learning techniques, Google has taught their software to recognize many kinds of cyclists on many kinds of bikes. It might even make these cars better at driving around cyclists than human drivers are.

A Case for LiDAR

This makes a pretty good case for LiDAR in autonomous and semi-autonomous vehicles.

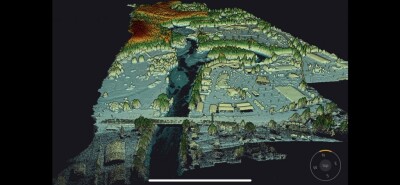

Let’s back up to explain. Google’s car is equipped with a camera that has a limited field of view, and cyclists are often located where the camera can’t see. The car’s LiDAR sensor, on the other hand, reads the environment in 360 degrees, which helps the car recognize cyclists even when a human driver might miss them.
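To make the field-of-view point concrete, here is a minimal Python sketch that checks whether a cyclist at a given bearing falls inside a sensor’s horizontal field of view. The 50-degree forward camera, the sensor names, and the bearing convention (degrees clockwise from the car’s nose) are illustrative assumptions, not specifications of Google’s car, and the sketch ignores range and occlusion entirely.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sensor:
    name: str
    heading_deg: float  # direction the sensor faces, clockwise from the car's nose
    fov_deg: float      # total horizontal field of view in degrees


def angle_diff(a: float, b: float) -> float:
    """Signed smallest difference a - b, wrapped into [-180, 180)."""
    return (a - b + 180.0) % 360.0 - 180.0


def can_see(sensor: Sensor, bearing_deg: float) -> bool:
    """True if a target at bearing_deg lies inside the sensor's horizontal FOV."""
    if sensor.fov_deg >= 360.0:
        return True  # a full 360-degree sensor covers every bearing
    return abs(angle_diff(bearing_deg, sensor.heading_deg)) <= sensor.fov_deg / 2.0


# Hypothetical sensor setup; the numbers are assumptions for illustration only.
camera = Sensor("front camera", heading_deg=0.0, fov_deg=50.0)
lidar = Sensor("roof LiDAR", heading_deg=0.0, fov_deg=360.0)

# Hypothetical cyclist positions, as bearings relative to the car's nose.
for label, bearing in [("ahead", 10.0), ("beside, right", 100.0), ("behind, left", 200.0)]:
    seen_by = [s.name for s in (camera, lidar) if can_see(s, bearing)]
    print(f"cyclist {label} at {bearing:.0f} deg: {seen_by}")
```

With these assumed numbers, only the cyclist directly ahead is visible to the camera, while the 360-degree LiDAR covers all three positions. A real vehicle would typically combine several cameras, so this models a single-camera blind spot rather than any particular car.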

Would a 360-degree camera be enough, as Tesla CEO Elon Musk claims? Maybe. But LiDAR would still have the advantage, given its ability to sense through certain kinds of weather that cameras can’t, and to keep working in bright light and in darkness.

Simply put, LiDAR is a valuable addition to any self-driving car (or semi-autonomous car). At worst, it adds redundancy to a system by duplicating the radar’s depth data and the camera’s imaging data. At best, it adds extra data points to the mix by sensing things that radar and cameras can’t.

When the numbers show that 50,000 cyclists were injured and 720 were killed on American roads in 2014, using these sensors seems like a no-brainer, especially since LiDAR prices are dropping sharply. I mean, what’s a few sensors when we’re talking about saving a human life?