We here at SPAR have obviously been covering driverless cars (or autonomous vehicles) for some time now. We had a presentation titled “Autonomous Vehicles from NIST’s Intelligent Systems Division” at SPAR 2005. It was pretty cool for its time, using SICK lasers, flash lidar, and multi-camera systems to build working prototypes and at least figure out what the issues were.

By 2007, though, things had moved along quite a bit. SPAR was on the scene for the final round of the DARPA Urban Challenge, which pitted 11 organizations against one another to find out who had the best-performing autonomous vehicle navigating city streets, with intersections and human drivers in the mix. Carnegie Mellon took the top $2 million prize, followed by the guys at Stanford and Virginia Tech. Just three years earlier, in the first Challenge event, 15 vehicles started the race and not a single one finished. And that was a desert course without any traffic.

It’s likely that some of the ideas developed in those challenges wound up in Lockheed Martin’s Squad Mission Support System, which we profiled last year and which is out in the field right now, following soldiers around and acting as a mule.

Pretty clearly, the technology is moving forward at a rapid pace. Still, I was a little surprised last fall when Sergey Brin went public in a major way about Google’s driverless car initiative, and I was similarly surprised this week to read the MAMMOTH article in Wired about driverless car technology and its looming commercial availability.

Or should I say current commercial availability. Even though I’m in the business, I hadn’t realized just how far the use of lidar and 3D data acquisition has come in the motor vehicle industry.

Maybe it’s because I’m not often in the market for Mercedes and BMWs, but their high-end cars are already making it so you can’t veer out of your lane or hit a pedestrian or have to cut off your cruise control when you roll up on some grandma going 50 on the highway. And those of you living in the San Francisco area have apparently been dealing with driverless cars pretty frequently lately: Google cars had logged some 140,000 miles by 2010, and the total is surely far higher now. Tom Vanderbilt, author of the Wired article, does a fantastic job of projecting the wonder and desirability of these lidar-shooting driverless vehicles.

Vanderbilt had ridden in Stanford’s car in 2008, doing 25 mph on closed-off roads. Now:

“This car can do 75 mph,” Urmson says. “It can track pedestrians and cyclists. It understands traffic lights. It can merge at highway speeds.” In short, after almost a hundred years in which driving has remained essentially unchanged, it has been completely transformed in just the past half decade.

It really is amazing. Just this video alone kind of blows my mind:


Pretty awesome for a 15-second video, right?

But perhaps the clearest indication to me, as a technology writer for the past seven years, is that this stuff is becoming less and less secretive and more and more matter-of-fact. The first time I interviewed Velodyne president Bruce Hall, in January 2011, he would only hint that the giant spinning soda cans on top of the Google cars were his. Now we get this matter-of-fact statement from the Wired article:

Google employs Velodyne’s rooftop Light Detection and Ranging system, which uses 64 lasers, spinning at upwards of 900 rpm, to generate a point cloud that gives the car a 360-degree view.
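That one sentence packs in a fair amount of geometry. Here's a rough sketch of the idea, and it is emphatically not Velodyne's or Google's actual software. Each laser fires at a fixed vertical angle while the head spins, so every range reading can be converted from (range, rotation angle, laser angle) into an x, y, z point. One full rotation of all the lasers gives you the 360-degree cloud. At the quoted 900 rpm, that works out to 15 rotations per second, or a fresh sweep roughly every 67 milliseconds. The laser angles, the one-degree azimuth step, and the function names below are all hypothetical and chosen for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def sweep_period_s(rpm: float) -> float:
    """Seconds for one full 360-degree rotation at the given spin rate."""
    return 60.0 / rpm


def to_cartesian(range_m: float, azimuth_deg: float, elevation_deg: float) -> Point:
    """Convert one laser return from sensor-centered spherical coordinates to x, y, z.

    azimuth_deg: rotation angle of the spinning head around the vertical axis.
    elevation_deg: fixed vertical angle of the individual laser (hypothetical here).
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # distance projected onto the ground plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


def build_sweep(ranges: List[List[float]], elevations_deg: List[float]) -> List[Point]:
    """Assemble one 360-degree rotation into a point cloud.

    ranges[i][j] is the reading of laser j at the i-th of len(ranges) evenly spaced
    azimuth steps; a value <= 0 is treated as "no return" and skipped.
    """
    steps = len(ranges)
    cloud: List[Point] = []
    for i, row in enumerate(ranges):
        azimuth = 360.0 * i / steps
        for r, elev in zip(row, elevations_deg):
            if r > 0:
                cloud.append(to_cartesian(r, azimuth, elev))
    return cloud


if __name__ == "__main__":
    # 64 hypothetical laser angles spread evenly from -24 to +2 degrees
    elevations = [-24.0 + i * (26.0 / 63) for i in range(64)]
    # A fake sweep: 1-degree steps, every laser seeing something 10 m away
    ranges = [[10.0] * 64 for _ in range(360)]
    cloud = build_sweep(ranges, elevations)
    print(len(cloud))            # 23040 points (360 steps x 64 lasers)
    print(sweep_period_s(900))   # about 0.067 s per rotation
```

A real unit also has to deal with per-laser calibration offsets and with the car moving during a sweep. That's part of why turning raw returns into a clean, usable cloud is harder than this makes it look.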

I certainly couldn’t put that in my article at the time. But then maybe if I’d been writing for Wired… Just kidding. This happens all the time. Usually, the big company doesn’t want the little company bragging that the big company just bought a bunch of its stuff. That’s usually because the big company isn’t sure the little company’s stuff will work exactly right, and it might want to scrap the whole project. At this point, with Brin doing his crowing, this project isn’t going anywhere.

It isn’t clear, though, that lidar will be the data capture method of choice for autonomous cars. Check out what Mercedes is working on:

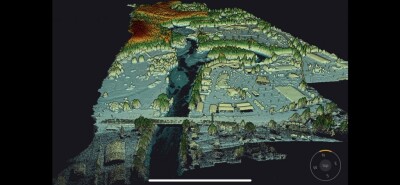

That’s why Mercedes has been working on a system beyond radar: a “6-D” stereo-vision system, soon to be standard in the company’s top models … As we start to drive, a screen mounted in the center console depicts a heat map of the street in front of us, as if the Predator were striding through Silicon Valley sprawl. The colors, largely red and green, depict distance, calculations made not via radar or laser but by an intricate stereo camera system that mimics human depth perception. “It’s based on the displacement of certain points between the left and the right image, and we know the geometry of the relative position of the camera,” Barth says. “So based on these images, we can triangulate a 3-D point and estimate the scene depth.” As we drive, the processing software is extracting “feature points”—a constellation of dots that outline each object—then tracking them in real time. This helps the car identify something that’s moving at the moment and also helps predict, as Krehl notes, “where that object should be in the next second.”
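What Barth describes is textbook stereo triangulation. Here's a minimal sketch, assuming two identical, rectified pinhole cameras mounted side by side. In that setup, a feature shows up on the same image row in both views, shifted horizontally by a "disparity" d. Depth then falls out as Z = f × B / d, where f is the focal length in pixels and B is the distance between the cameras. To mimic the "where that object should be in the next second" part, the sketch adds a naive constant-velocity guess from two frames. This is not Mercedes' actual software, since the article gives no implementation details. A production system would filter noisy measurements rather than simply difference two frames. The calibration numbers and names below are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple

Point3 = Tuple[float, float, float]


@dataclass
class StereoRig:
    """Hypothetical calibration of a rectified stereo camera pair."""
    focal_px: float    # focal length, in pixels
    baseline_m: float  # distance between the two camera centers, in meters
    cx: float          # principal point (optical center) column, in pixels
    cy: float          # principal point row, in pixels

    def triangulate(self, x_left_px: float, y_px: float, x_right_px: float) -> Point3:
        """Recover a 3D point (left-camera frame, meters) from one matched feature.

        Rectified images put the match on the same row, so the only shift is the
        horizontal disparity d = x_left - x_right, and depth is Z = f * B / d.
        """
        disparity = x_left_px - x_right_px
        if disparity <= 0:
            raise ValueError("expected positive disparity for a point in front of the rig")
        z = self.focal_px * self.baseline_m / disparity
        x = (x_left_px - self.cx) * z / self.focal_px
        y = (y_px - self.cy) * z / self.focal_px
        return (x, y, z)


def predict(p_prev: Point3, p_now: Point3, dt: float, horizon: float = 1.0) -> Point3:
    """Naive constant-velocity guess of where a tracked point will be `horizon` s ahead."""
    velocity = tuple((b - a) / dt for a, b in zip(p_prev, p_now))
    return tuple(p + v * horizon for p, v in zip(p_now, velocity))


if __name__ == "__main__":
    rig = StereoRig(focal_px=800.0, baseline_m=0.5, cx=640.0, cy=360.0)
    p0 = rig.triangulate(720.0, 360.0, 700.0)  # disparity 20 px -> 20 m away
    p1 = rig.triangulate(740.0, 360.0, 715.0)  # half a second later: 25 px -> 16 m
    print(p0, p1)                  # (2.0, 0.0, 20.0) (2.0, 0.0, 16.0)
    print(predict(p0, p1, 0.5))    # (2.0, 0.0, 8.0): closing at 8 m/s
```

Notice that depth precision depends on resolving small pixel shifts. A one-pixel error matters a lot more for a faraway object (small disparity) than a nearby one. That's one of the real trade-offs against lidar, which measures range directly.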

That’s videogrammetry at work, folks, and it may very well be that the cameras and software are cheaper to use, or more effective, than lidar. Maybe lidar is generating more information than the car actually needs. Only the guys in the trenches really know the answer to that.

Things will get really cool when all the cars are also rocking Wi-Fi and can communicate with each other in real time, giving each other virtual eye contact and virtual waves to let their autonomous counterparts go ahead. It’s really ancillary to the 3D question, but I had to pass along this vision of the intersection of the future, brought to us by Atlantic Cities riffing on Wired’s article:


Pretty hard not to just stare at that forever, right?

As for whether people actually WANT autonomous cars, I’ll leave that to the writers of the articles linked above. I have my own ideas, but they’re probably not worth a whole lot. I will say, however, that Vanderbilt got himself a great quote from Anthony Levandowski, business head of Google’s self-driving car initiative:

“The fact that you’re still driving is a bug,” Levandowski says, “not a feature.”