The geospatial industry has always been centered on data, and the demands for speed, efficiency, and accuracy are only increasing. AI is often promoted as a way to make geospatial workflows more efficient, but its processing demands can exceed the capabilities of typical hardware. Meeting these challenges will require not just better software, but smarter hardware. One emerging technology with the potential to reshape how data is collected and processed in the field is the neural processing unit (NPU), a specialized chip built specifically to accelerate artificial intelligence (AI) tasks.

While “neural processing” may sound like another tech buzzword, NPUs represent a genuine architectural shift. Their design allows them to run neural network operations that would strain a general-purpose CPU, and to do so with less power than a GPU typically draws. For geospatial applications where vast quantities of sensor, image, and point cloud data must be processed quickly, this could be transformative.

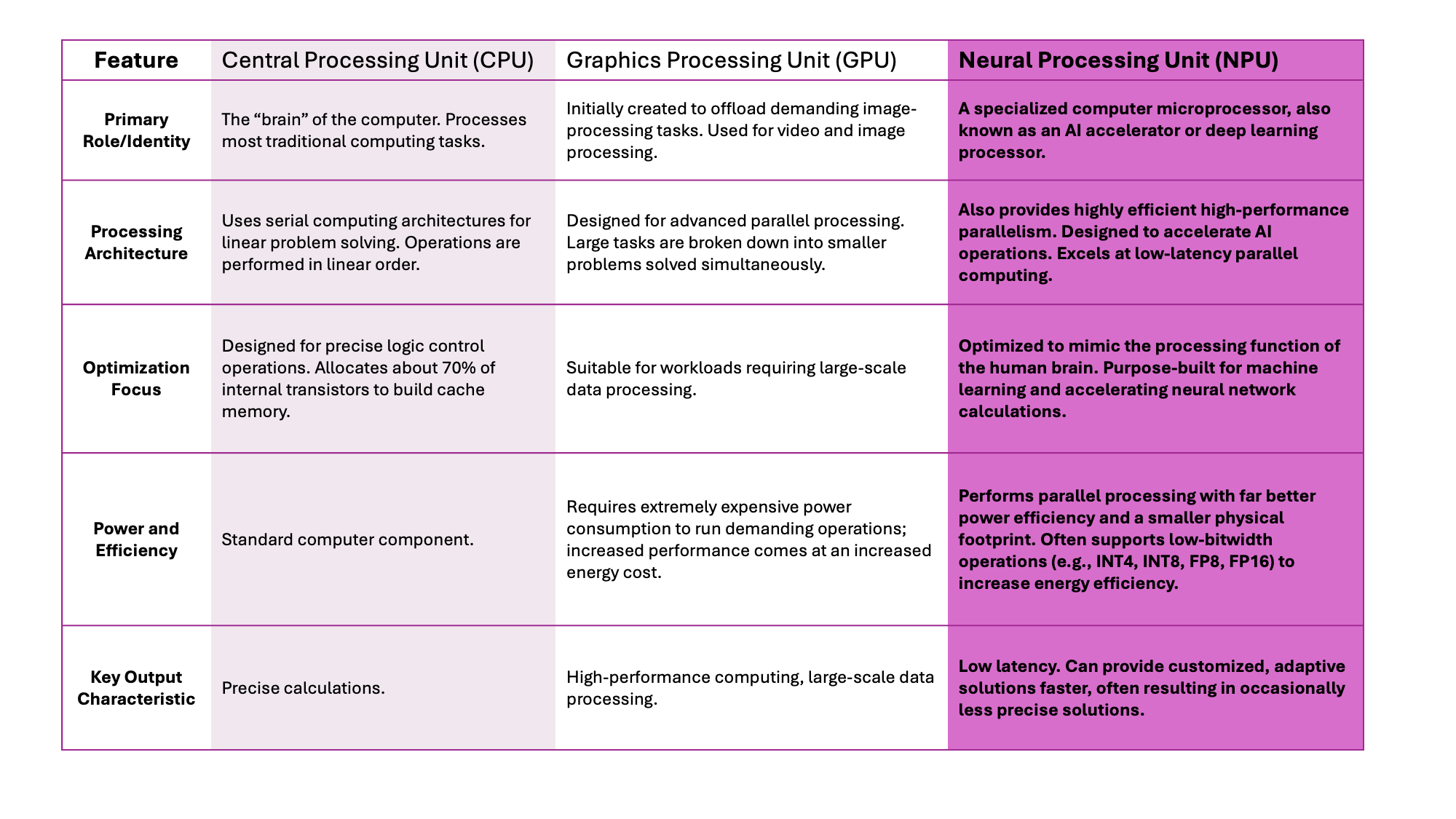

NPUs are loosely modeled on how neurons and synapses interact in the human brain. This enables them to execute deep learning operations directly on the hardware, reducing the need to offload heavy processing tasks to desktop computers or the cloud. Compared to GPUs, NPUs are smaller, more power-efficient, and purpose-built for AI workloads. That makes them particularly appealing for rugged, battery-powered devices used in surveying, mapping, and inspection.

What Exactly Is an NPU?

A neural processing unit (NPU) is a specialized microprocessor, also known as an AI accelerator or deep learning processor, designed to execute artificial neural networks and other machine learning workloads. Unlike general-purpose CPUs or GPUs, NPUs focus on performing the tensor and matrix operations that underpin AI inference and training. This focus makes them faster and more efficient for real-time, data-rich tasks like computer vision and object recognition.
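
To make "tensor and matrix operations" concrete, the sketch below shows one neural network layer reduced to its core arithmetic: a long run of multiply-accumulate steps. It assumes 8-bit integer quantization, a low-precision format that AI accelerators often favor to save power. The function names, scale factors, and sample values are illustrative assumptions, not the behavior of any particular NPU.

```python
def quantize(values, scale):
    """Map floats to the int8 range [-127, 127] using one scale factor."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def dense_int8(x_q, w_q, x_scale, w_scale):
    """Matrix-vector product on integers, rescaled to floats at the end."""
    outputs = []
    for row in w_q:
        # Integer multiply-accumulate: the operation accelerators parallelize.
        acc = sum(xi * wi for xi, wi in zip(x_q, row))
        outputs.append(acc * x_scale * w_scale)
    return outputs


# Illustrative input vector and weight matrix (assumed values).
x = [0.5, -1.0, 0.25]
w = [[0.2, 0.4, -0.6], [1.0, 0.0, 0.5]]
x_scale = w_scale = 1.0 / 127

x_q = quantize(x, x_scale)
w_q = [quantize(row, w_scale) for row in w]

y_int8 = dense_int8(x_q, w_q, x_scale, w_scale)
y_float = [sum(xi * wi for xi, wi in zip(x, row)) for row in w]
print(y_int8, y_float)
```

The integer result tracks the floating-point result closely while using much cheaper arithmetic, which is roughly the trade that makes this class of hardware efficient.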

Where NPUs Make a Difference

NPUs excel at low-latency parallel computing, breaking down large problems into smaller components that can be processed simultaneously. This makes them well-suited for interpreting fast-changing sensor or imagery inputs in real time, which is a capability increasingly vital for autonomous systems and field-based geospatial tools.
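
The idea of breaking a large problem into independent pieces can be sketched in software. The example below splits a raster into tiles and hands them to a pool of workers. It is only an analogy for the parallelism an NPU provides in hardware, and `split_into_tiles`, `summarize_tile`, and the raster dimensions are hypothetical names and values chosen for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_tiles(height, width, tile):
    """Return (row, col, h, w) windows that cover the raster without overlap."""
    windows = []
    for r in range(0, height, tile):
        for c in range(0, width, tile):
            windows.append((r, c, min(tile, height - r), min(tile, width - c)))
    return windows


def summarize_tile(window):
    """Hypothetical stand-in for per-tile inference: returns the pixel count."""
    _, _, h, w = window
    return h * w


# Assumed raster of 1000 x 1500 pixels, split into tiles of up to 512 x 512.
windows = split_into_tiles(1000, 1500, 512)

# Each tile is independent, so the tiles can be processed concurrently.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(summarize_tile, windows))

print(len(windows), sum(results))
```

Because no tile depends on another, the work scales with the number of available workers, which is the property that parallel hardware exploits.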

Their efficiency also makes them ideal for edge devices: instruments that need to make intelligent decisions in the field without constant connectivity. For example, the newly released Leica TS20 features onboard NPUs to enable faster AI-driven processing directly on the instrument.

Beyond efficiency, NPUs can unlock advanced modeling techniques like Neural Radiance Fields (NeRFs), an emerging method for reconstructing 3D scenes from 2D images. NeRFs can infer geometry even in environments with poor lighting, reflective surfaces, or complex structures, where traditional photogrammetry would struggle. Accelerating NeRF computations with NPUs could allow surveyors and mappers to build more detailed, realistic 3D models directly in the field.

The Bigger Shift

The integration of NPUs into tools like the Leica TS20 signals a major shift toward more autonomous, intelligent, and connected equipment. By pushing AI capabilities closer to the source of data collection, NPUs reduce latency, improve efficiency, and open the door to on-device learning and adaptive decision-making.

NPUs may still be in the early stages of adoption, but their potential to reshape geospatial workflows seems clear. As sensors and instruments become smarter and more capable, the distinction between data collection and data analysis will continue to blur. The industry is moving toward a future where devices don’t just capture information; they interpret and act on it in real time. For geospatial professionals, that means a faster, more intelligent path from measurement to insight, and a technology to follow closely over the next few years.