By Stéphane Côté, Fellow at Bentley Systems

Augmented reality (AR) is a fascinating technology that could change the way we live and interact with the world around us. However, integrating digital elements convincingly with reality is very challenging. Engineering applications of AR are especially demanding, because accuracy matters so much in that field.

Why AR accuracy is a challenge

For a tablet display to show the virtual elements at the right location, the AR app must know the position and orientation of the tablet in real time. That problem is rather hard to solve accurately on a handheld device because it may require heavy calculations and multiple sensors, and these are not easily provided in a device limited by CPU power, battery capacity and size…

To solve this problem, early solutions used markers (such as QR codes) to perform tracking. The tablet’s camera captures live video of a scene that shows a marker, and the AR app calculates the tablet’s position based on the shape and orientation of the marker as it appears in the video. Markers work quite well, but they are only useful while they are visible in the camera view, so you may need to install and survey many of them.
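To see why a marker’s appearance in the image carries position information, consider the simplest case: a marker of known physical size facing the camera head-on. Under a pinhole camera model, its apparent size shrinks in proportion to its distance. The sketch below only illustrates that relation and is not how any particular AR app works; the function name and the example values are made up, and real marker trackers recover the full position and orientation from the marker’s corners.

```python
def marker_distance(focal_length_px: float,
                    marker_size_m: float,
                    apparent_size_px: float) -> float:
    """Distance (in metres) to a marker that faces the camera head-on.

    Uses the pinhole relation: apparent_size = focal_length * real_size / distance.
    """
    if apparent_size_px <= 0:
        raise ValueError("apparent_size_px must be positive")
    return focal_length_px * marker_size_m / apparent_size_px


# Hypothetical values: a 0.20 m marker that appears 100 px wide
# in a camera whose focal length is 1000 px.
print(marker_distance(1000.0, 0.20, 100.0))
```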

Later, more advanced computer vision techniques made tracking without markers possible. Those rely on visual features, such as edges and corners. They save you from installing markers, but because all of those techniques are based on video, factors like poor lighting or low contrast sometimes result in augmentations that appear “shaky” or that are displayed at the wrong location. Although recent progress has been immense, science has not yet solved the camera tracking problem robustly enough for “anywhere” augmentation.

A better solution?

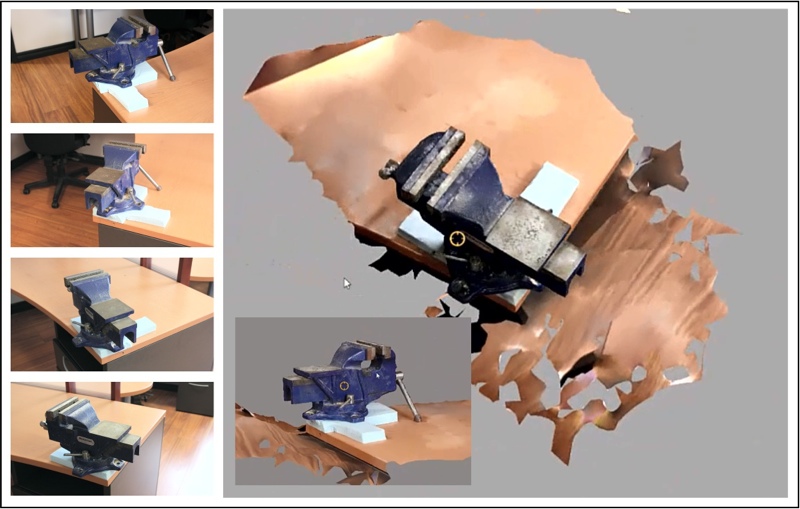

Knowing the camera position is useful for applications beyond AR. Take, for instance, the technology that converts photos into meshes, such as Bentley Systems’ ContextCapture technology.

A model of a vice generated with ContextCapture

To achieve such meshes from photos, the ContextCapture process must first go through a step called “aerotriangulation” (AT), a process that matches photo features and accurately establishes the relative position and orientation of each photo. The process is offline, and can take several minutes to complete – but it results in very accurate estimates of… the camera position! So we thought: why couldn’t we use those position estimates to augment the corresponding photos?

To test our hypothesis, we tried the following:

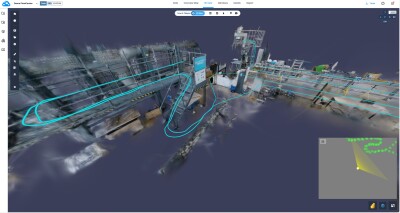

- We flew a drone around our local Bentley office, capturing video during the construction of an extension to the second floor.

- We extracted all the frames from that video, and used our ContextCapture technology to create a 3D mesh of the scene.

- We then aligned the resulting mesh with a BIM model of our building.

- Finally, we used the camera positions and orientations calculated during aerotriangulation to augment each frame with the BIM model.
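
The last step above amounts to projecting BIM geometry into each frame using that frame’s camera pose. Here is a minimal sketch, assuming an ideal pinhole camera with no lens distortion. The function name, the pose convention (a world-to-camera rotation matrix plus the camera centre in world coordinates) and the numeric values are illustrative assumptions, not ContextCapture’s API or output format.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]


def project_point(point_world: Vec3,
                  rotation_world_to_cam: Mat3,
                  camera_center: Vec3,
                  fx: float, fy: float,
                  cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Project a 3D world point to pixel coordinates, or None if behind the camera."""
    # Express the point relative to the camera centre...
    d = [p - c for p, c in zip(point_world, camera_center)]
    # ...then rotate it into the camera's axes (z points forward).
    x, y, z = (sum(r * v for r, v in zip(row, d)) for row in rotation_world_to_cam)
    if z <= 0:
        return None  # the point is behind the camera and cannot be drawn
    # Perspective division followed by the intrinsic parameters.
    return (fx * x / z + cx, fy * y / z + cy)


# Hypothetical pose: camera at the origin, axes aligned with the world.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
bim_vertex = (1.0, 0.5, 10.0)  # a BIM vertex 10 m in front of the camera
print(project_point(bim_vertex, identity, (0.0, 0.0, 0.0),
                    1000.0, 1000.0, 960.0, 540.0))  # (1060.0, 590.0)
```

Repeating this for every visible BIM vertex in every frame, each with its own estimated pose, gives the overlay; the steadiness of the result depends directly on how accurate those poses are.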

The results show a very steady augmentation, well aligned from frame to frame.

AR for change detection

Of course, this can’t exactly be called augmented reality – because it is not live. The augmentation took several minutes to compute, because of the AT process. On the other hand, it resulted in very steady augmentation.

Though not live, this technology could be very useful on a building site. Think, for instance, of monitoring the site on a daily basis, trying to identify delays or mistakes in the construction process. The accurate overlay of the BIM model with reality would enable easy localization of discrepancies, and facilitate the identification of those mistakes.

Here’s how that could work. One could have a drone flying around the site on a regular basis, taking photos and uploading those to the cloud, where the 3D mesh would be continuously generated and aligned with the BIM model. The photos would then be automatically augmented with the BIM model, and be available for download a few minutes later.

A user could view those augmented photos from a remote location and easily identify delays or mistakes in the construction process. This technique would save the user several visits to the site, and it has other benefits as well: it would enable more frequent monitoring, the augmentation would likely be much steadier and more accurate than handheld augmentation on site, and it would be available from a multitude of vantage points that one could not reach while walking around the site using live AR on a tablet…
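
If points can be sampled from both the photo-derived mesh and the BIM model once they are aligned, part of that comparison could in principle be automated. The sketch below is a hypothetical illustration and not part of the workflow described here: it flags as-built points that lie farther than a tolerance from every as-designed point, using a brute-force nearest-neighbour search. All names and values are assumptions.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def flag_discrepancies(as_built: Sequence[Point],
                       as_designed: Sequence[Point],
                       tolerance_m: float) -> List[Point]:
    """Return the as-built points farther than tolerance_m from all as-designed points."""
    if not as_designed:
        raise ValueError("as_designed must contain at least one point")
    flagged = []
    for p in as_built:
        # Brute-force search; a spatial index would be needed for real point counts.
        nearest = min(math.dist(p, q) for q in as_designed)
        if nearest > tolerance_m:
            flagged.append(p)
    return flagged


# Hypothetical samples (metres): one as-built point is 0.3 m off the design.
designed = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
built = [(0.0, 0.01, 0.0), (1.0, 0.3, 0.0), (2.0, 0.0, 0.02)]
print(flag_discrepancies(built, designed, tolerance_m=0.05))
```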

Such a solution would do a great job for large infrastructure projects, at least as far as the outer shell of the asset is concerned. That said, it is not uncommon nowadays to see drones equipped with range-sensing technology that lets them fly inside buildings, “seeing” and avoiding obstacles and finding their way by creating a map. I am sure you can imagine what lies ahead…