Considering all the ways the Kinect has been hacked so far, it was probably only a matter of time before the minds at MIT put the toy to good use. And their first shot out of the gate is pretty impressive.

Like a number of organizations, they’ve attacked the challenge of indoor mobile mapping (in a GPS-denied environment). While others have used quadcopters and carts, MIT has gone with the human-carried platform, as outlined in this paper, prepared for the International Conference on Intelligent Robots and Systems 2012, coming up next month in Portugal. They developed the system with money from the Air Force and Navy, so it’s pitched as being good for search and rescue and other security/safety operations. Worn over the torso, the package includes:

A Microsoft Kinect RGB-D sensor, Microstrain 3DM-GX3-25 IMU, and Hokuyo UTM-30LX LIDAR. The electronics backpack includes a laptop, 12V battery (for the Kinect and Hokuyo) and a barometric pressure sensor. The rig is naturally constrained to be within about 10 degrees of horizontal at all times, which is important for successful LIDAR-based mapping (see Section V-B). An event pushbutton, currently a typical computer mouse, allows the user to ‘tag’ interesting locations on the map.

The Hokuyo is more of a rangefinder than a “laser scanner.” It’s got a range of 30 meters, though, and a field of view of 270 degrees, so, like the Sick scanners, people are using them for 3D data capture even though the manufacturers might not market them that way. (As an aside, I love the disclaimer at the bottom of the UTM-30LX page: “Hokuyo products are not developed and manufactured for use in weapons, equipment, or related technologies intended for destroying human lives or creating mass destruction.” That’s comforting.)

The MIT team also uses SLAM technology to piece all the information together. However, unlike the Viametris solution, for example, MIT’s rig has no wheels that could provide odometry, so that can’t be used to figure out where the wearer of the little backpack is (the IMU is being used mostly to account for pitch and roll, from what I can tell). Their solution also had to deal with multiple floors and with the fact that the wearer might tilt his or her body at any time, making the pose less than fixed.

How did they solve these problems? “Our system achieves robust performance in these situations by exploiting state-of-the-art techniques for robust pose graph optimization and loop closure detection.”

Well, obviously. Seriously, though, you’ll have to read the paper if you want the gory details. They’re all there, and I’ll readily admit some of it’s over my head. One of the cool things, though, is how they’re using the Kinect (which you might be wondering about, since they’ve also got the lidar on board). Basically, they’re using the Kinect to drive a feature-recognition system. The Kinect builds a database of images that the system then checks against all the time so that it knows when a user is traversing the same terrain over again. If so, the map is updated with more accurate information. In this way, the maps get better with repeated travels.

And what about that barometer? Well, since stairwells are relatively featureless and users tend to whip around corners, the system uses the barometer to tell it when the user has traveled to another floor.
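For a rough feel of how a pressure reading can turn into a floor number, here is a small Python sketch. It uses the standard international barometric formula to convert pressure to altitude, which may not be what the MIT team does. The 3.5 meter floor height and the function names are my own assumptions, purely for illustration.

```python
def pressure_to_altitude_m(pressure_hpa, sea_level_hpa=1013.25):
    """International barometric formula: pressure (hPa) to altitude (m)."""
    return 44330.0 * (1.0 - (pressure_hpa / sea_level_hpa) ** (1.0 / 5.255))


def floor_index(pressure_hpa, ground_pressure_hpa, floor_height_m=3.5):
    """Estimate the floor relative to where the baseline pressure was recorded.

    floor_height_m is an assumed, building-specific value.
    """
    climbed_m = (pressure_to_altitude_m(pressure_hpa)
                 - pressure_to_altitude_m(ground_pressure_hpa))
    return round(climbed_m / floor_height_m)


# Baseline taken on the ground floor, then a reading about 0.42 hPa lower.
ground = 1013.25
current_floor = floor_index(ground - 0.42, ground)
```

Near sea level, pressure drops by roughly 0.12 hPa per meter climbed, so a single floor shows up as a change of a few tenths of a hectopascal. Ambient pressure also drifts with the weather, so a sketch like this only holds up over a short walk unless the baseline is refreshed.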

I haven’t seen that before.

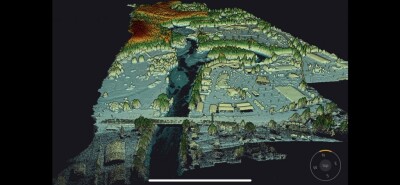

Want to see the data in action? Here’s a brief video MIT put together to tout the system. You’ll get the general idea:

Not bad, right? It’s more of a 2D map they’re creating, but there are other images in the paper of exports that have multiple floors and look more 3D when you navigate them. This kind of multi-sensor integration is the wave of the future, in my opinion. By combining more (though possibly less expensive) sensors, systems like this should improve the data while bringing the cost down, which will hopefully make the technology more accessible.