A team from the Dalle Molle Institute for Artificial Intelligence and the Robotics and Perception Group at the University of Zurich has developed a powerful way for drones to navigate autonomously: they’ve taught the drone to recognize what its camera sees.

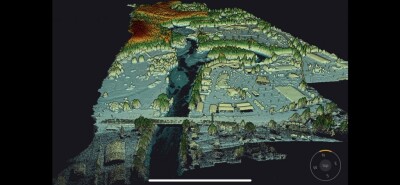

Generally, drones don’t use their camera for sense-and-avoid. Systems such as the new DJI Phantom 4 rely on additional sensors like LiDAR or sonar. Unfortunately, these sensors are either cost-prohibitive compared to a camera, or not sufficiently effective to warrant the extra expense.

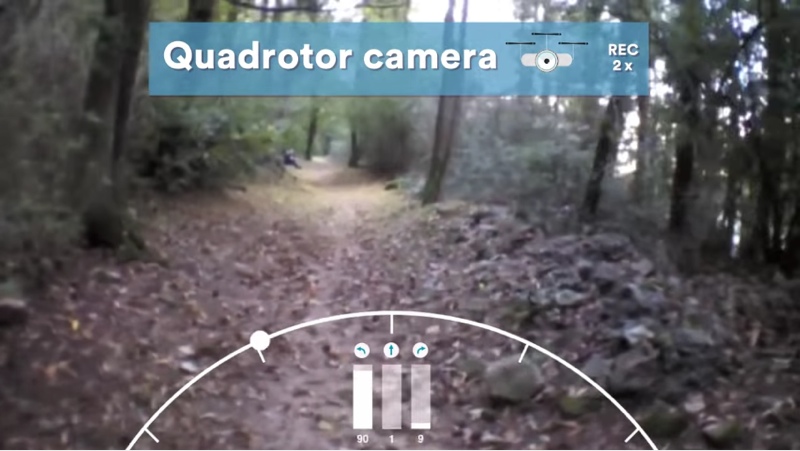

This team used the “monocular” image captured by a single camera onboard a drone to enable that drone to perceive forest and mountain trails for navigation.

Instead of using low-level features such as contrast, the team designed the system using a “different approach based on a Deep Neural Network used as a supervised image classifier. By operating on the whole image at once, our system computes the main direction of the trail compared to the viewing direction.”

Again, this means that the drone recognizes what its camera is capturing and makes informed decisions about how to proceed, just as a human being would. If the trail is straight ahead, the drone speeds up. If the trail turns to the left or right, the drone slows down and turns itself to stay aligned.
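To make that behavior concrete, here is a minimal Python sketch of how a classifier's output could be turned into flight commands. It is an illustration under assumptions, not the team's actual controller: the function name `steer`, the `Command` type, the speed and yaw limits, and the sign convention (positive yaw means turn right) are all hypothetical. It assumes the classifier reports three probabilities: that the trail lies to the left, straight ahead, or to the right.

```python
from dataclasses import dataclass


@dataclass
class Command:
    forward_speed: float  # metres per second
    yaw_rate: float       # radians per second; positive = turn right (assumed convention)


def steer(p_left: float, p_straight: float, p_right: float,
          max_speed: float = 2.0, max_yaw_rate: float = 1.0) -> Command:
    """Map trail-direction probabilities to a speed and turn command.

    Hypothetical rule: fly faster the more confident the classifier is that
    the trail is straight ahead, and turn toward whichever side is more likely.
    """
    if min(p_left, p_straight, p_right) < 0:
        raise ValueError("probabilities must be non-negative")
    total = p_left + p_straight + p_right
    if total <= 0:
        raise ValueError("probabilities must sum to a positive value")

    # Normalise so the three values sum to 1, in case the inputs are raw scores.
    p_left, p_straight, p_right = (p / total for p in (p_left, p_straight, p_right))

    return Command(
        forward_speed=max_speed * p_straight,
        yaw_rate=max_yaw_rate * (p_right - p_left),
    )
```

With this rule, a confident "straight ahead" reading gives full speed and no turn, while a confident "left" reading brings the drone to a stop and turns it left at the maximum rate. Anything in between blends the two, so the drone slows down gradually as the trail bends.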

Surprisingly, the drones equipped with this system are shown to “yield accuracy comparable to the accuracy of humans which are tested on the same task.”

For more details, see the video.