How’s this for a paragraph to get you excited?

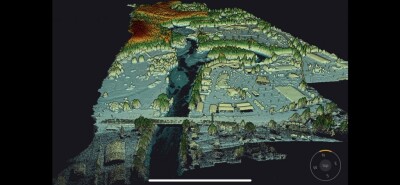

Now imagine a device that provides more-accurate depth information than the Kinect, has a greater range and works under all lighting conditions — but is so small, cheap and power-efficient that it could be incorporated into a cellphone at very little extra cost. That’s the promise of recent work by Vivek Goyal, the Esther and Harold E. Edgerton Associate Professor of Electrical Engineering, and his group at MIT’s Research Lab of Electronics.

A laser scanner in my cell phone? Sign me up!

Unfortunately, that paragraph might be over-reaching just a bit (we writers are shameless!). But this new technique of creating depth maps, called CoDAC (short for “Compressive Depth Acquisition Camera”), is intriguing nonetheless.

I came across the above paragraph in an article put together by MIT’s news arm, and it does a great job of getting you excited about this new technology’s capabilities and possibilities. Essentially, using commercial-off-the-shelf technology, these MIT researchers have built upon the time-of-flight principle of 3D data acquisition and created a very inexpensive way to get depth information. Sort of.

When you navigate to the team’s home page (they’re the Signal Transformation and Information Representation Group), you’ll find lots of great information about what they’re up to, including this part of the FAQ:

7. What are the challenges in making CoDAC work?

There are several challenges to making CoDAC work. The most important challenge comes from the fact that measurements do not give linear combinations of scene depths. For this reason, standard compressed sensing techniques do not apply. The light signal measured at the photodetector is a superposition of the time-shifted and attenuated returns corresponding to the different points in the scene. However, extracting the quantities of interest (distances to various scene points) is difficult because the measured signal parameters nonlinearly encode the scene depths. Since we integrate all the reflected light from the scene, this nonlinearity worsens with the number of scene points that are simultaneously illuminated. Without a novel approach to interpreting and processing the measurements, little useful information can be extracted from the measurements. The superposition of scene returns at the single detector results in complete loss of spatial resolution.

Hmmm. “Little useful information can be extracted from the measurements.” That would seem to be a hurdle for most of you working with commercial applications of acquiring 3D data. For now, they can also only create depth maps for scenes where all the objects are basically flat, which is again quite a limitation.
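If the FAQ's point about nonlinearity feels abstract, here is a toy sketch of it in Python. To be clear, this is my own illustration, not the MIT group's code or method: the Gaussian pulse shape, the 1 ns pulse width, the sample spacing and the function names are all assumptions I made up for the example. It models a single detector that sums attenuated, time-shifted returns, and shows that the signal adds up nicely across scene points but not across depths.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def pulse(t, width=1e-9):
    """Hypothetical Gaussian laser pulse centred at t = 0 (assumed shape)."""
    return math.exp(-0.5 * (t / width) ** 2)


def detector_signal(depths, reflectances, times):
    """Sum of attenuated, time-shifted returns seen by one photodetector."""
    signal = []
    for t in times:
        total = 0.0
        for depth, reflectance in zip(depths, reflectances):
            delay = 2.0 * depth / C  # round-trip time of flight
            total += reflectance * pulse(t - delay)
        signal.append(total)
    return signal


times = [i * 0.25e-9 for i in range(200)]  # samples from 0 to about 50 ns

near = detector_signal([1.0], [1.0], times)  # one point at 1 m
far = detector_signal([2.0], [1.0], times)   # one point at 2 m

# Lighting both points at once just adds their returns together...
both = detector_signal([1.0, 2.0], [1.0, 0.5], times)
superposition_gap = max(
    abs(b - (n + 0.5 * f)) for b, n, f in zip(both, near, far)
)

# ...but depth sits inside the time shift, so a point at 1 m + 2 m = 3 m
# does not produce the sum of the 1 m and 2 m signals.
at_three_metres = detector_signal([3.0], [1.0], times)
depth_gap = max(
    abs(s - (n + f)) for s, n, f in zip(at_three_metres, near, far)
)

# With a single return, plain time of flight works: find the peak, halve the trip.
peak_time = times[near.index(max(near))]
estimated_depth = C * peak_time / 2.0

print(f"superposition gap: {superposition_gap:.2e}")
print(f"depth-linearity gap: {depth_gap:.3f}")
print(f"estimated depth for the 1 m point: {estimated_depth:.3f} m")
```

With one point in view, reading the depth off the peak is easy. Once many points are lit at the same time, the detector sees only the blended sum, which is why the FAQ says a new way of interpreting the measurements is needed.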

Still, they’re not saying it’s a finished product by any means, and the size and cost efficiencies they’ve created are incredibly tantalizing. This is certainly important work, well worth following.

How does it all work? Best to let the MIT brain do the explaining: